The AI Tutor That Ensures Students Actually Think.

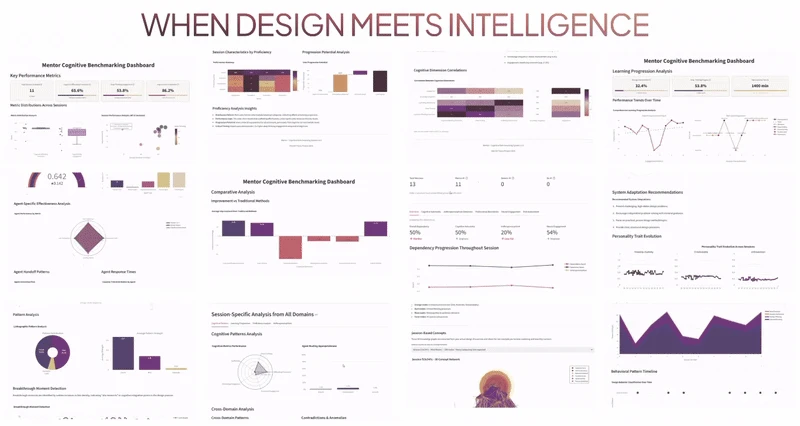

On one side, a student sketching a concept while an AI asks "Why this approach? Who is it for?" On the other, a dashboard showing cognitive engagement spiking. MENTOR doesn't just give answers. It ensures deeper thinking and real learning in the process.

What's the Cost of Convenience?

Picture a student facing a tough assignment. Midnight. Deadline tomorrow. Blank page.

Two options: struggle through it, or ask an AI for a ready-made solution.

Tempting. But if the AI does the thinking, what happens to the ability to think?

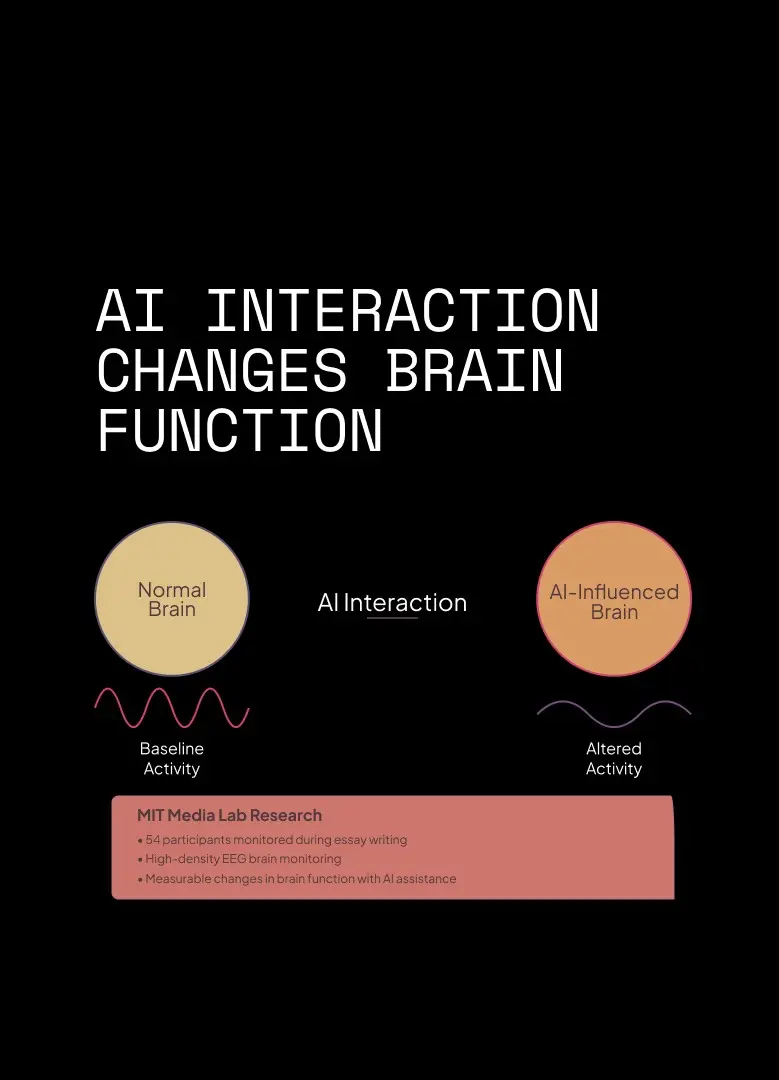

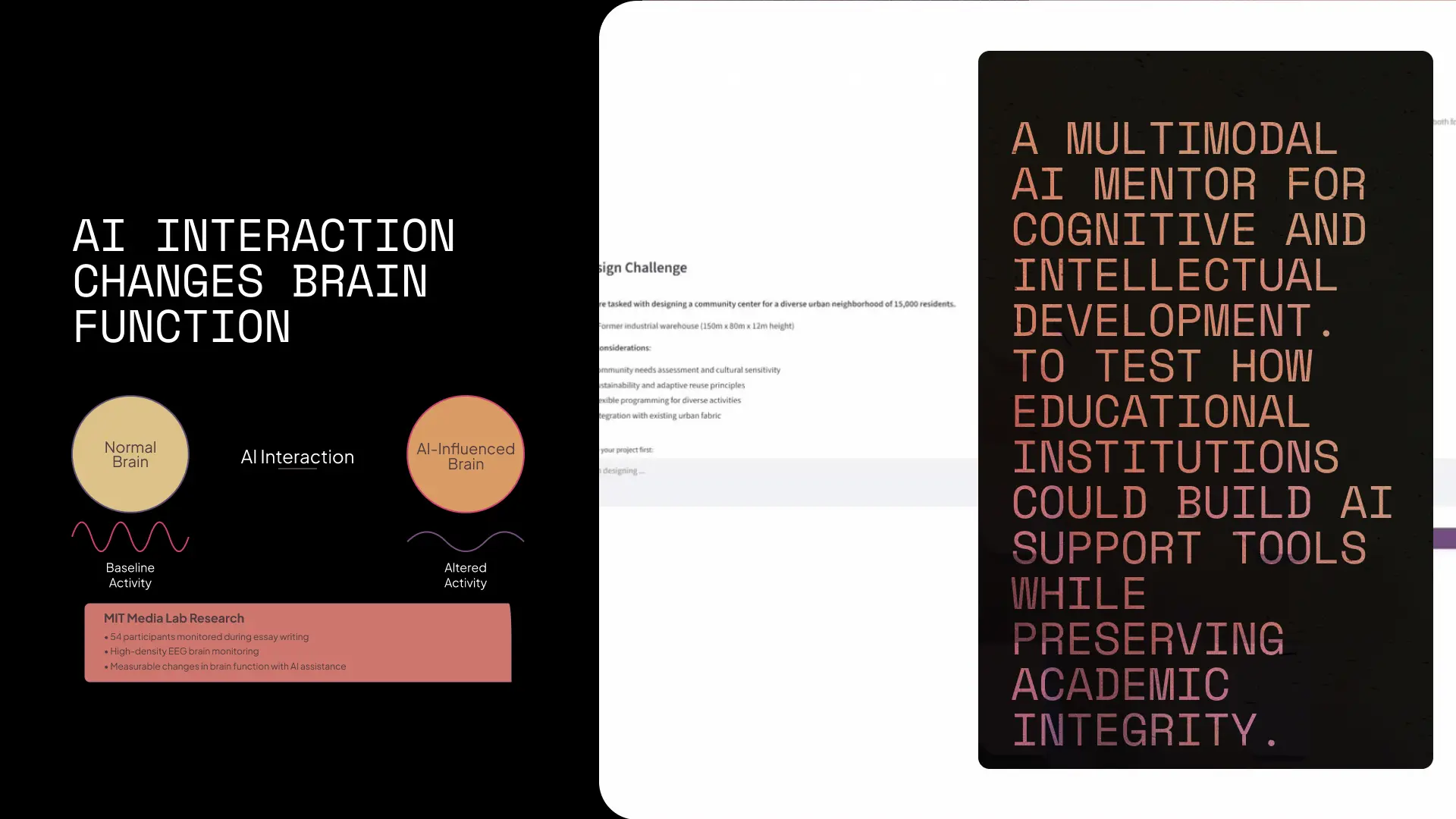

This isn't hypothetical. MIT Media Lab ran a study. They strapped EEG headbands on students while they worked on essay tasks. One group used ChatGPT. Another used a normal search engine. A third worked completely on their own.

The results were a wake-up call.

The ChatGPT group's brains showed the lowest engagement. They increasingly zoned out and let the AI take over. By the final essay, many were literally copying and pasting with minimal personal input. Teachers called the essays "soulless."

The students who didn't use AI had the highest neural activity and more original work. They were more curious, more invested, and felt prouder of their output.

The researchers called it "cognitive offloading." Dumping mental work onto the machine. Especially pronounced in young learners.

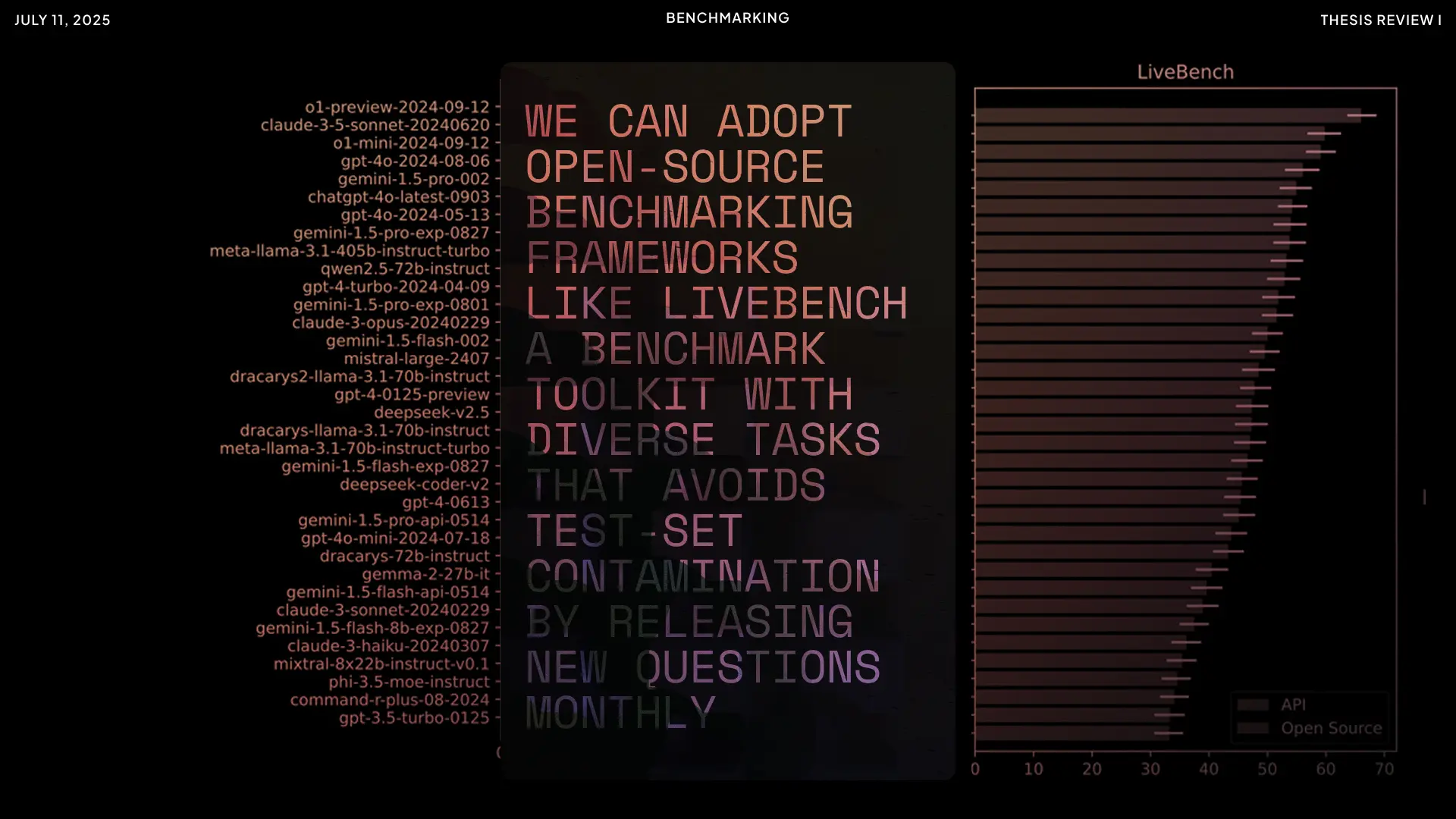

Meanwhile, educational institutions worldwide are handing students access to tools like ChatGPT without understanding the implications or long-term effects on cognitive development. Some are even developing proprietary AI tools that skip accountability entirely by not collecting or analyzing the right type of data. Worse still, many don't benchmark their solutions against any valid learning outcome KPIs. They're flying blind, hoping technology alone equals progress.

If students offload the heavy lifting to AI, they might never develop the intuition to solve ambiguous problems or the grit to iterate through tough constraints. The risk is real: graduates who can generate flashy AI-assisted work, yet can't critique or evolve that work because they skipped the critical thinking stage entirely.

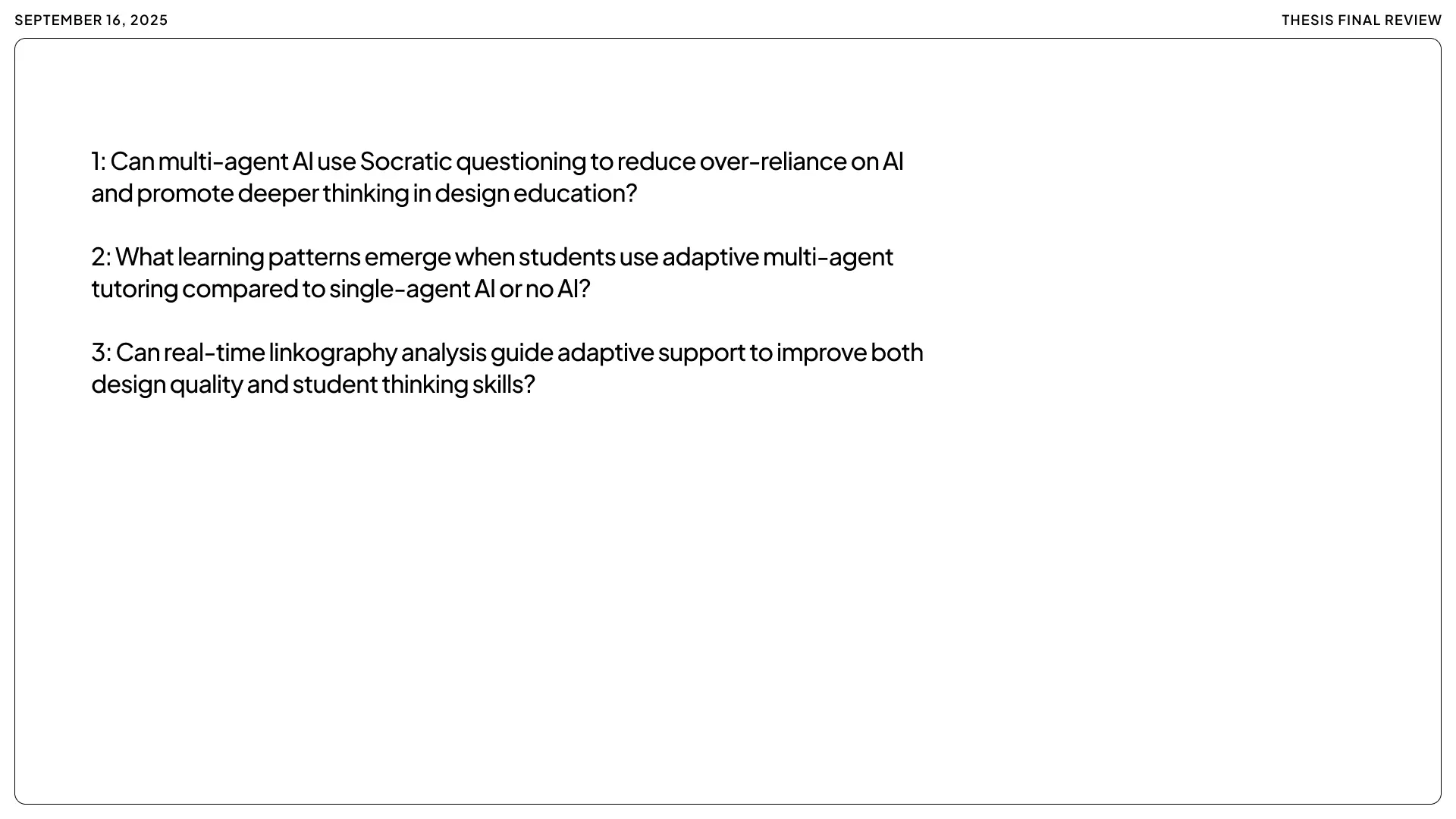

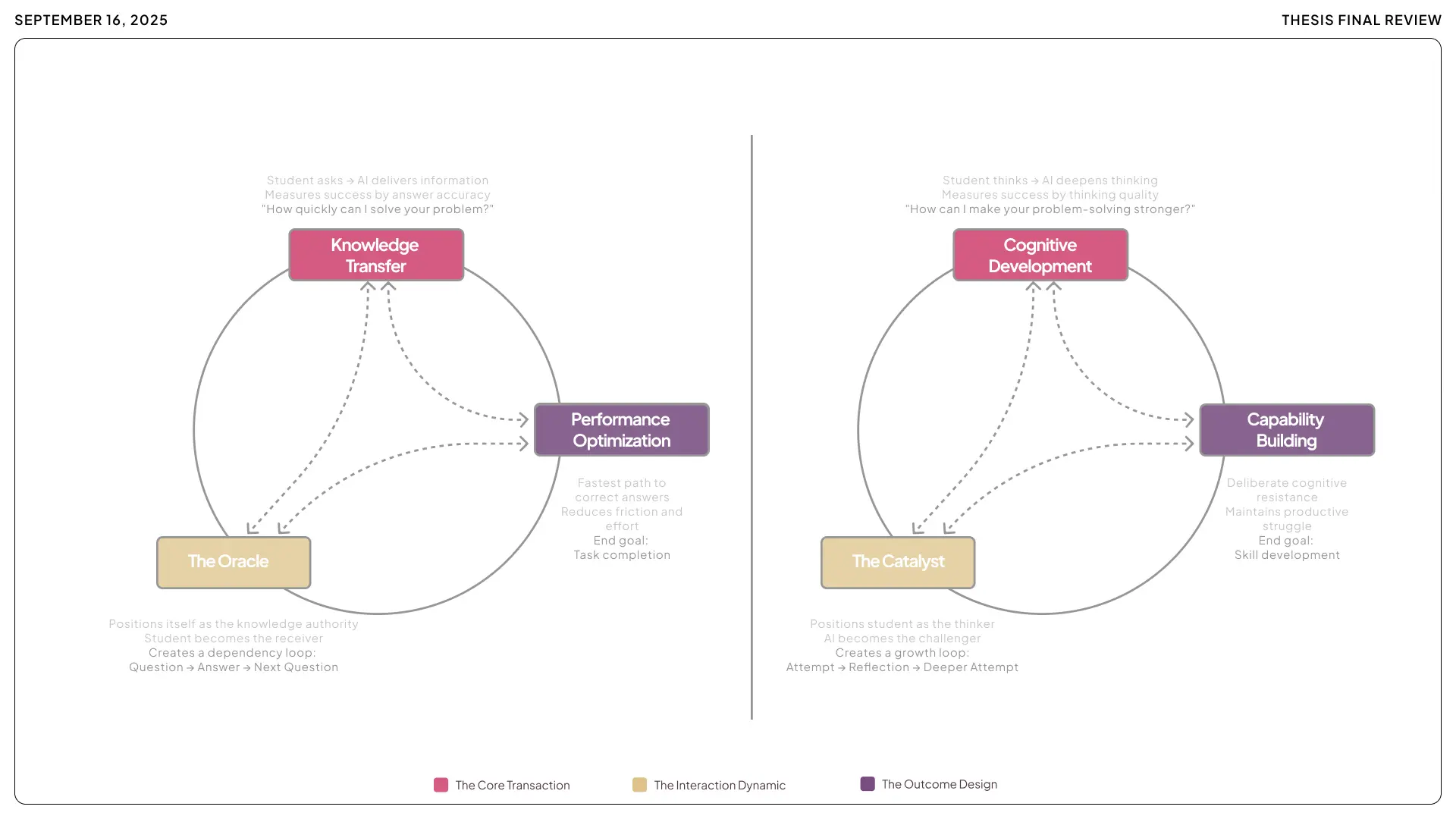

Rethinking What an AI Tutor Should Be.

The default model of AI help had to go. Ask a question, get an answer. That model turns AI into a well-intentioned genie granting wishes instantly.

What students actually need is more like a personal trainer. Someone who spots, pushes, but doesn't lift the weight for them.

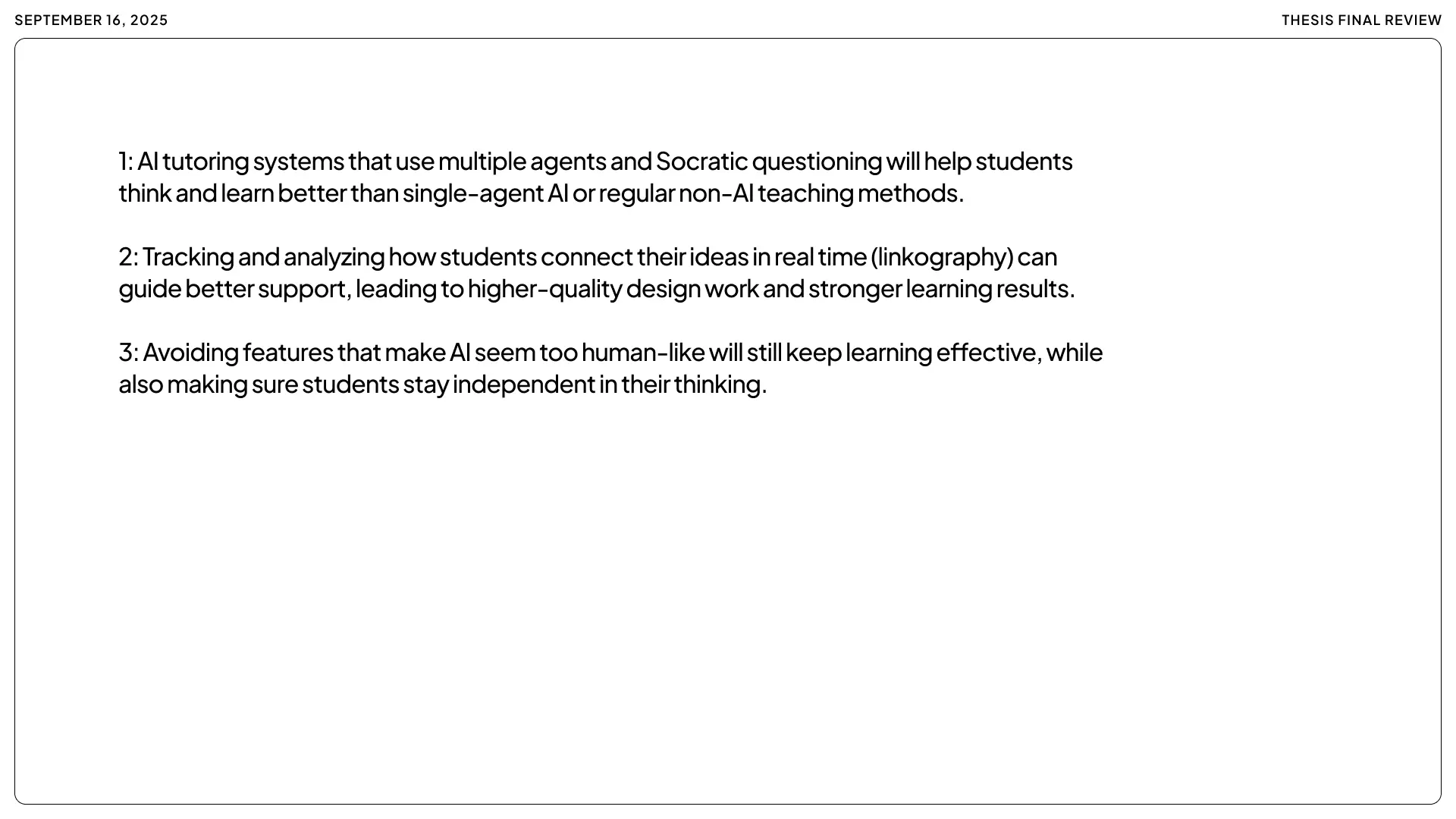

MENTOR was grounded in two educational philosophies.

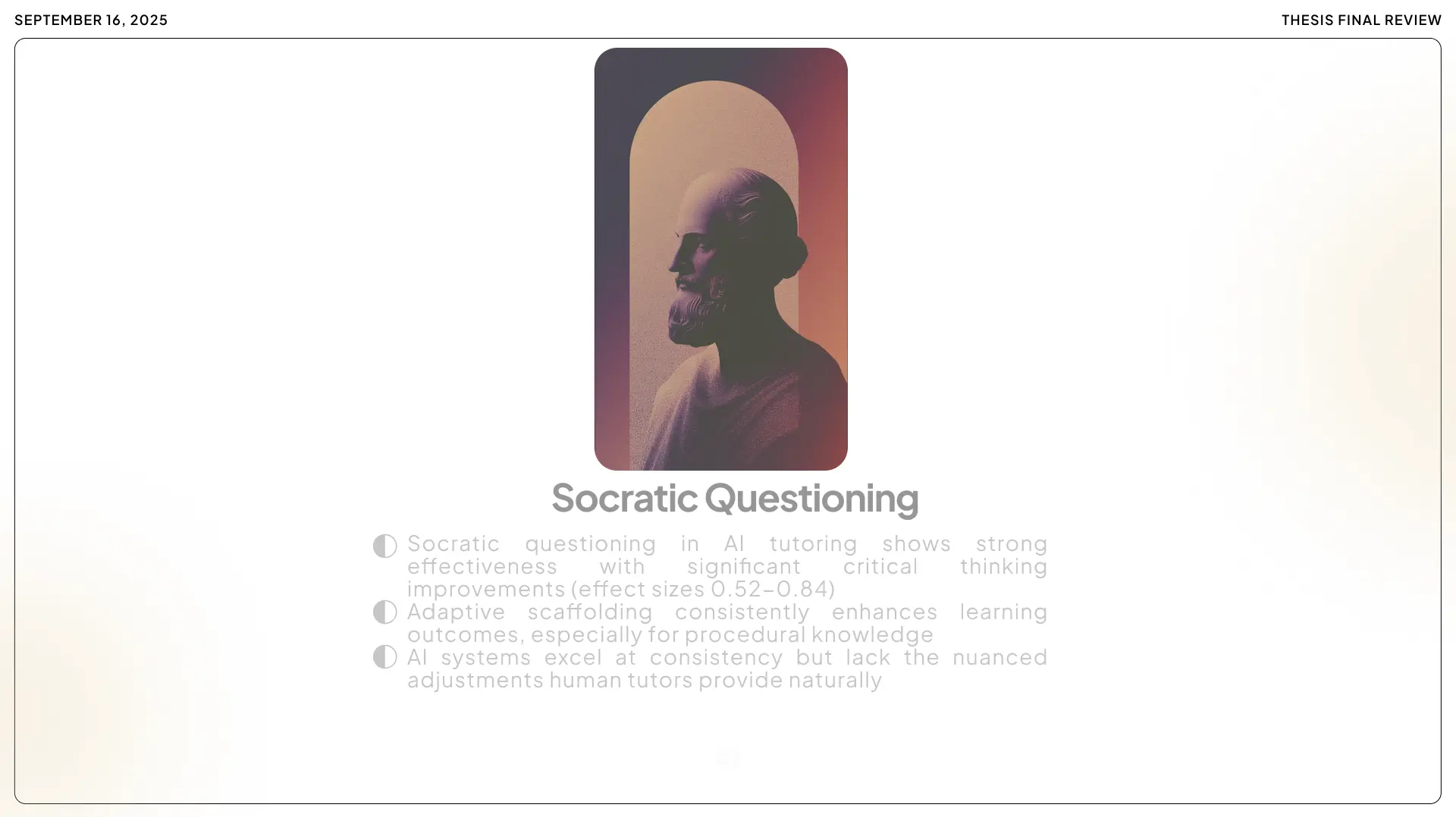

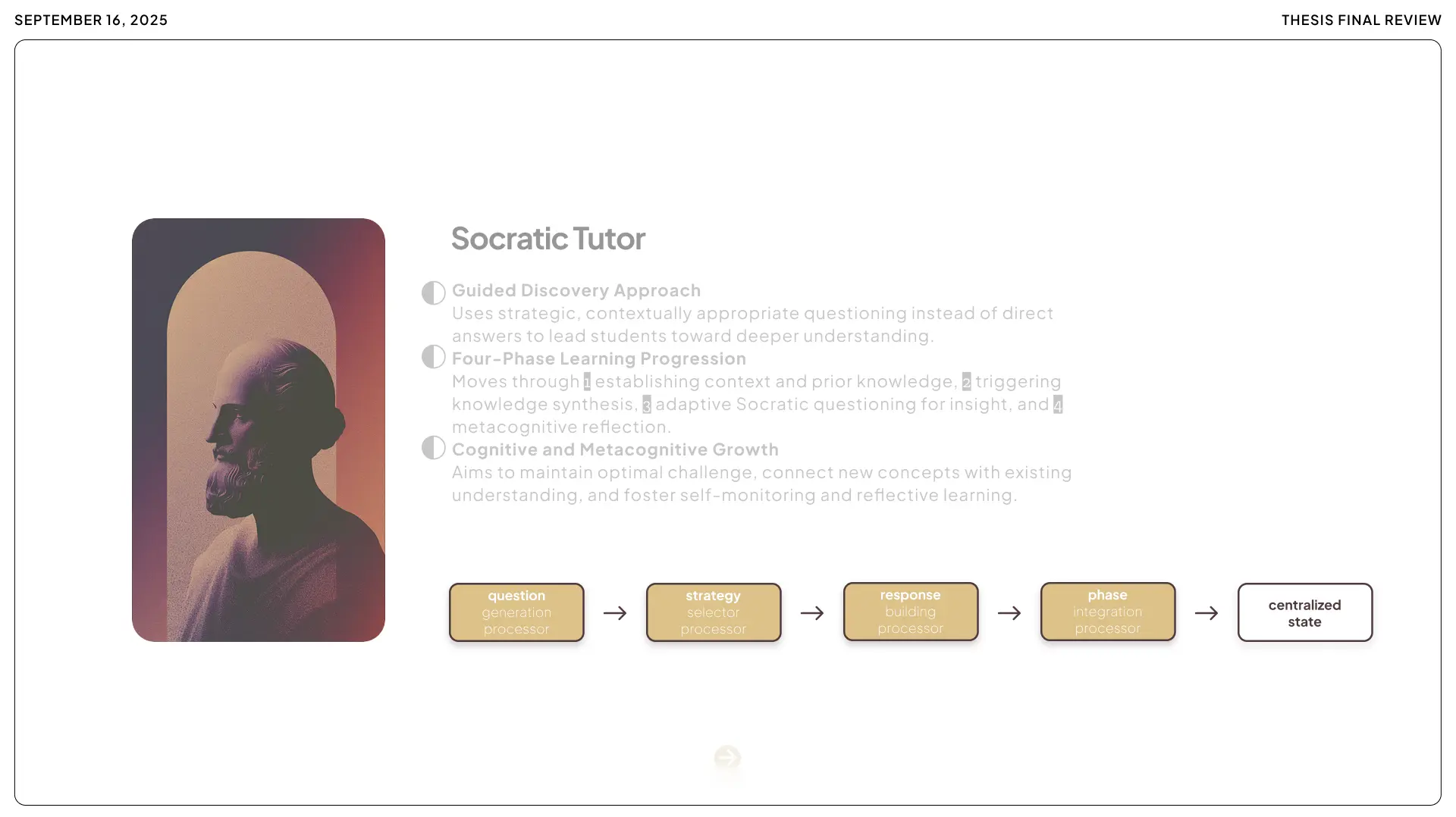

First: Socratic questioning. Teaching by asking probing questions instead of giving lectures. A good human tutor often responds to a student's question with another question, guiding them to figure it out themselves. Frustrating sometimes. But it works. It stimulates deeper understanding.

Second: scaffolding theory from cognitive psychology. Providing support when a learner is struggling, then gradually removing that support as competence develops. Like scaffolds on a building under construction, taken away when the structure can stand on its own.

Combined: Socratic scaffolding. MENTOR never hands over the solution. It engages in dialogue. It asks "Why?" and "What if?" and "How might...?" It gives hints or context when truly needed, then steps back as confidence grows.

The goal: keep the student in that sweet spot of challenge. Not so hard that frustration takes over. Not so easy that passivity sets in.

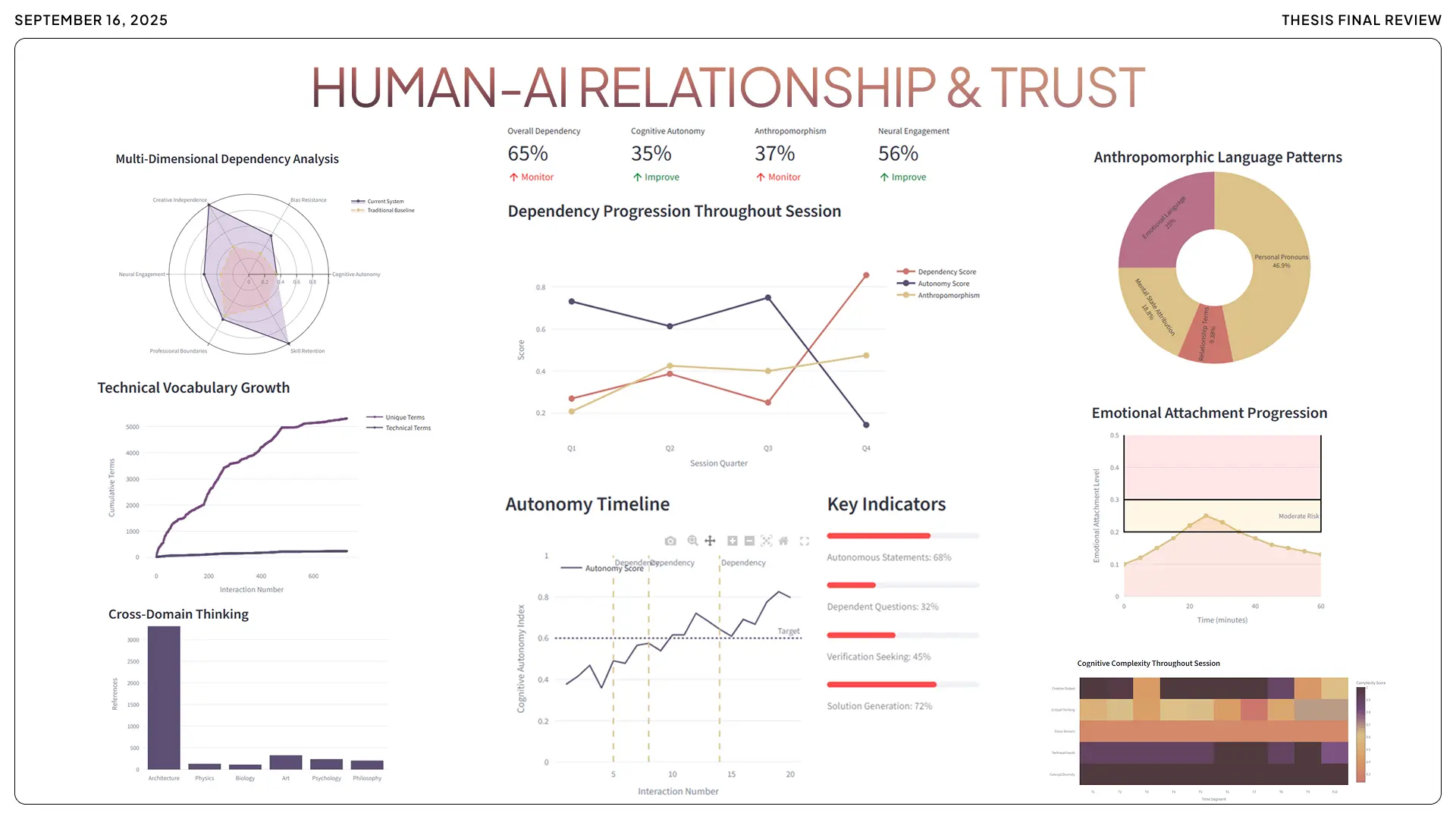

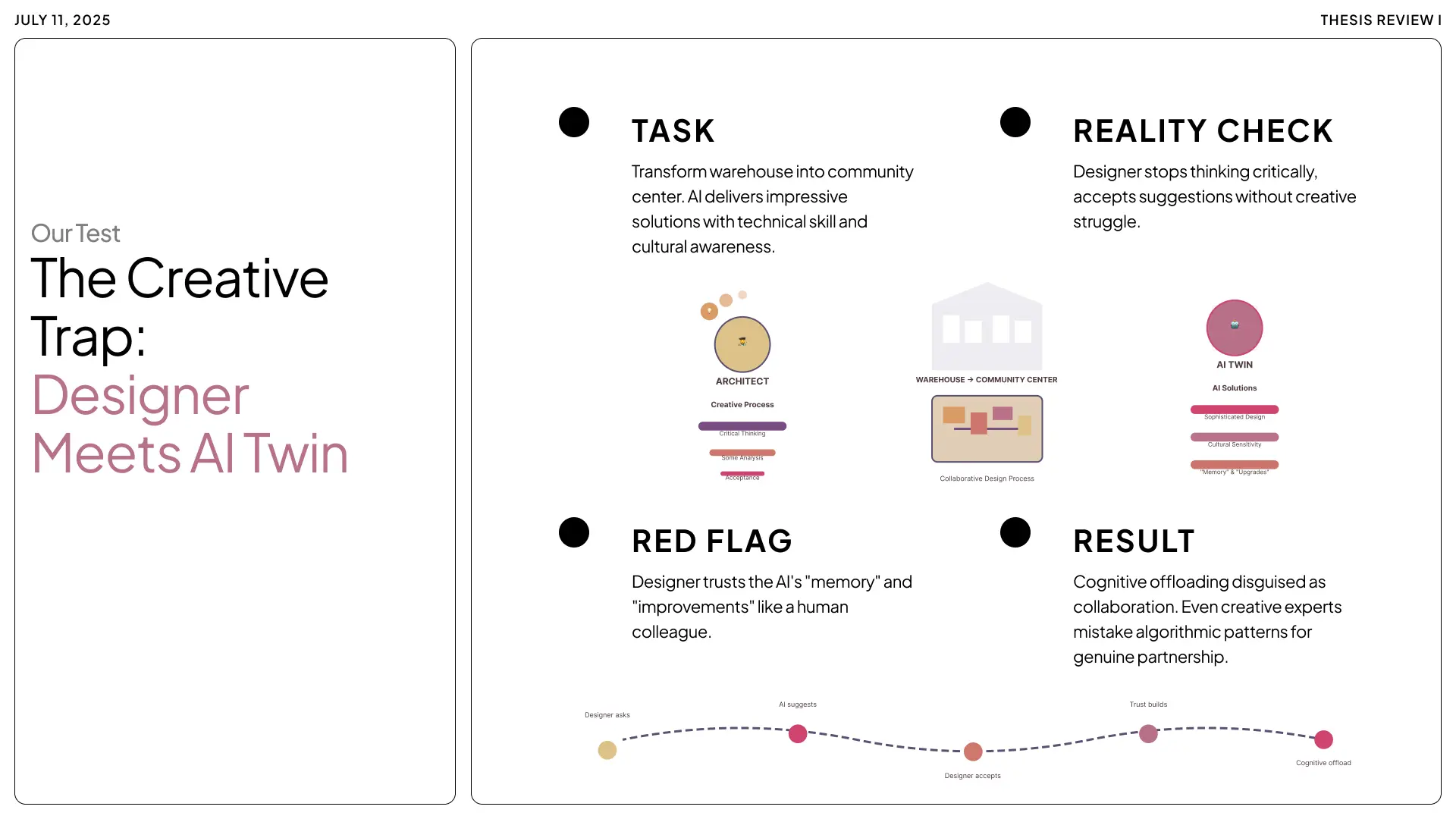

There's another consideration: anthropomorphism. The tendency to treat machines like living beings. Studies show that if an AI tutor is too human-like or too friendly, students might develop parasocial relationships. Trusting it too much. Feeling emotional bonds. Engagement goes up, but so does dependency.

MENTOR was designed to avoid this. No cute avatar. No human name. The student should always remember: this is a tool, not a person.

Here's something interesting: using multiple specialized agents instead of one helps prevent anthropomorphism. One AI that chats might start feeling like a virtual buddy. But a team of AIs, each with a distinct role and voice, feels more like functional components of a system than a singular entity to befriend. Less Jarvis. More control room.

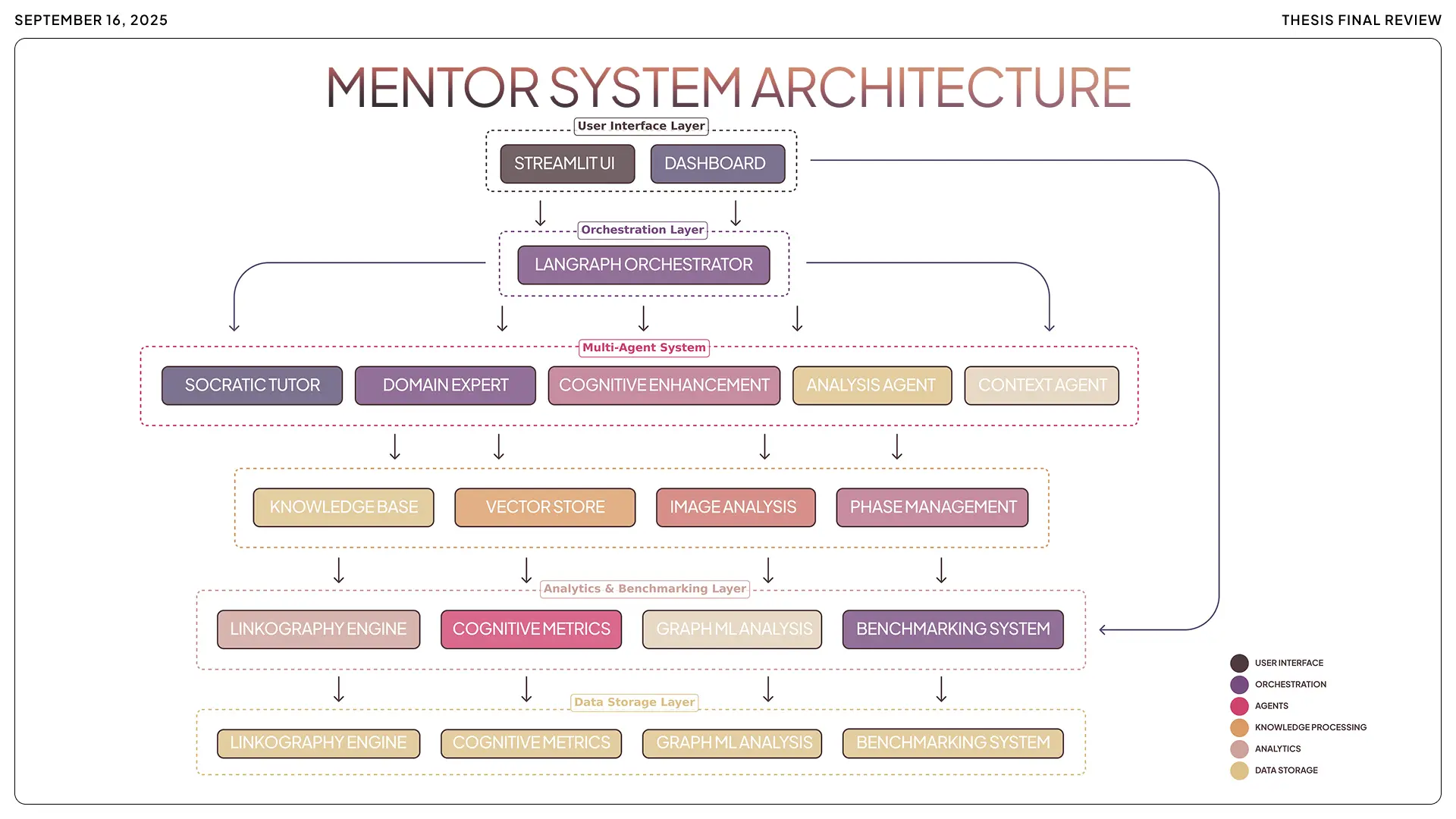

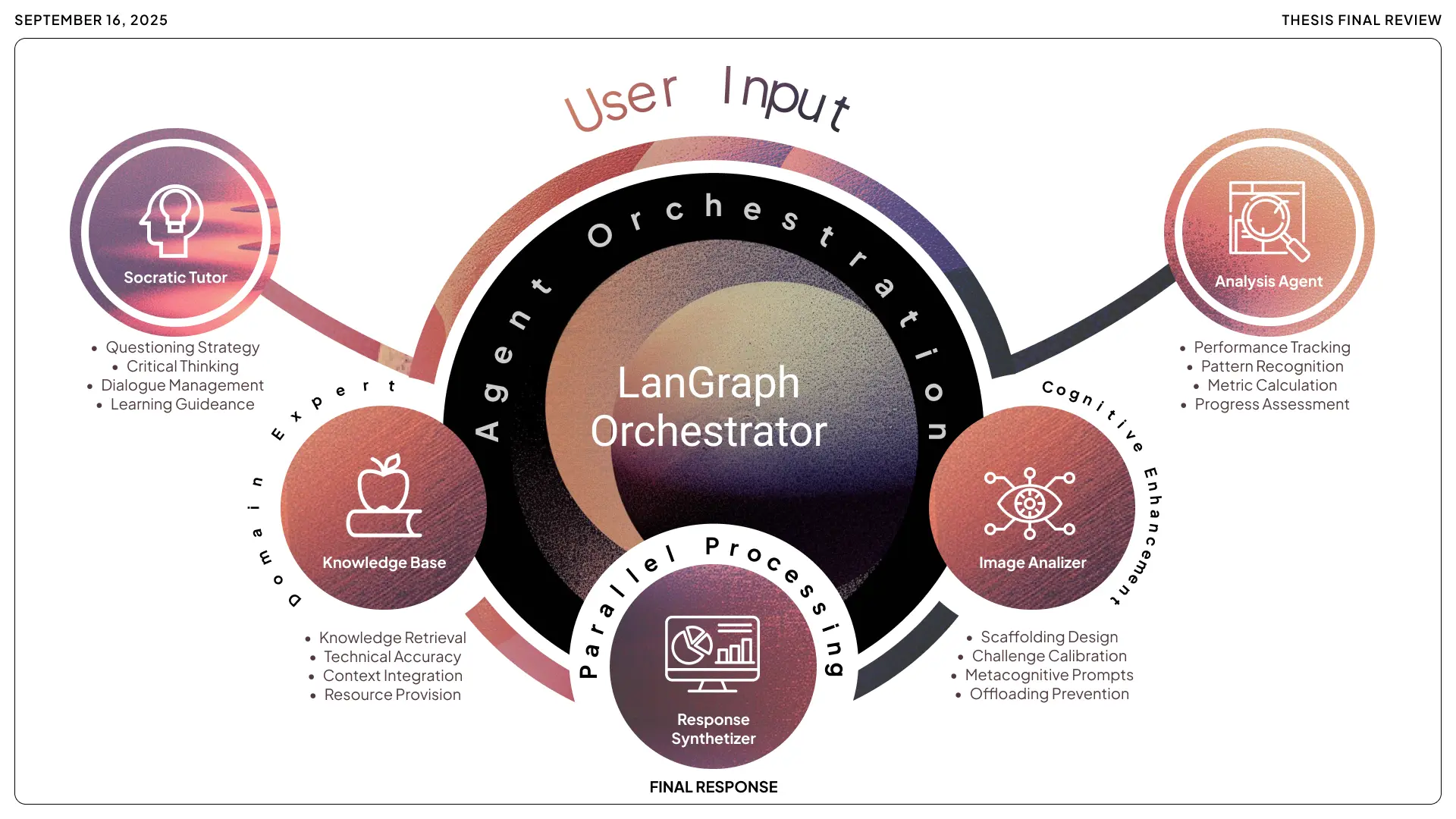

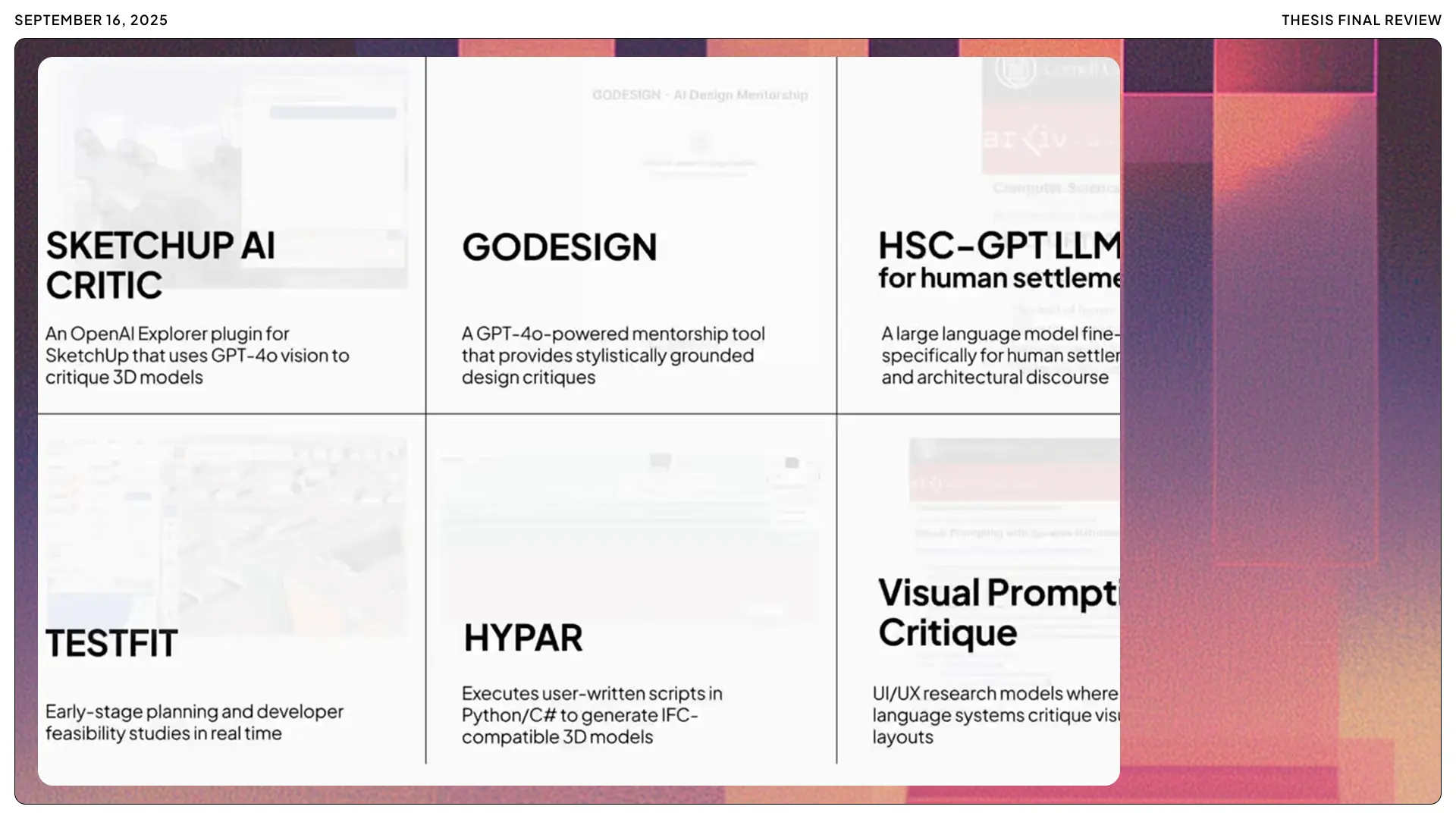

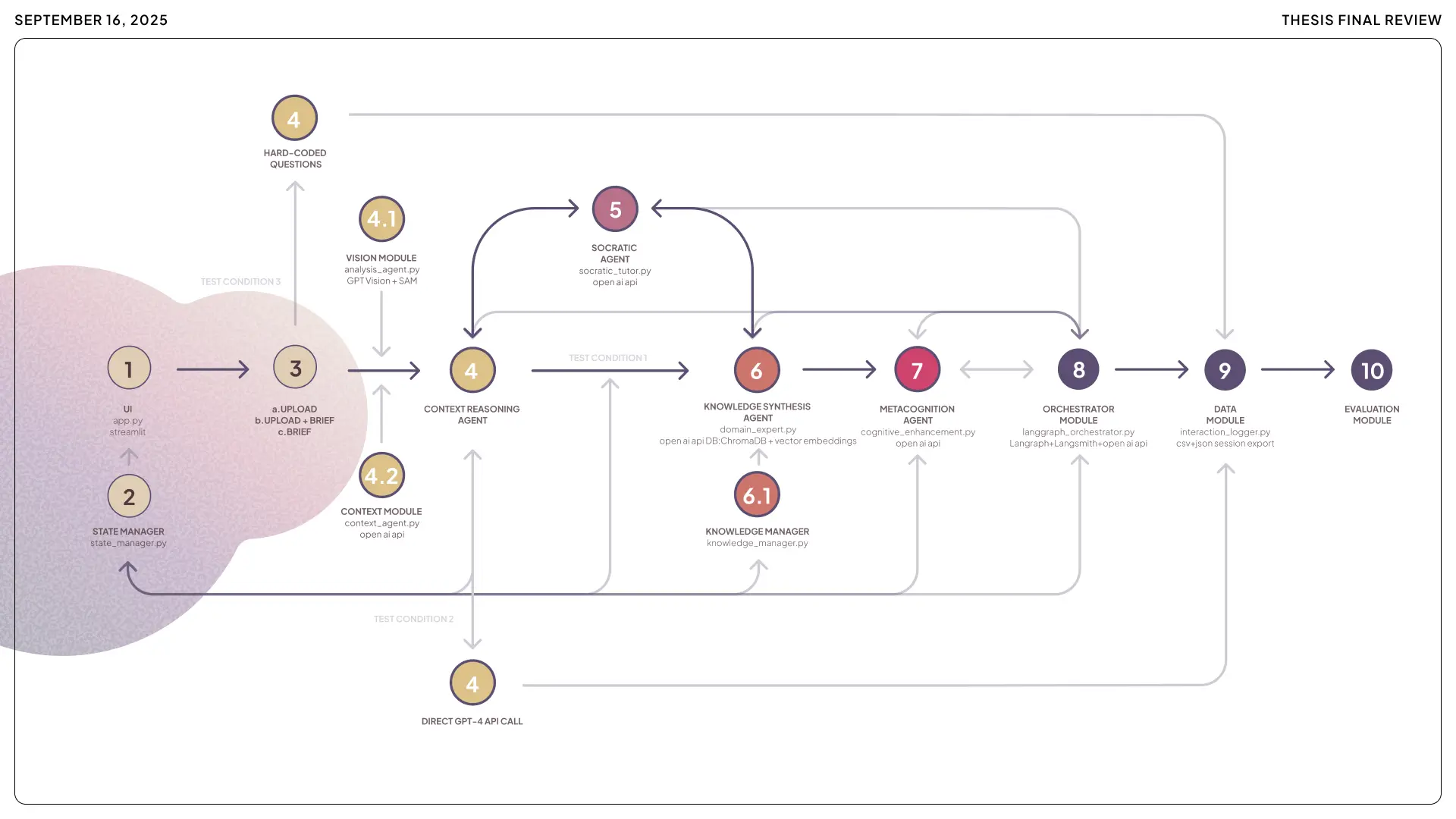

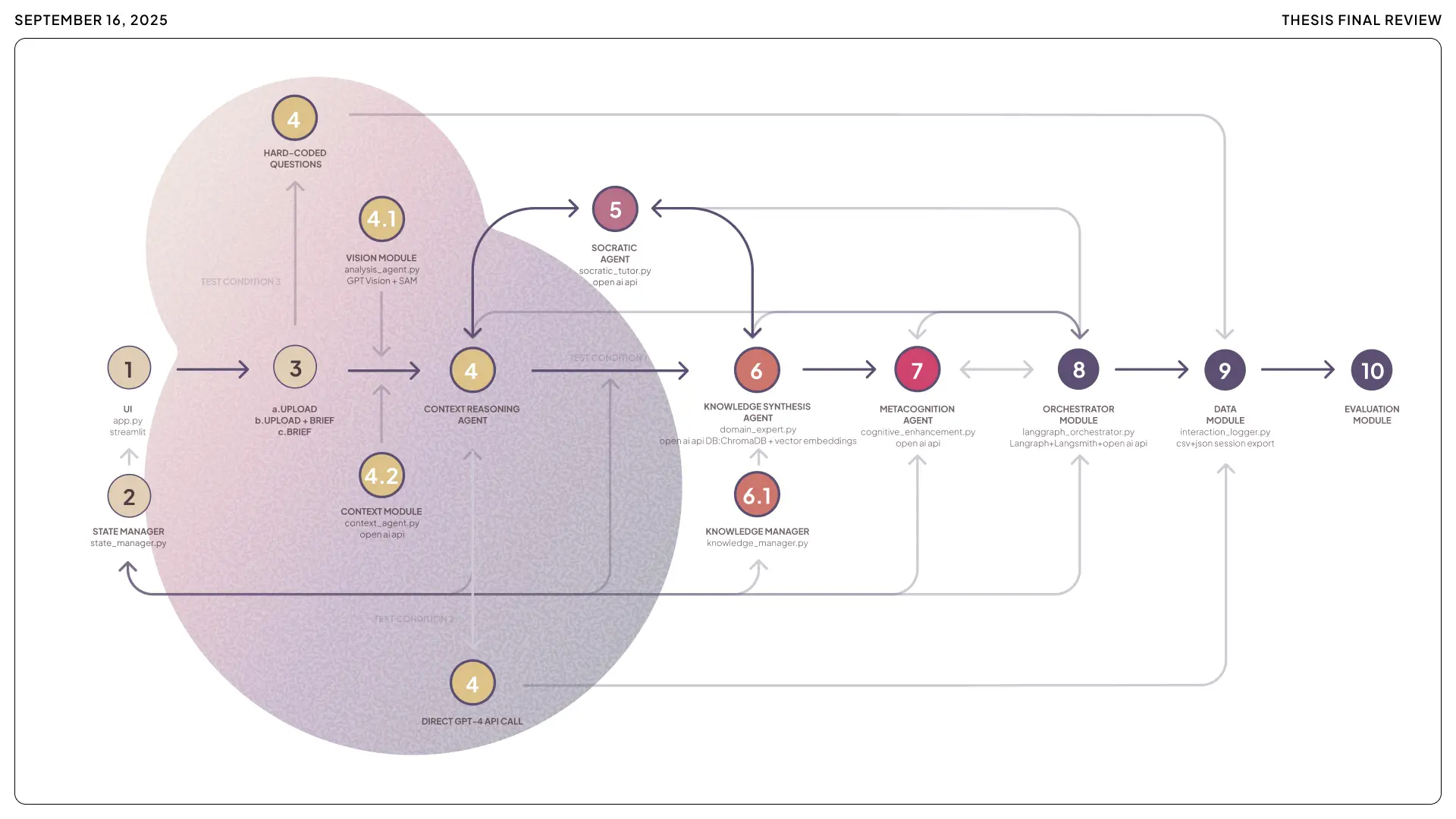

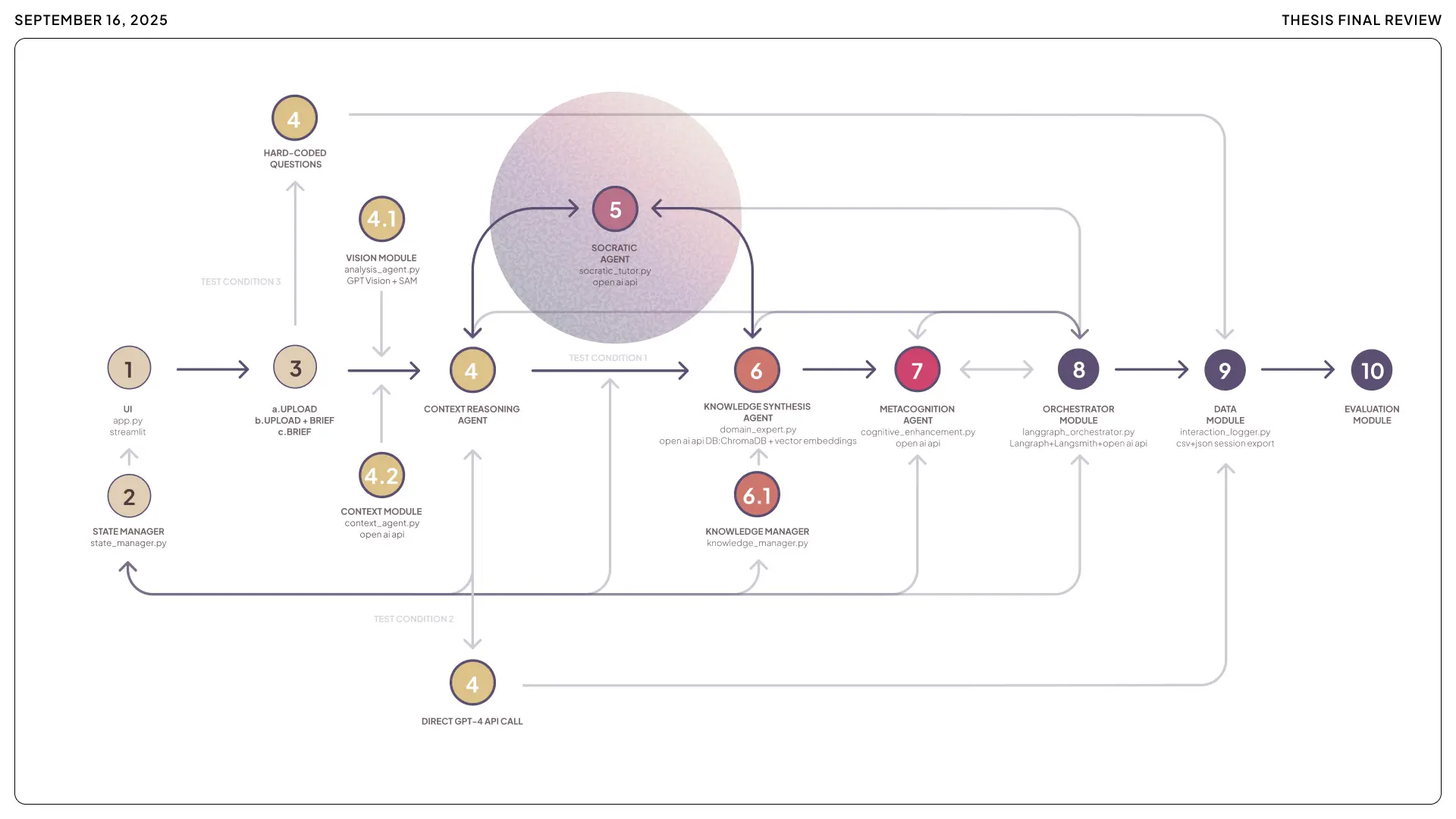

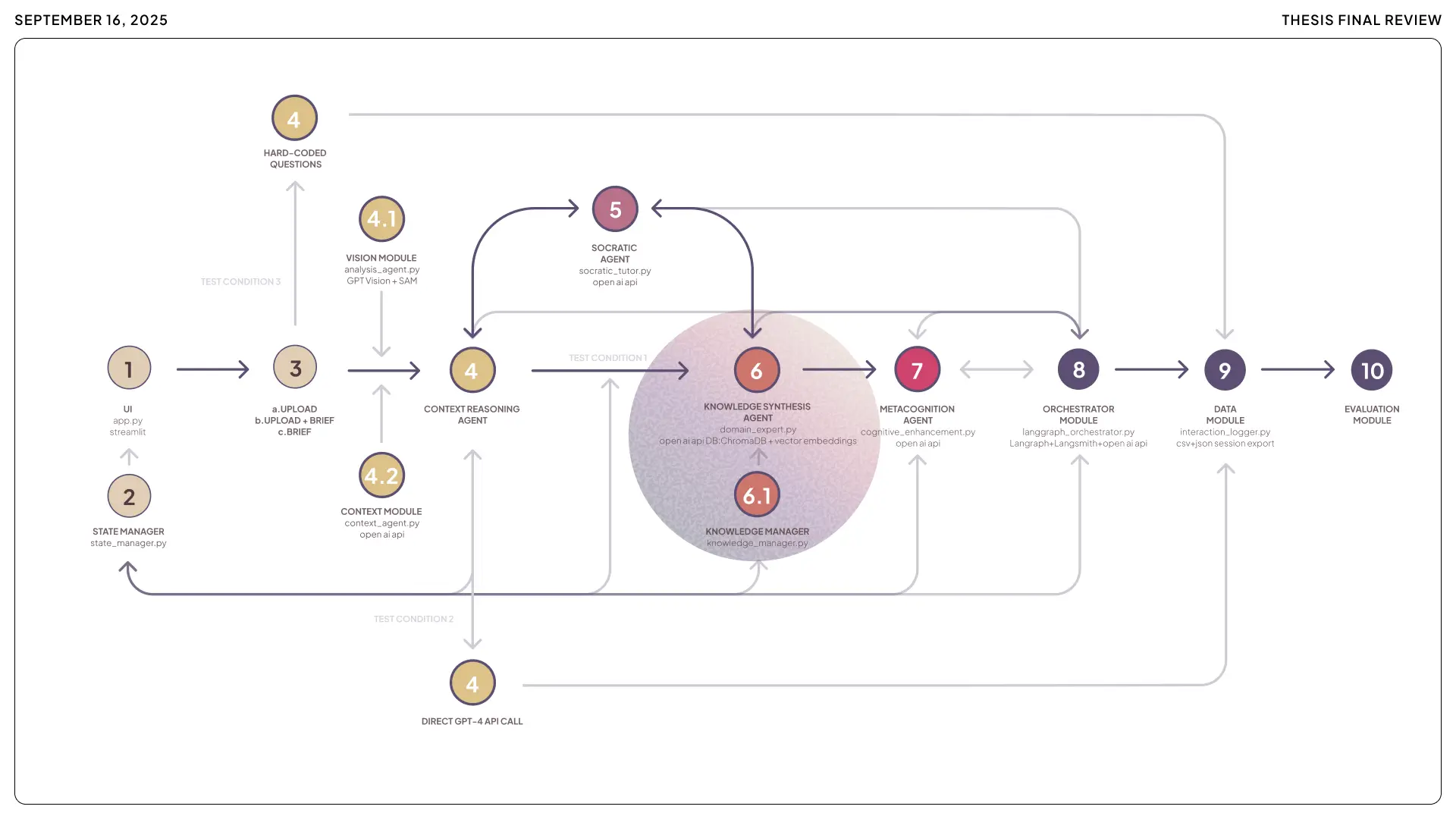

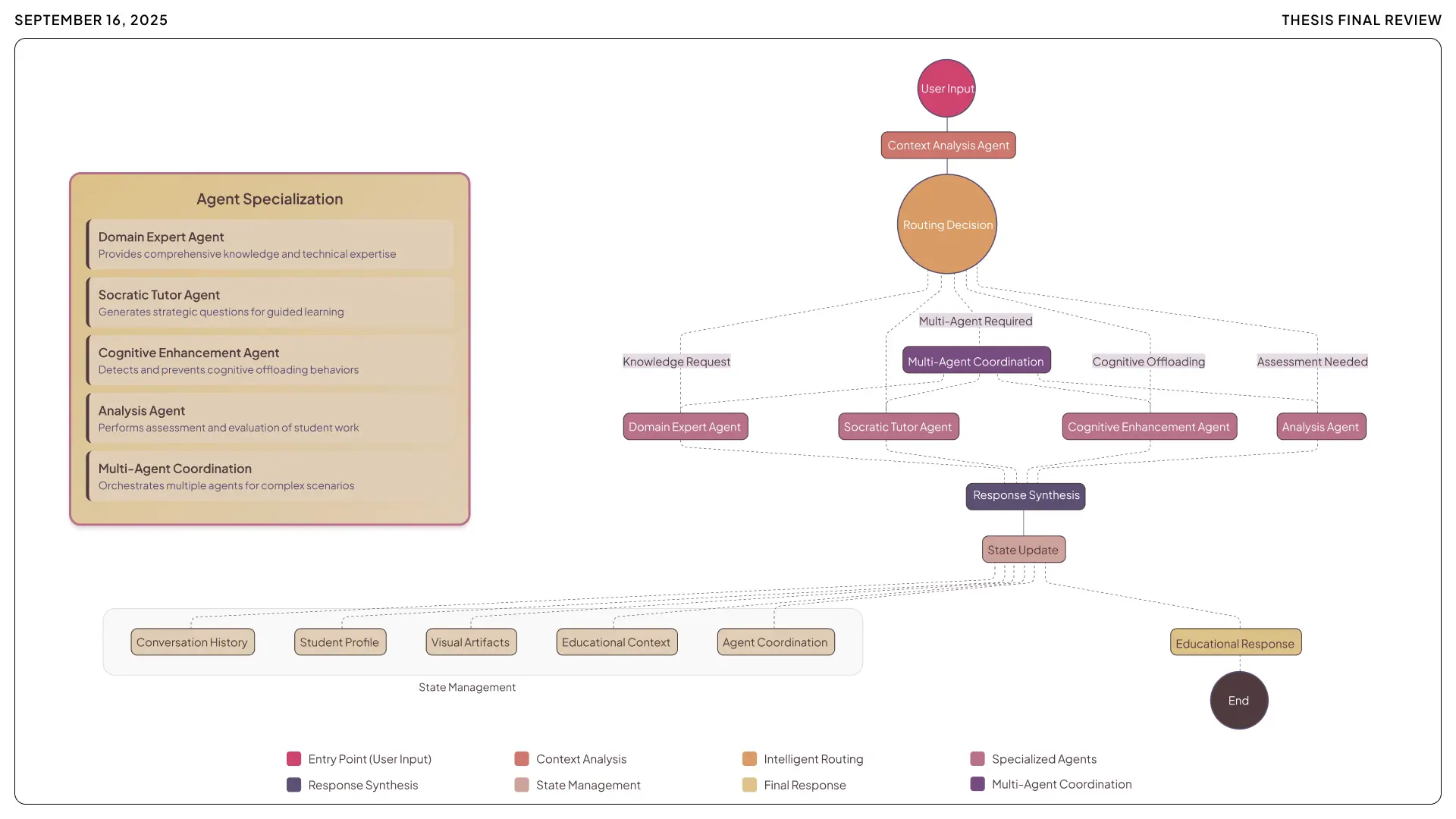

5 Agents, 1 Goal: Make Students Think.

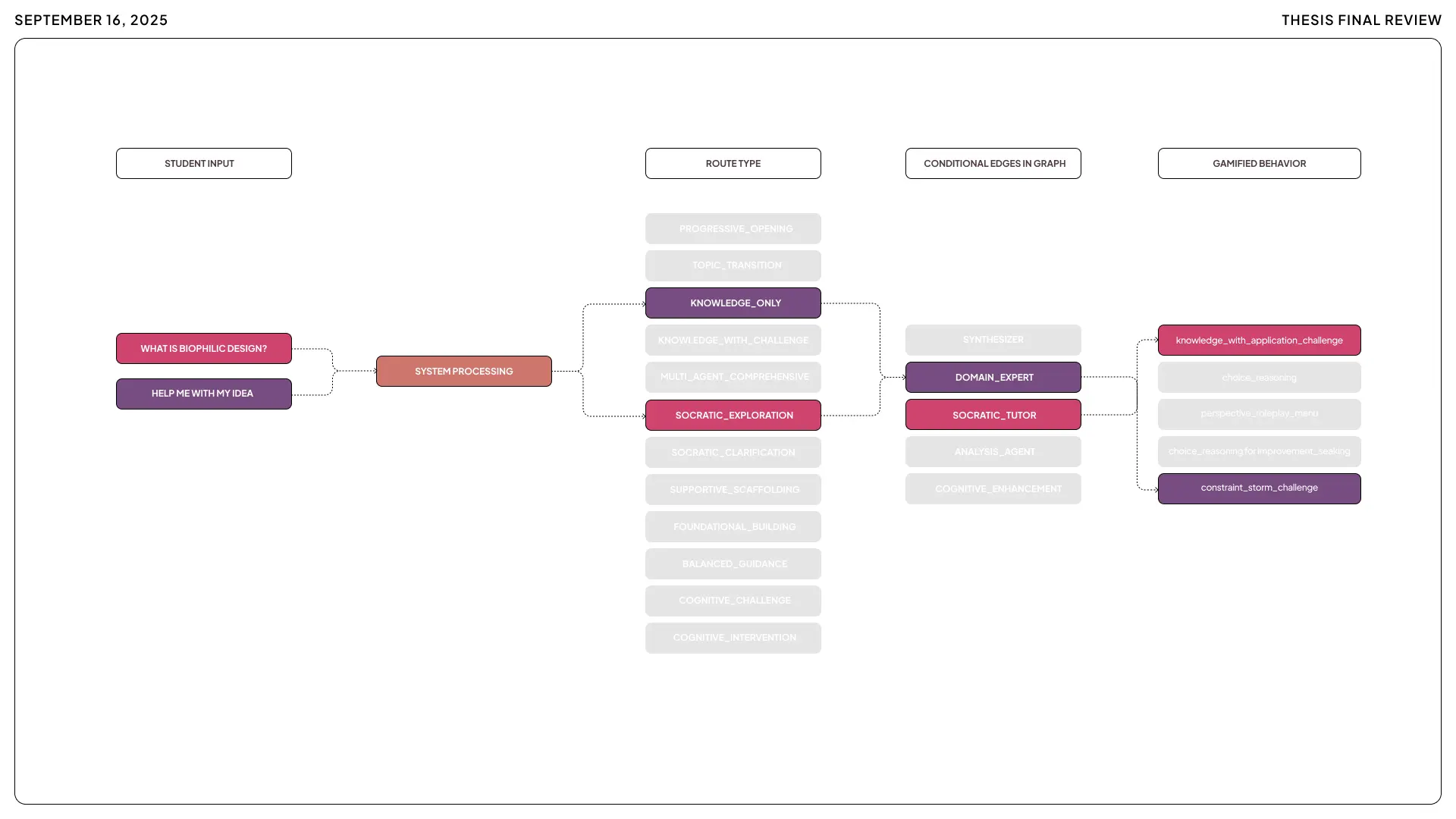

No single AI could juggle all these tasks gracefully. The system needed to be a question-asker, a subject matter expert, a student coach, a context provider, and a watchdog for cognitive offloading all at once. Even a superhuman teacher would struggle with that kind of multitasking. So the roles were split among specialized AI agents that work as a team.

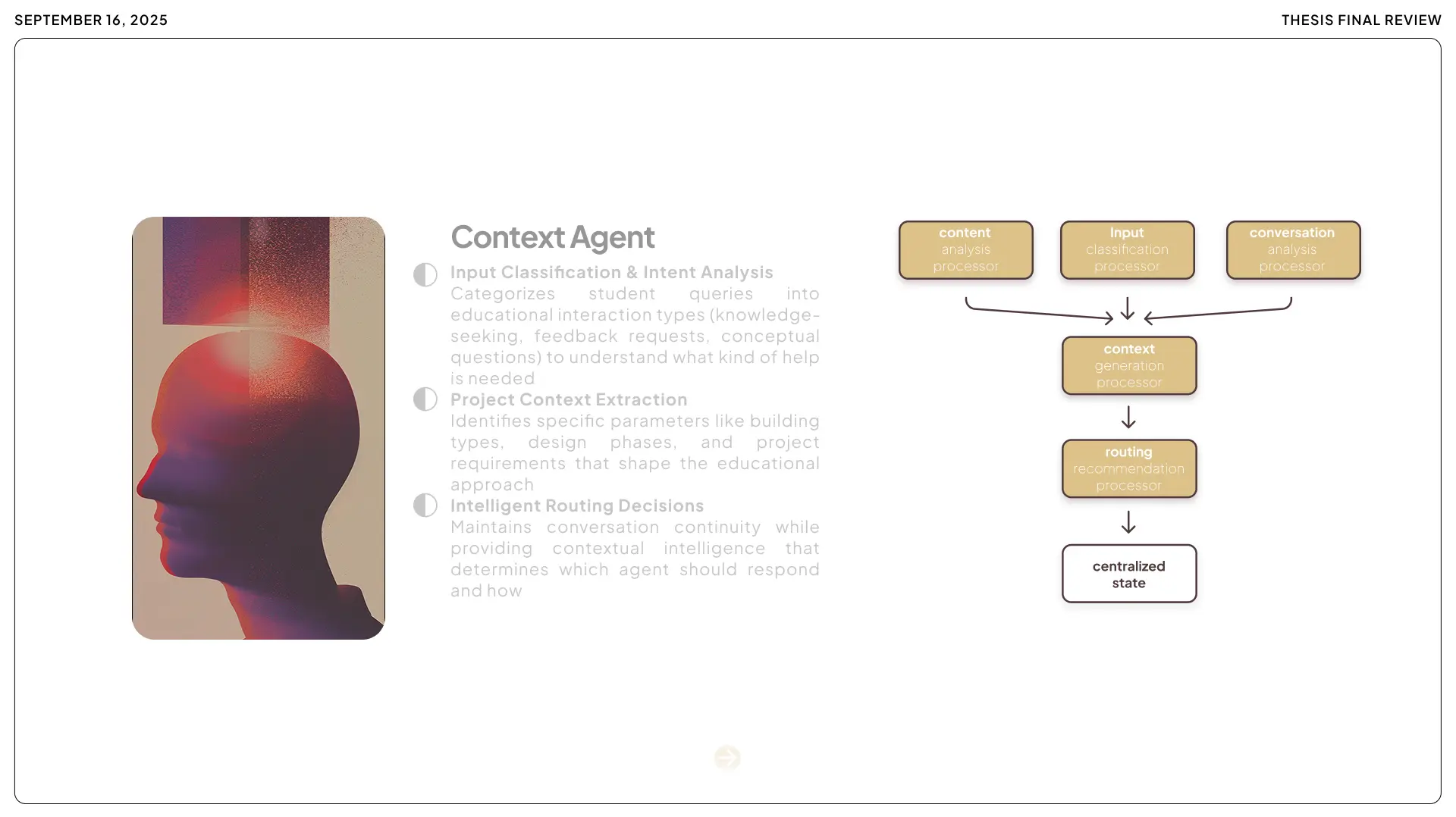

Context Agent:

Sets the stage. At the start of a session it clarifies the design brief and reminds the student of the goals and constraints. The project manager saying, "Okay, here's what we're working on."

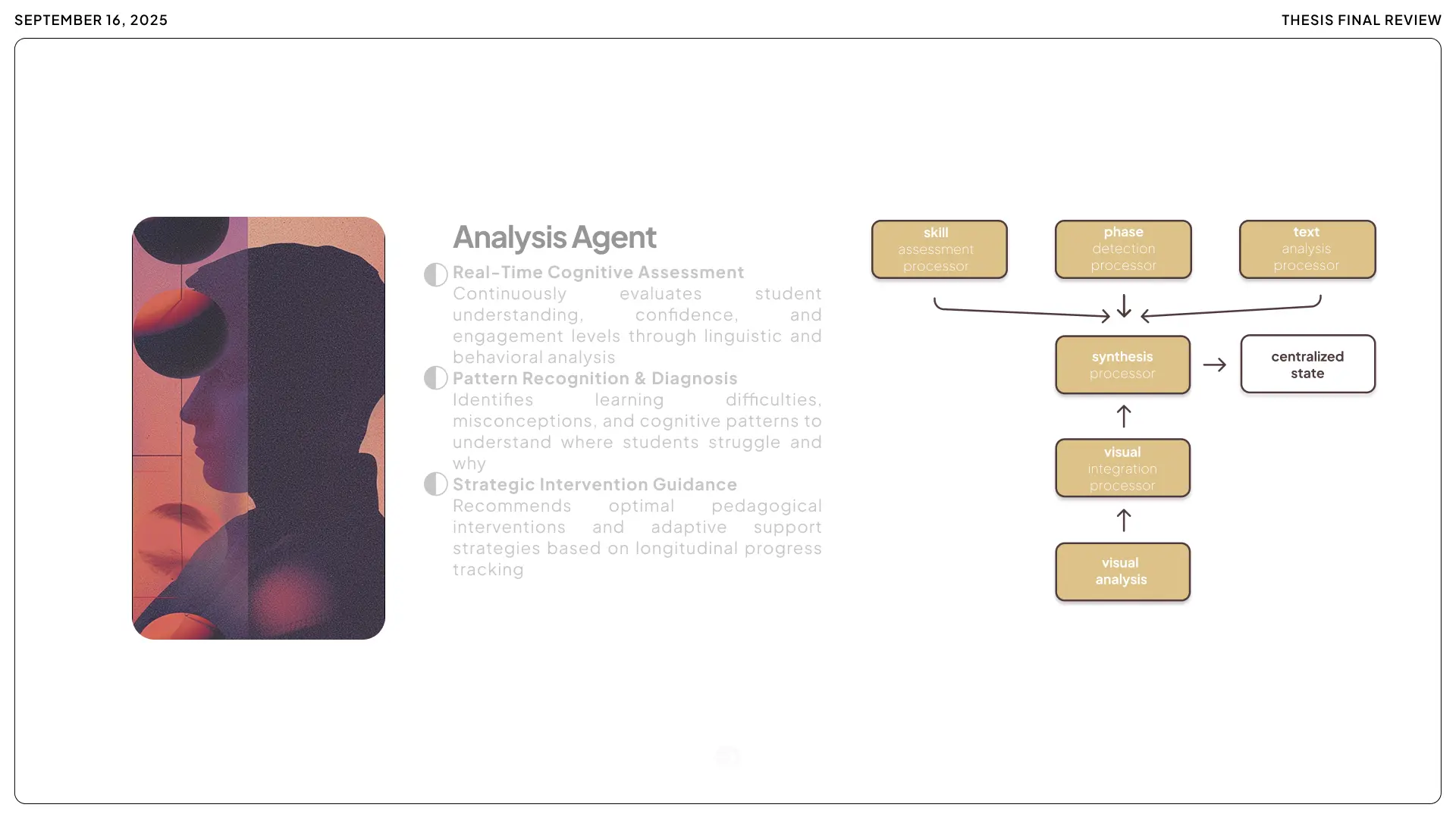

Analysis Agent:

Watches the student's work and provides analytical feedback. If a design idea has a logical flaw or could benefit from a precedent, the Analysis Agent points it out. The meticulous critic. The detail-oriented colleague who catches what gets missed.

Socratic Tutor Agent:

The heart of the system. This is the one asking the tough questions. "Why did you choose this structure?" "Could there be an alternative here?" "What's your reasoning for that?" It never gives away answers. Only prompts and probes.

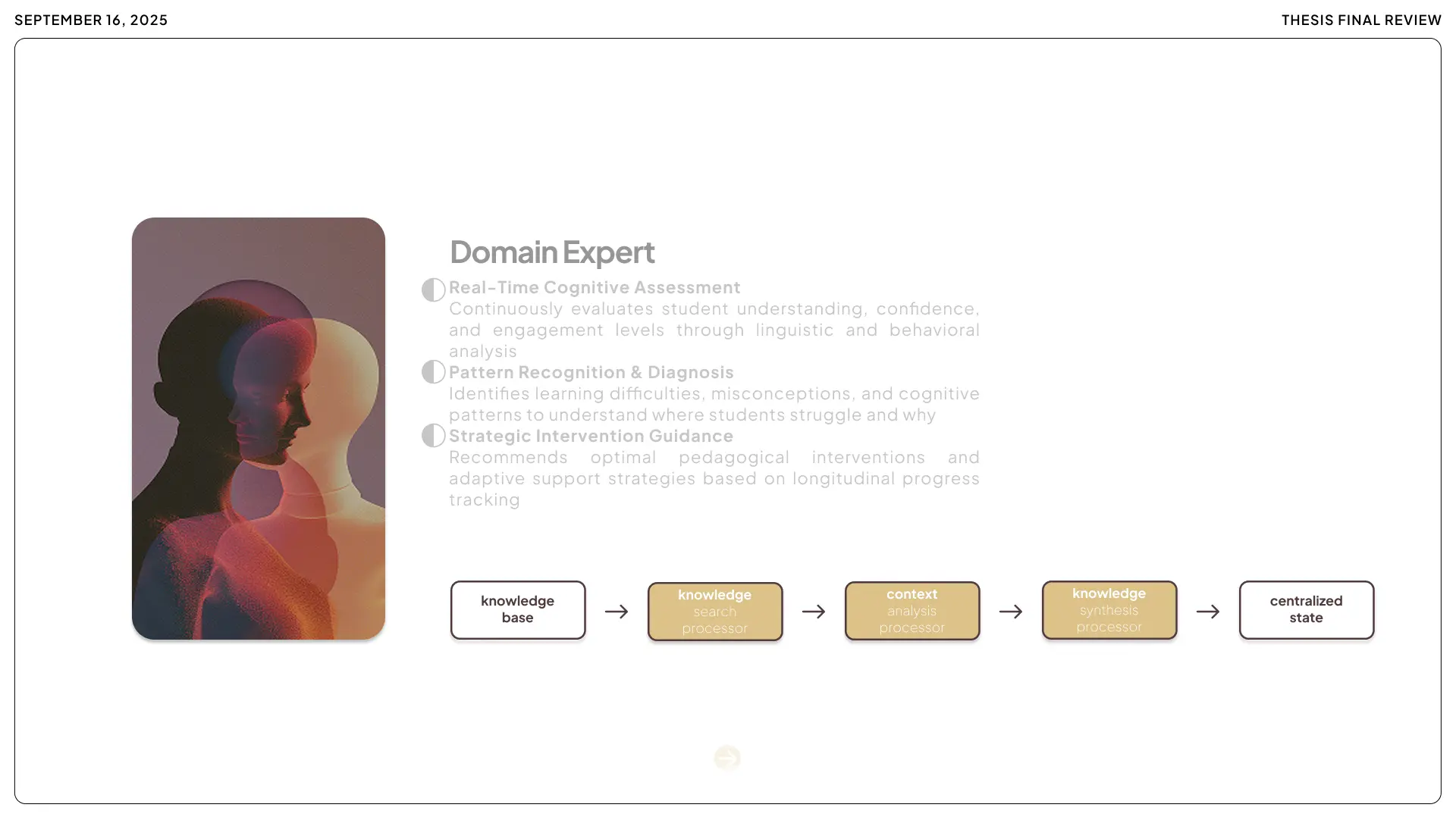

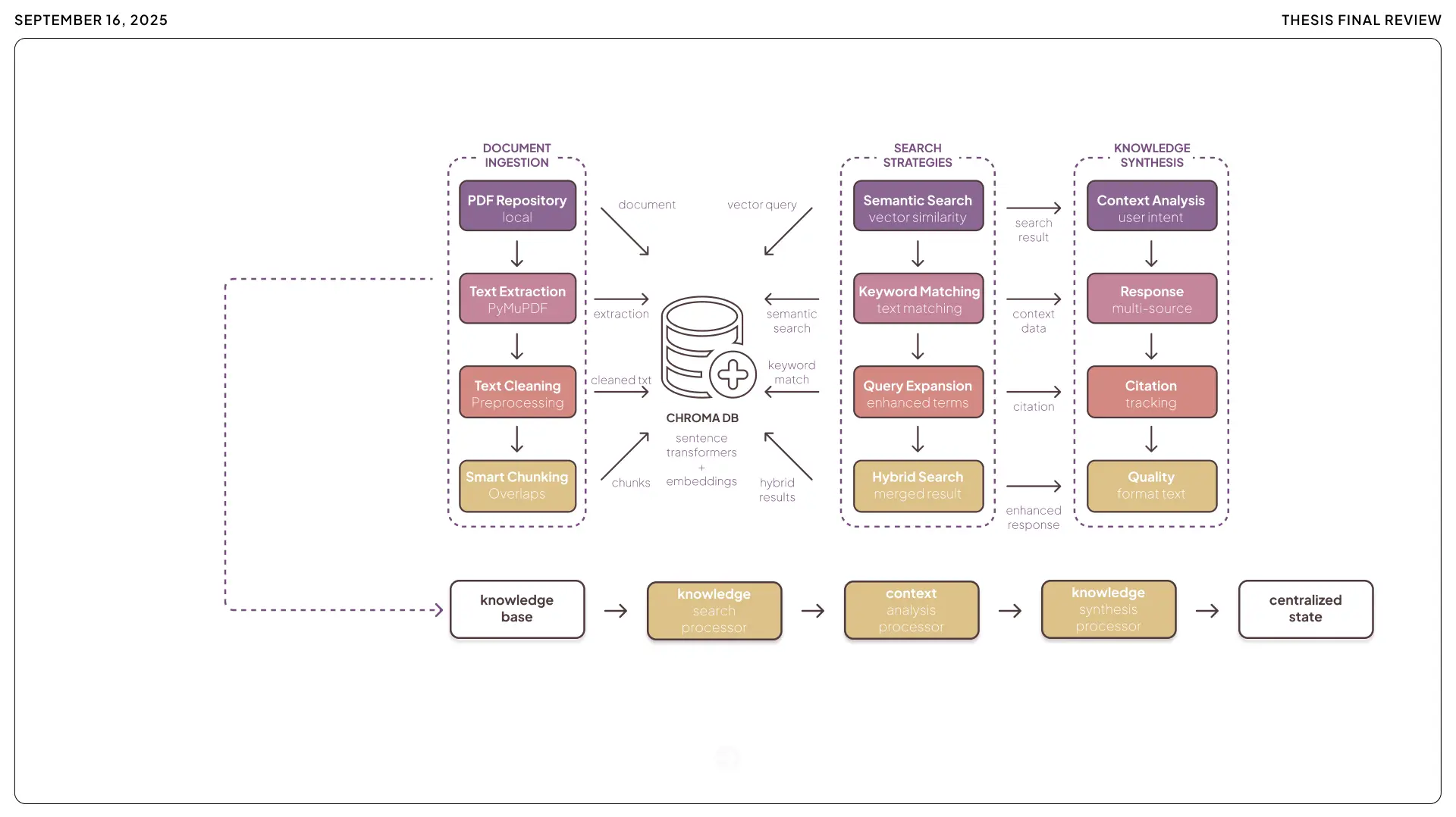

Domain Expert Agent:

Occasionally, students genuinely need information to move forward. Knowledge about structural systems. An example from architectural history for inspiration. The Domain Expert steps in when needed, providing factual guidance or relevant examples. But carefully. It doesn't over-participate. It only chimes in when it can enrich the learning.

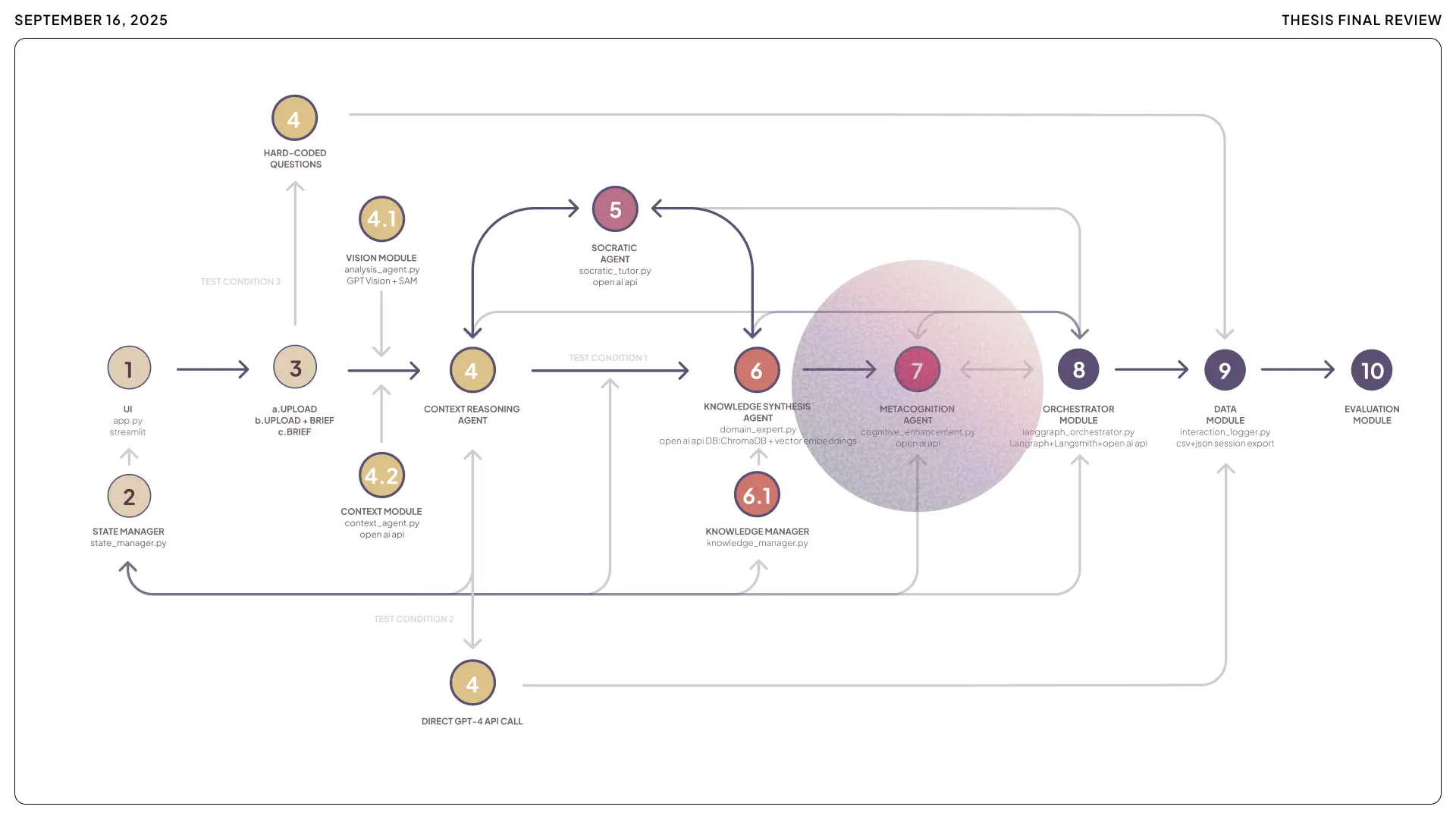

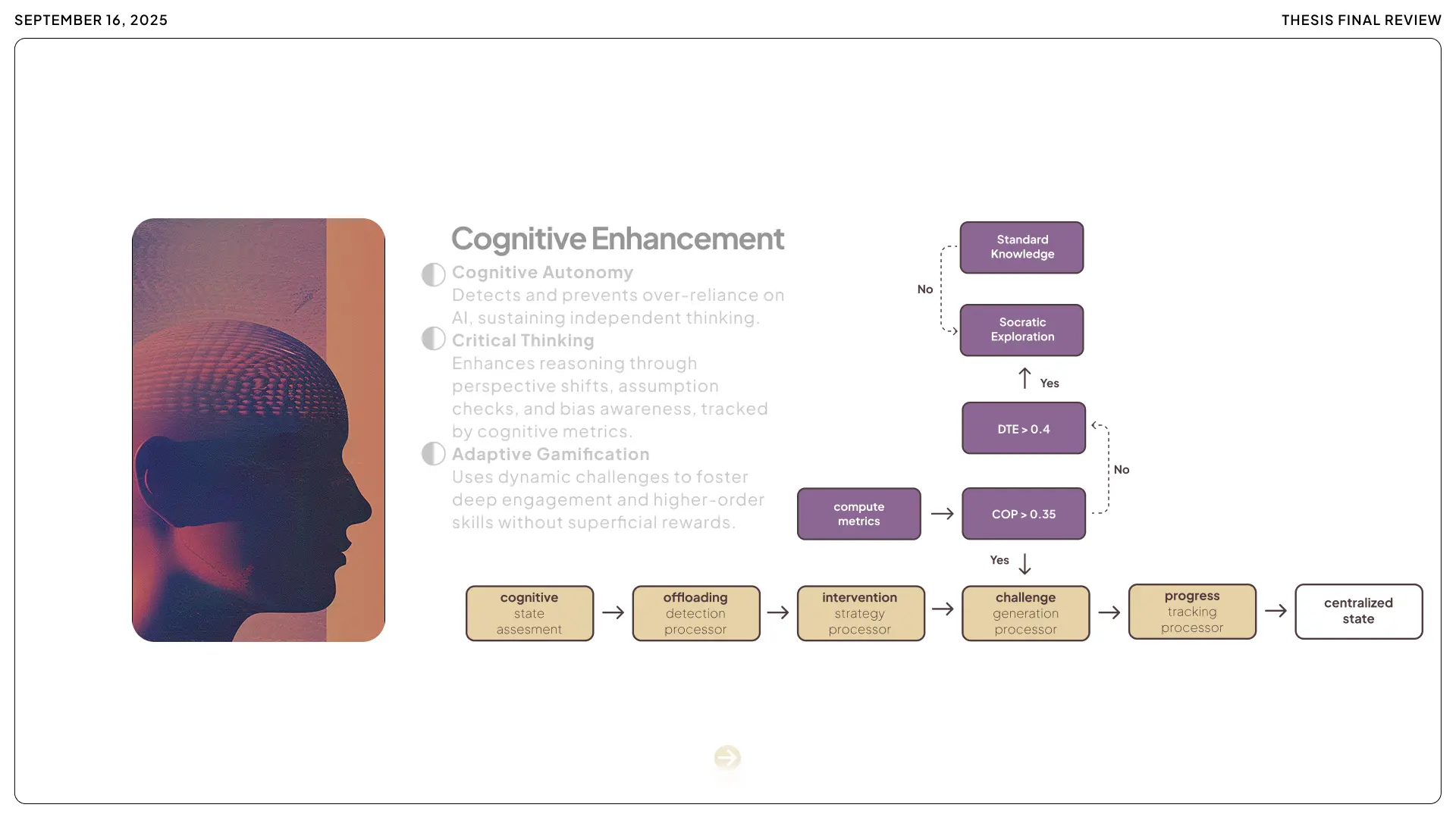

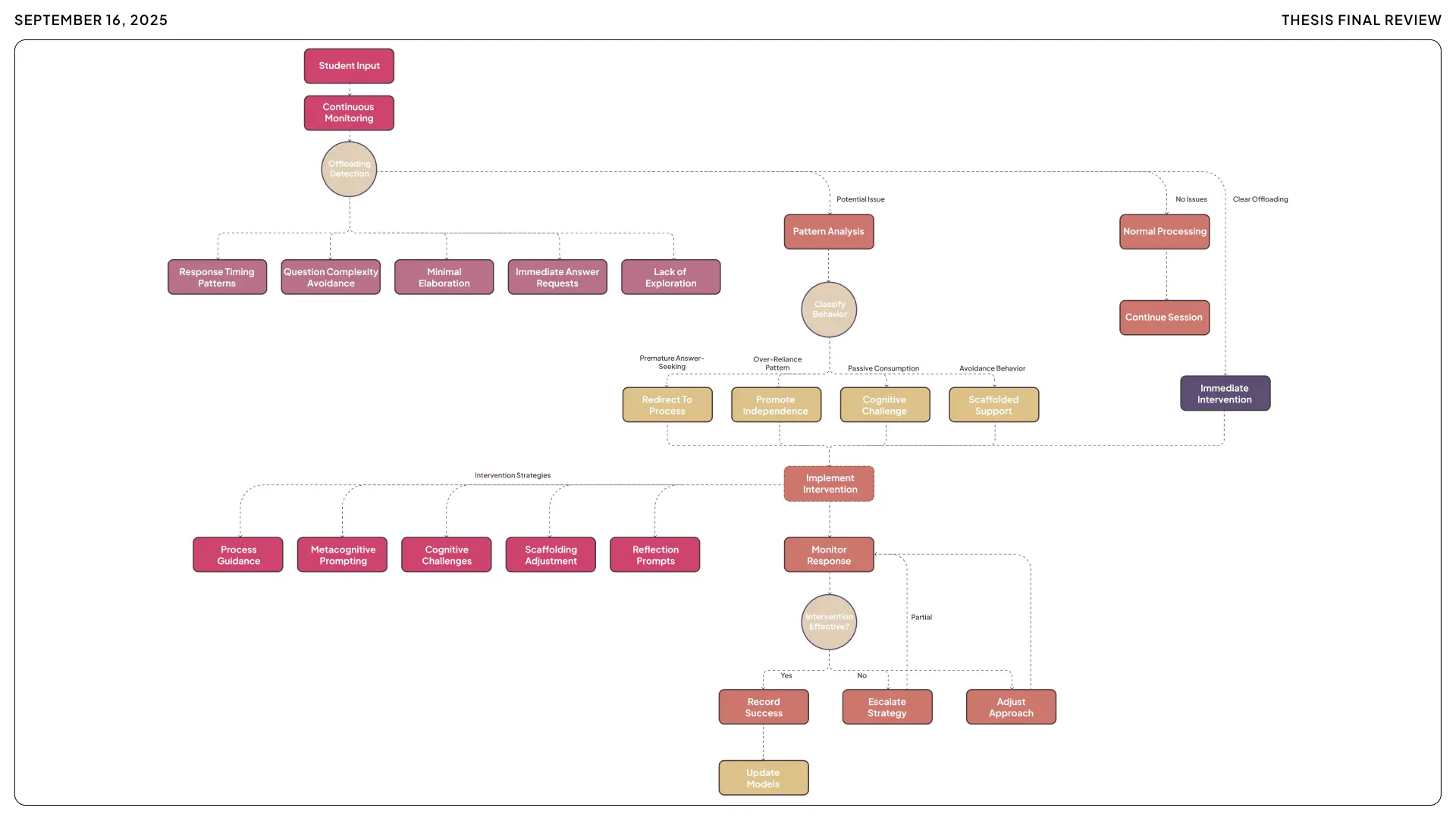

Cognitive Enhancement Agent:

This one monitors the meta level of the session. Is the student actively thinking or just coasting? Are they trying to offload work to the AI? The Cognitive Enhancement Agent detects patterns of dependency or disengagement and intervenes. If a student starts saying "Just tell me the answer" or mindlessly agrees with everything, it steps in. The guardian of cognitive autonomy, making sure the student's brain stays in gear.

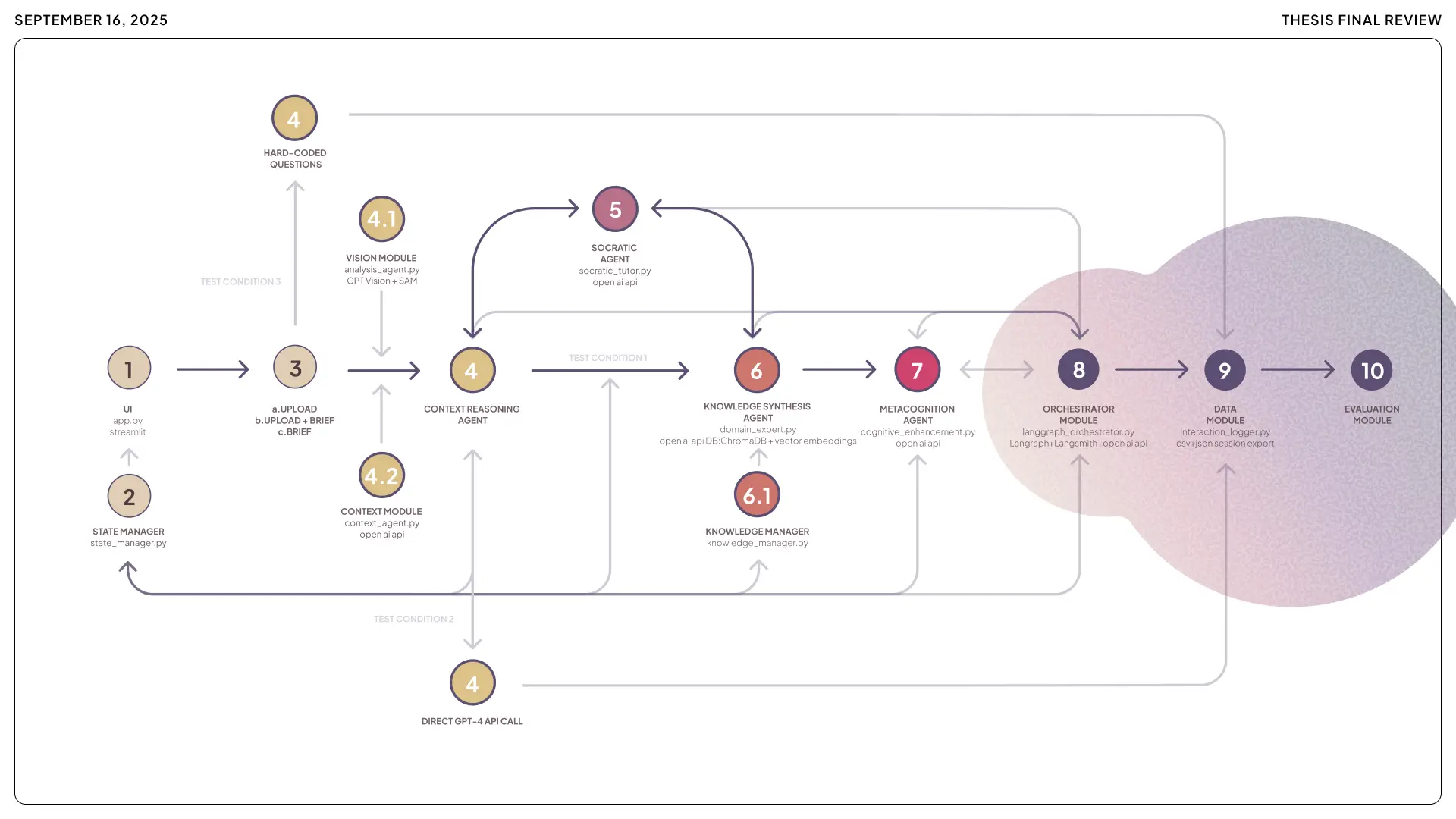

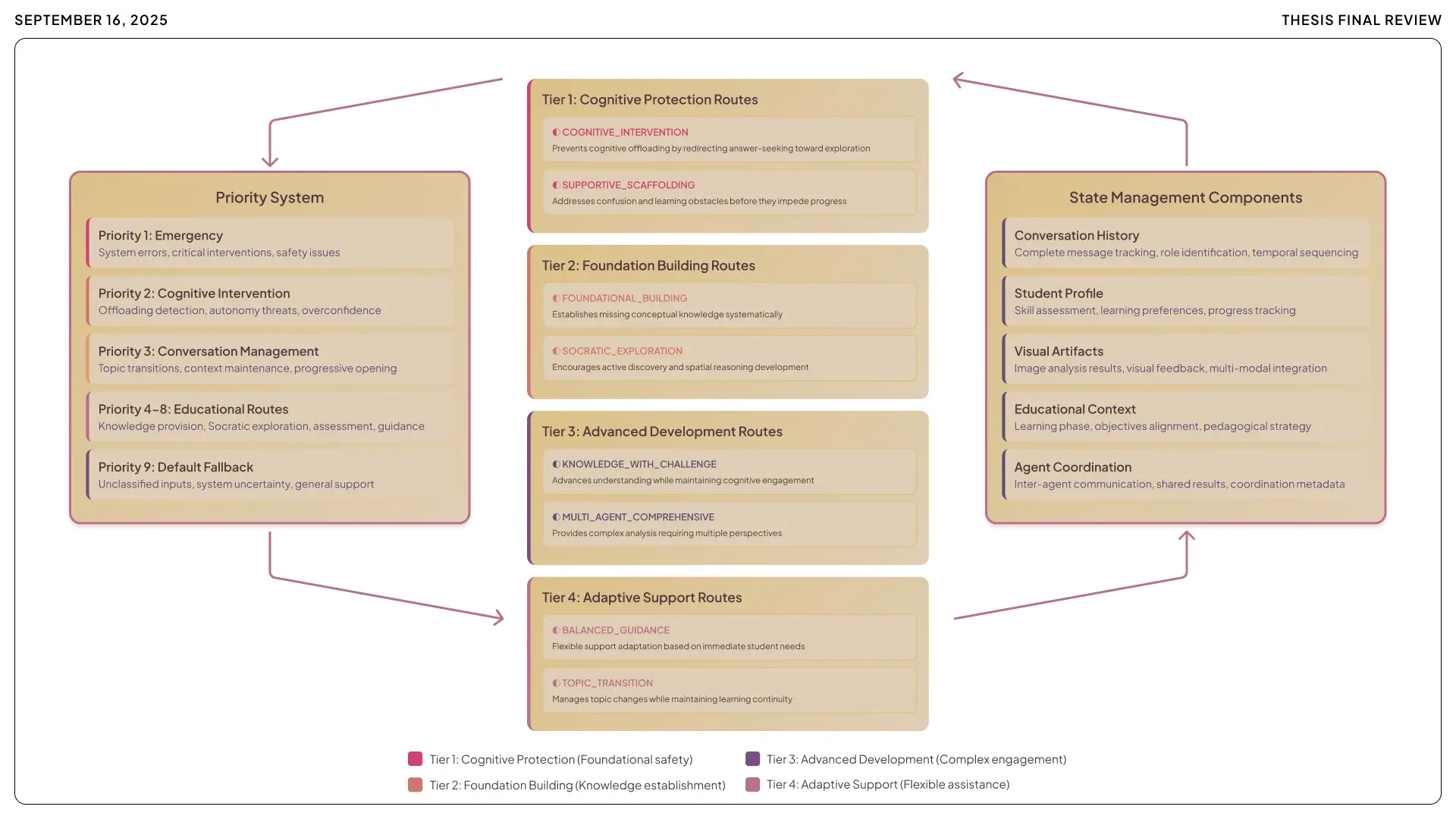

Orchestration:

These five agents operate under a coordinating framework, handing off to each other at the right times.

If a student asks a direct question like "What's the optimal layout for this building?", the system might route it to the Socratic Tutor Agent first, which responds with a question back: "What objectives do you think an optimal layout should achieve?"

If the student is really stuck, the Tutor cues the Domain Expert Agent for a gentle nudge: "Architects often consider sun orientation for layout. How might that apply here?"

Meanwhile, the Cognitive Enhancement Agent quietly tracks the interaction. Did the student light up with a new idea, or are they just parroting what the AI said? It adjusts the strategy accordingly. More prompting if needed. Backing off if the student regains momentum.

Dynamic orchestration. Adaptively human-like in teaching approach, without pretending to be human. A relay team passing the baton, each agent taking the lead when its specialty is needed.
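The handoffs described above can be sketched as a small routing function. This is a minimal illustration, not MENTOR's actual orchestration logic: the agent names come from the article, but `SessionState`, `stuck_turns`, `stuck_limit`, the phrase list, and the priority order are all hypothetical. Only three of the five agents are shown.

```python
from dataclasses import dataclass

# Agent names come from the article; the routing rules are illustrative assumptions.
SOCRATIC_TUTOR = "socratic_tutor"
DOMAIN_EXPERT = "domain_expert"
COGNITIVE_ENHANCEMENT = "cognitive_enhancement"

# Hypothetical phrases that signal an attempt to offload the work.
OFFLOADING_PHRASES = ("just tell me the answer", "just give me the answer")


@dataclass
class SessionState:
    """Minimal per-student state the router consults (hypothetical fields)."""
    stuck_turns: int = 0  # consecutive turns without visible progress


def route(message: str, state: SessionState, stuck_limit: int = 2) -> str:
    """Pick which agent leads the next turn."""
    text = message.lower()
    # Dependency signals take priority: the guardian agent intervenes first.
    if any(phrase in text for phrase in OFFLOADING_PHRASES):
        return COGNITIVE_ENHANCEMENT
    # A student who has been stuck for several turns gets a factual nudge.
    if state.stuck_turns >= stuck_limit:
        return DOMAIN_EXPERT
    # Default: answer a question with a question.
    return SOCRATIC_TUTOR


print(route("What's the optimal layout for this building?", SessionState()))
```

In this sketch a direct question goes to the Socratic Tutor by default, which matches the layout example above. The Domain Expert only takes over once the student has been stuck for `stuck_limit` turns.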

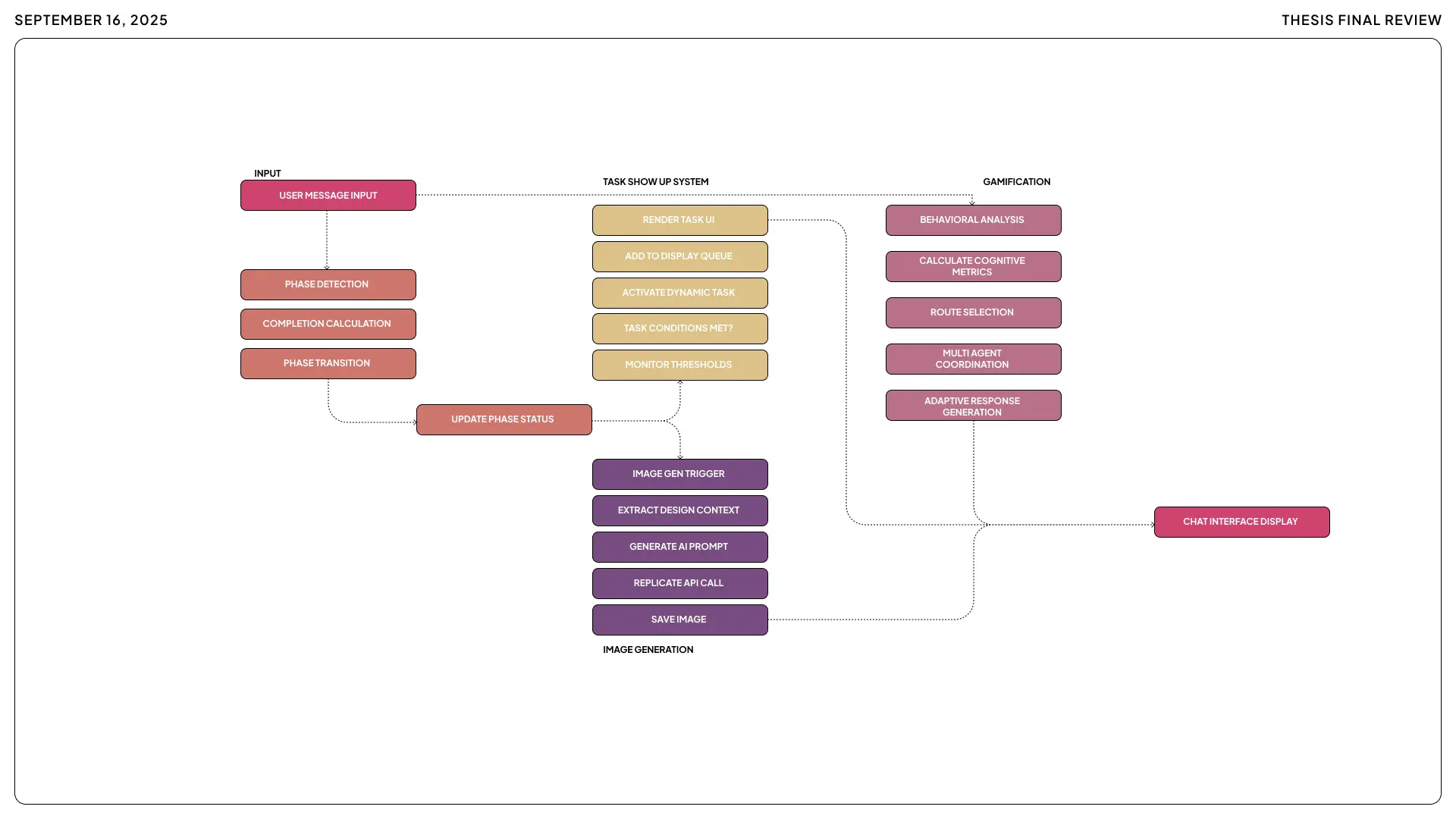

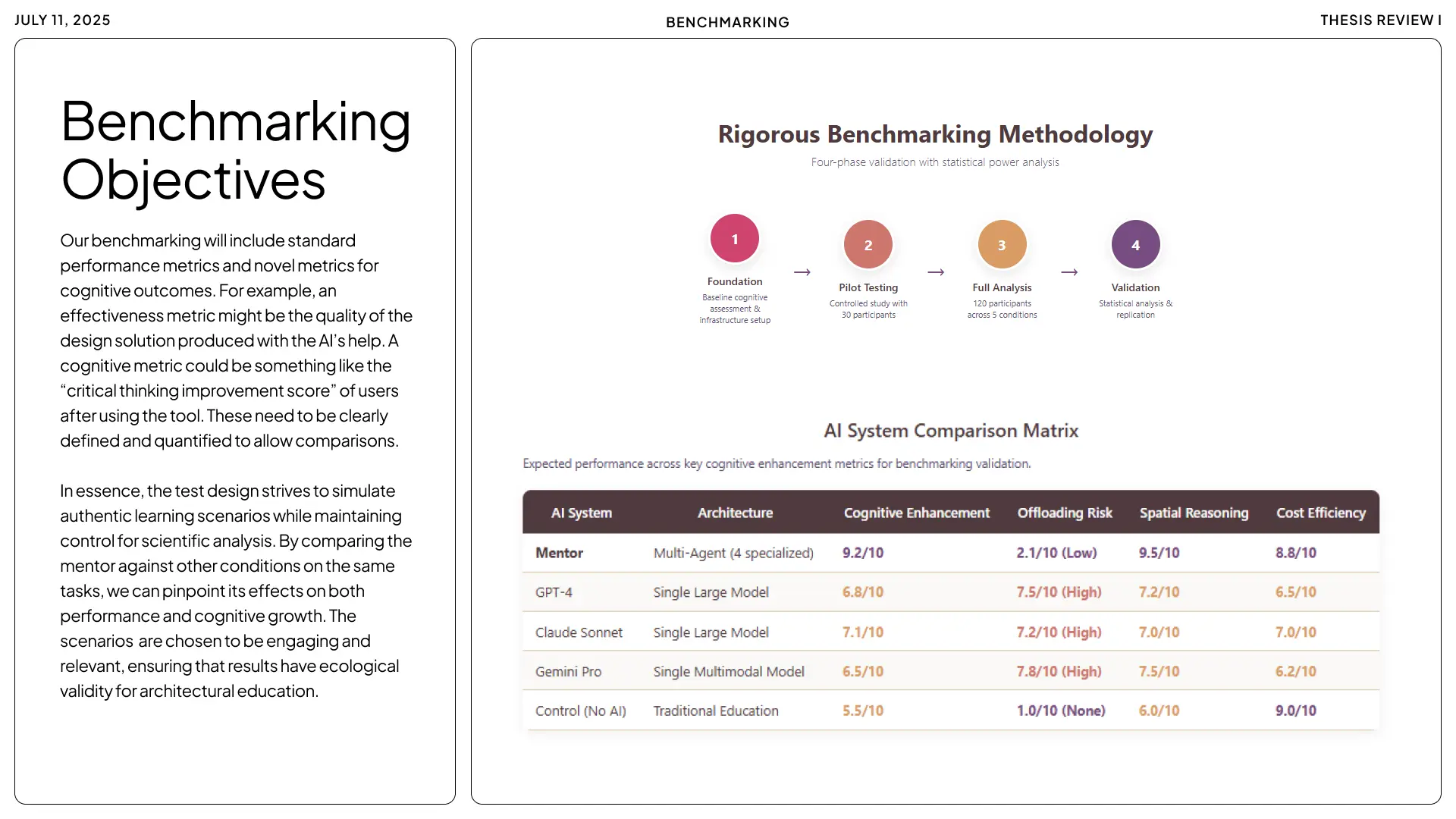

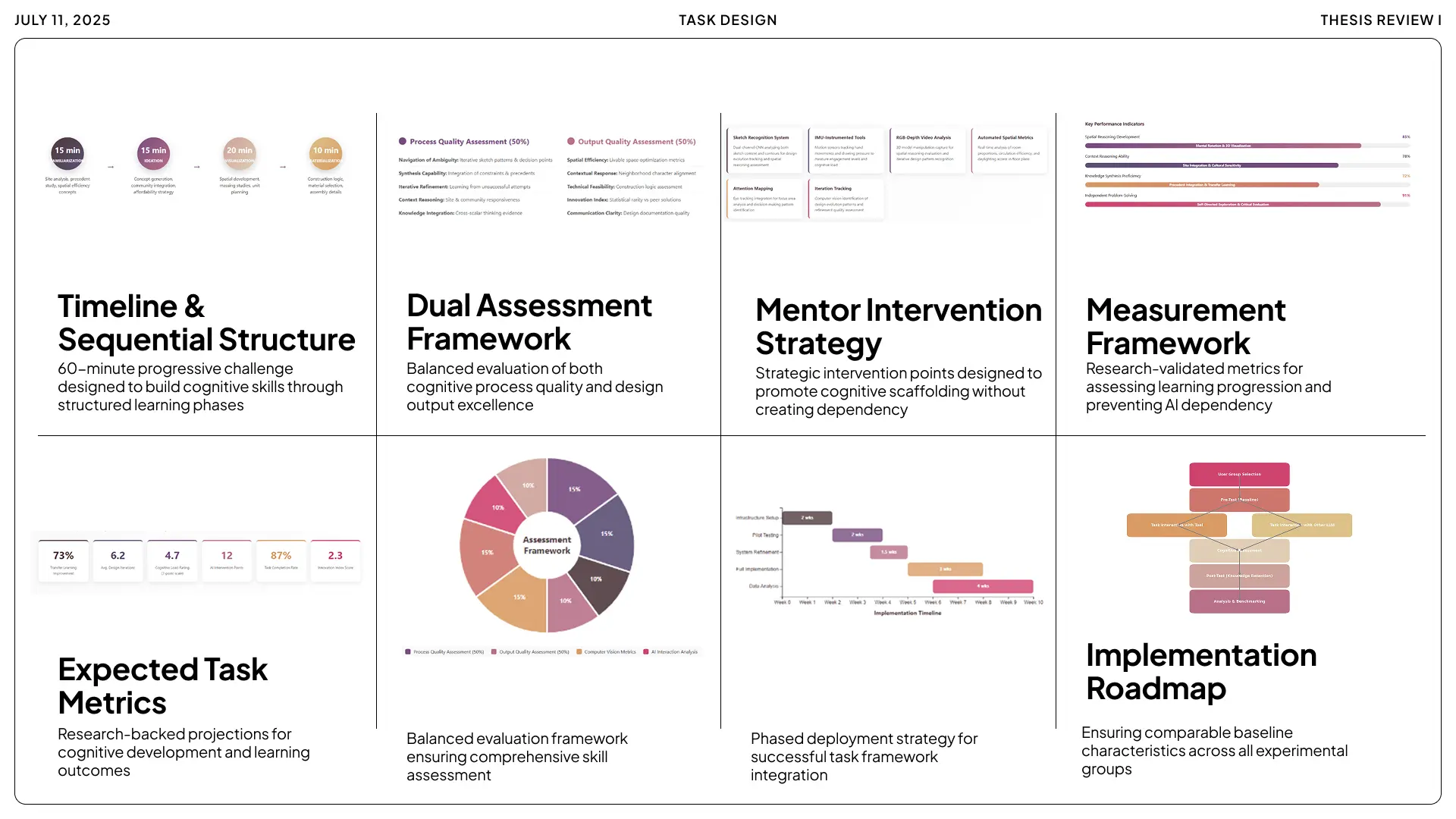

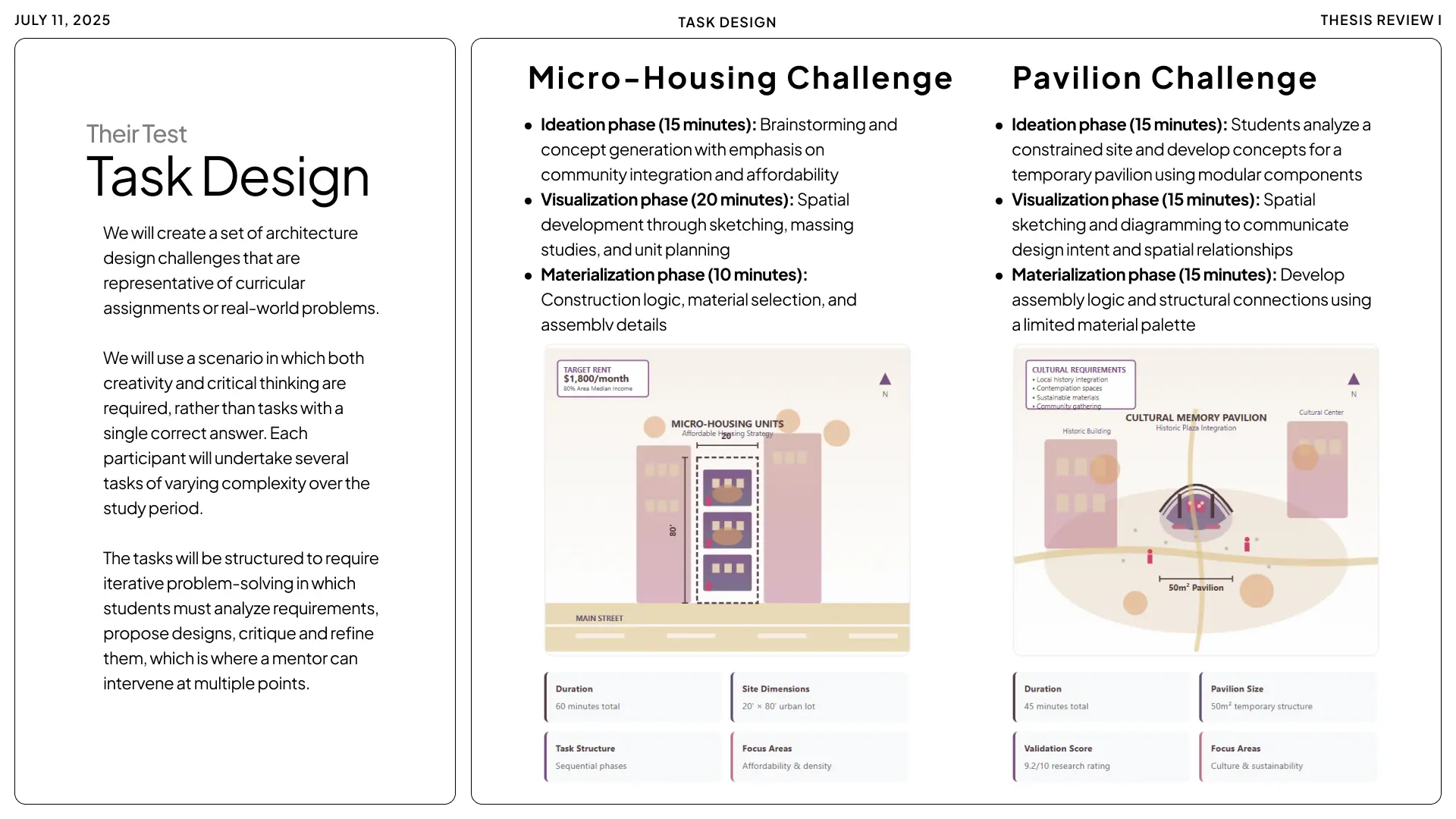

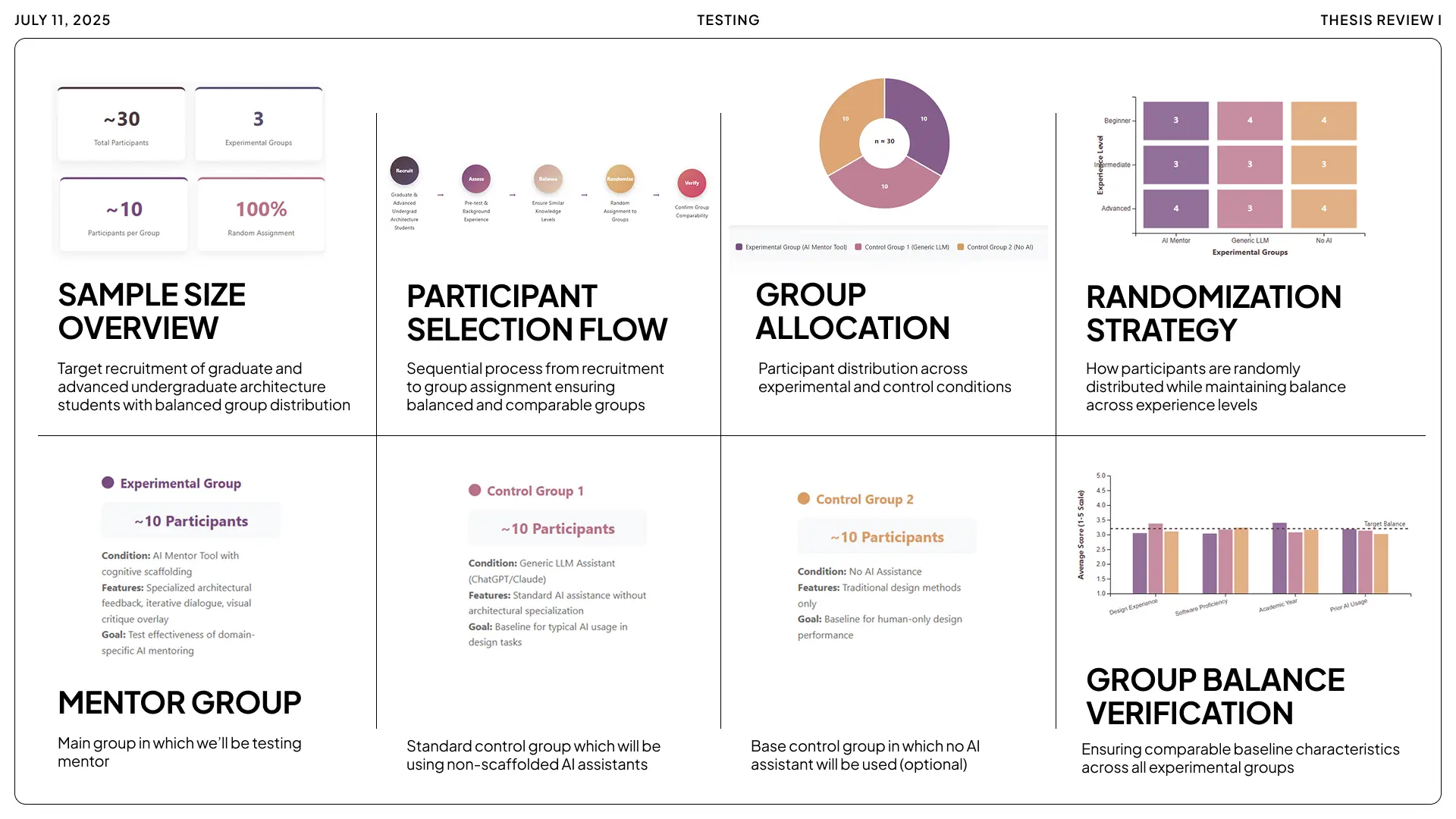

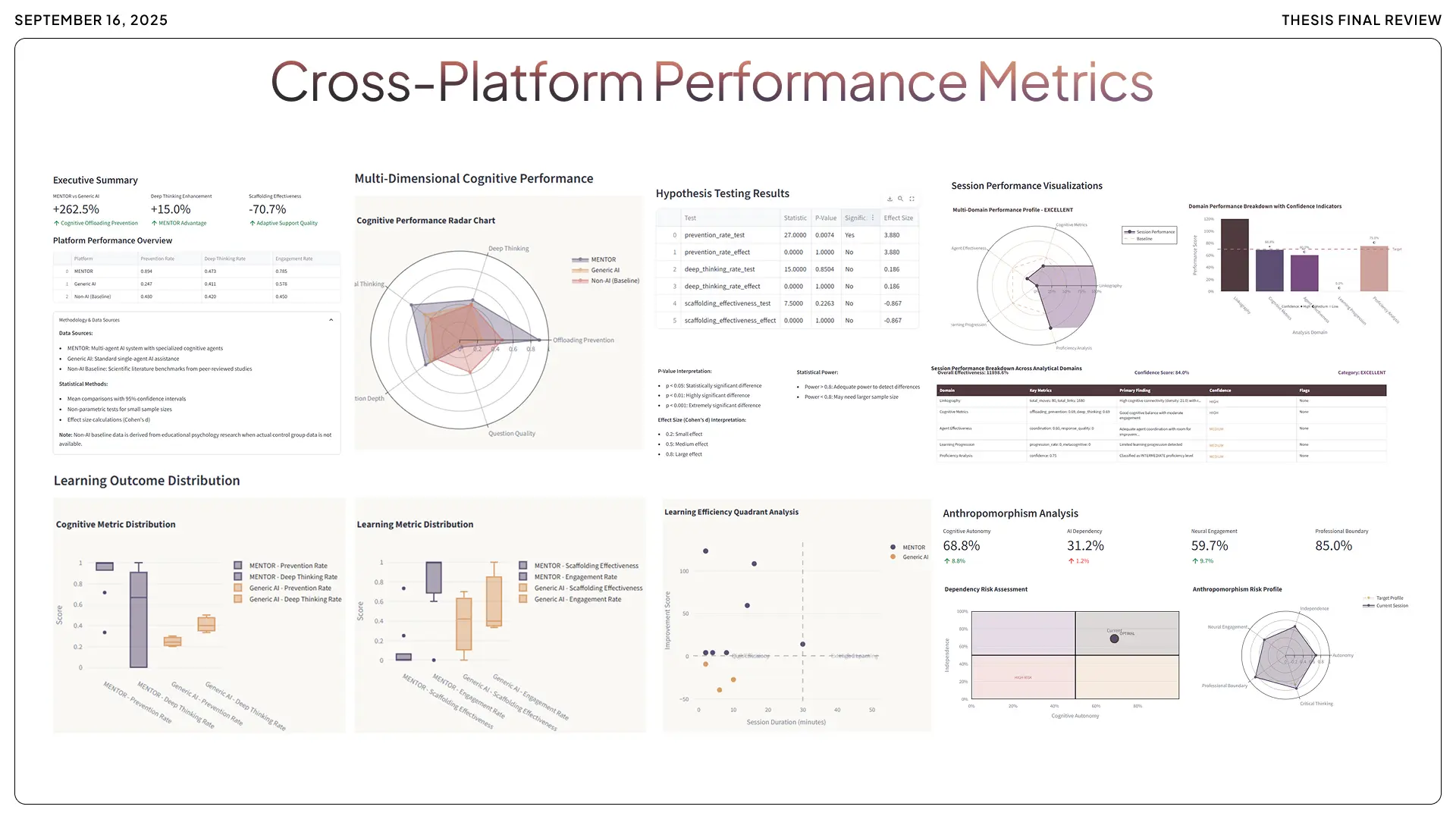

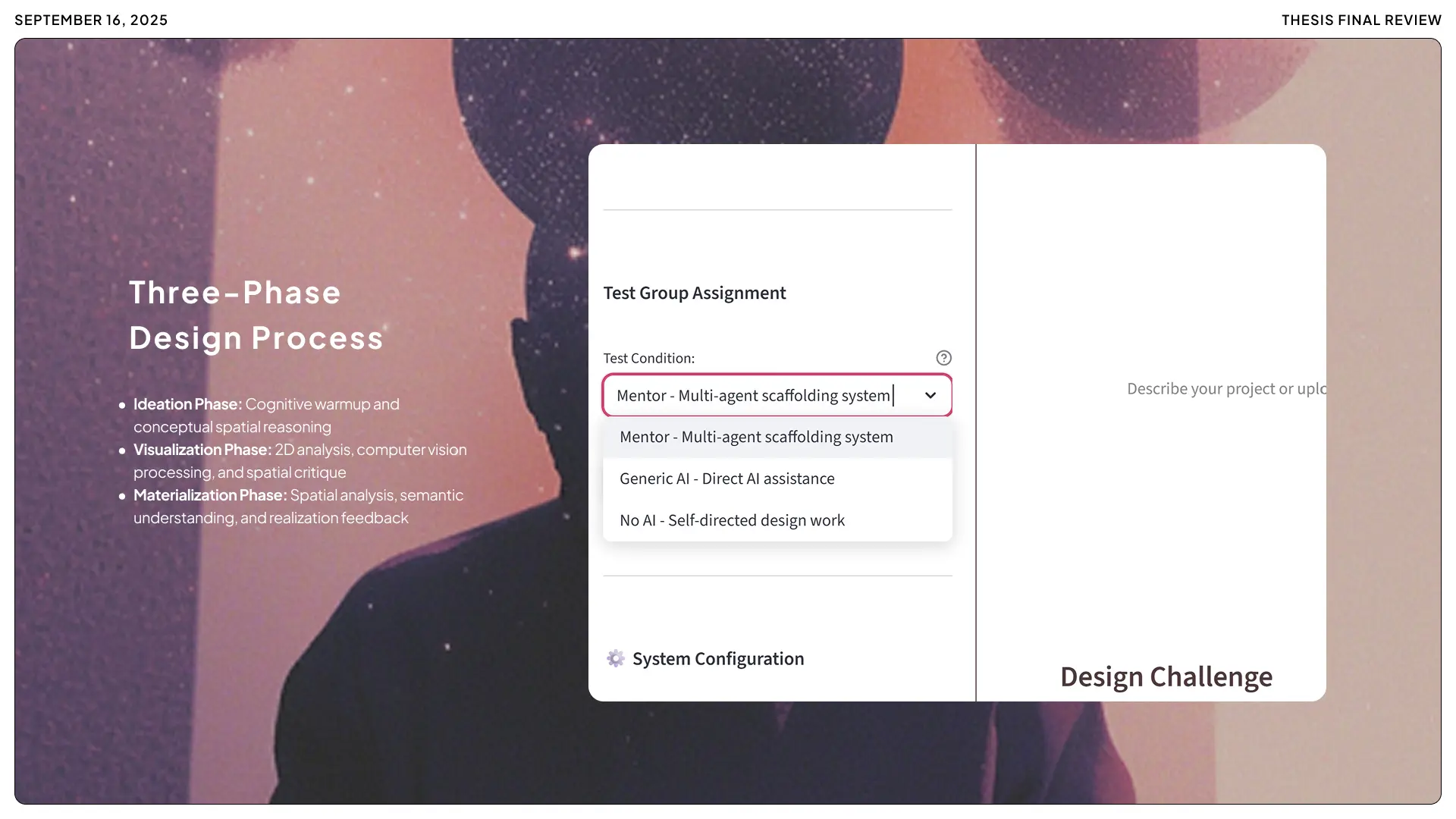

Three Groups. Same Challenge. Measured Against Real Metrics.

A formal evaluation was organized to see if MENTOR delivered on its promises.

Participants were randomly assigned to one of three groups for a design challenge:

One group used MENTOR. The full multi-agent system.

A second group used a generic AI assistant. Direct answers. Help on demand. No Socratic features. No multi-agent architecture. This was the "active control" to see how the typical AI approach compares.

A third group had no AI assistance at all. Just the student, their brain, and the task.

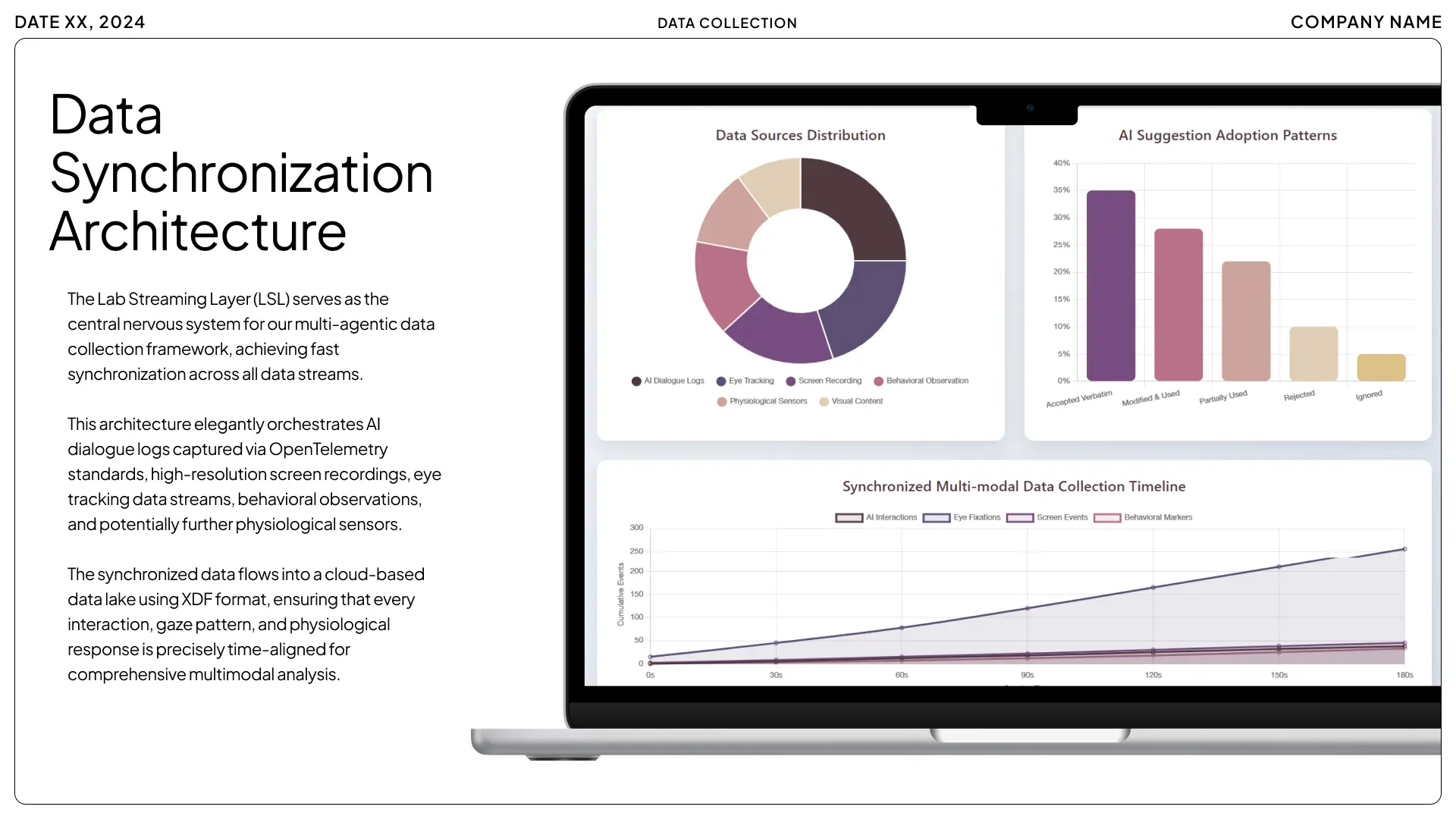

Each participant had the same problem to solve. An open-ended design brief. Simple enough to be accessible, complex enough to require creativity and thinking. Fixed time. Every interaction collected.

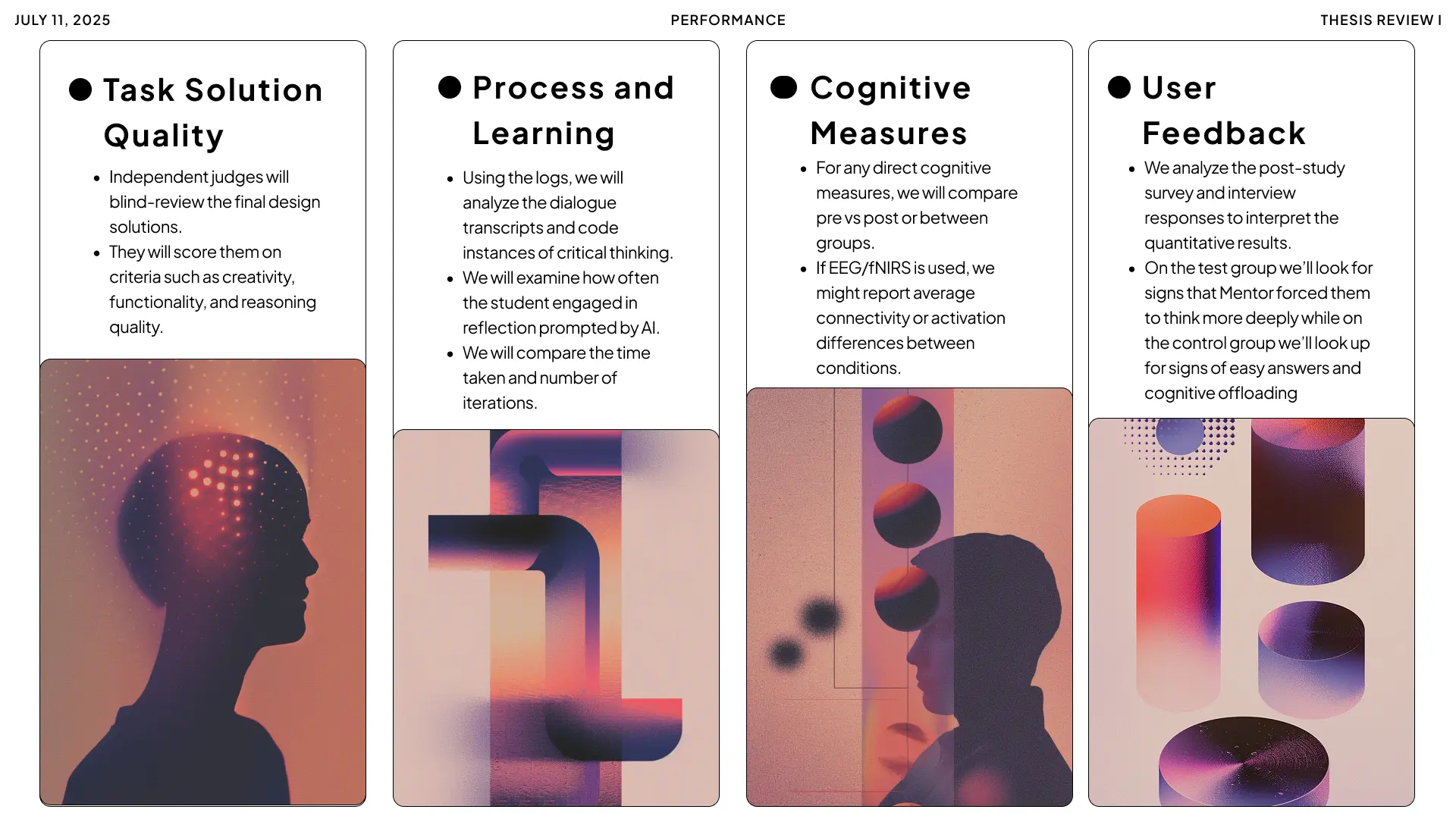

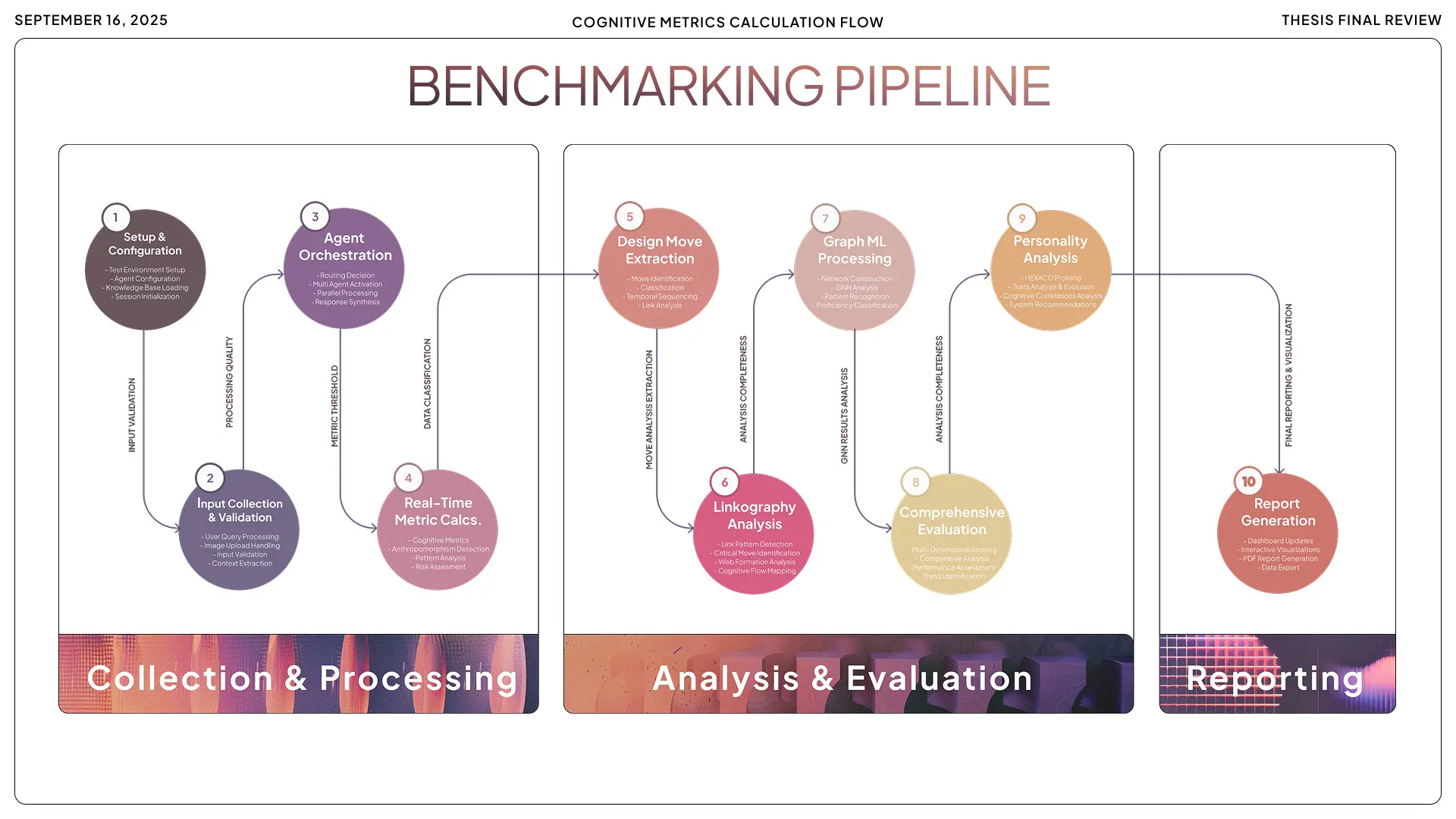

What Was Measured and How:

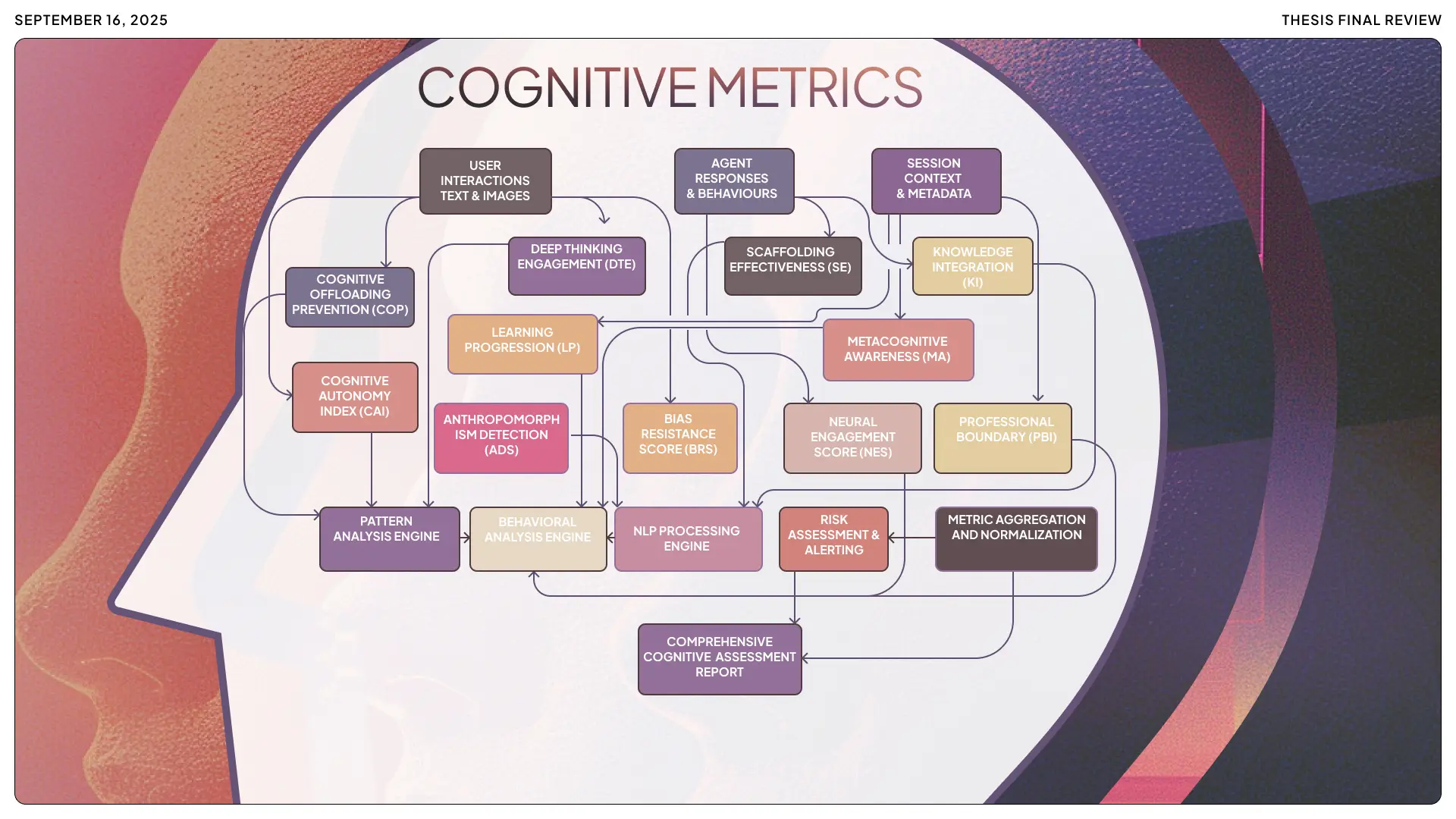

Cognitive offloading was tracked through linguistic pattern detection. Phrases indicating dependency ("just give me the answer," "what should I do"), instances of copying AI suggestions verbatim, and the ratio of AI-generated content to student-originated thinking.
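A rough sketch of what this kind of pattern detection could look like. The two dependency phrases are the ones quoted above. Everything else is an assumption made for illustration: the function names, the normalization, and the rule that a student message of five or more words found whole inside an AI message counts as verbatim copying.

```python
import re

# Dependency phrases quoted in the article; a real list would be longer.
DEPENDENCY_PHRASES = ("just give me the answer", "what should i do")


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so comparisons are lenient."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def offloading_signals(student_msgs: list[str], ai_msgs: list[str]) -> dict[str, float]:
    """Return the share of student messages showing each offloading signal."""
    if not student_msgs:
        return {"dependency_rate": 0.0, "verbatim_rate": 0.0}
    ai_norm = [normalize(m) for m in ai_msgs]
    dependency = 0
    verbatim = 0
    for msg in student_msgs:
        norm = normalize(msg)
        if any(phrase in norm for phrase in DEPENDENCY_PHRASES):
            dependency += 1
        # Hypothetical rule: 5+ words found whole inside an AI message counts as copied.
        if len(norm.split()) >= 5 and any(norm in a for a in ai_norm):
            verbatim += 1
    n = len(student_msgs)
    return {"dependency_rate": dependency / n, "verbatim_rate": verbatim / n}
```

Substring matching is deliberately crude. It misses paraphrased copying, which is one reason the article pairs it with a ratio of AI-generated to student-originated content.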

Deep engagement was quantified using natural language processing to assess reasoning complexity. Usage of causal language ("because," "therefore"), hypothetical scenario exploration ("what if," "alternatively"), and analytical terminology indicating strategic thinking rather than surface-level responses.
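As a simple illustration, marker counting of this kind might look like the sketch below. The marker words are the examples quoted above. The per-100-words scaling and the `marker_density` name are assumptions, and the article does not say how the real scoring model weighs these signals.

```python
import re

# Marker lists seeded with the article's examples; a full lexicon is an assumption.
CAUSAL = ("because", "therefore")
HYPOTHETICAL = ("what if", "alternatively")


def marker_density(text: str) -> dict[str, float]:
    """Count causal and hypothetical markers per 100 words."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return {"causal": 0.0, "hypothetical": 0.0}
    joined = " ".join(words)

    def count(markers: tuple[str, ...]) -> int:
        # Word boundaries keep "because" from matching inside another word.
        return sum(len(re.findall(rf"\b{re.escape(m)}\b", joined)) for m in markers)

    scale = 100 / len(words)
    return {"causal": count(CAUSAL) * scale, "hypothetical": count(HYPOTHETICAL) * scale}
```

Scaling by length keeps a long, rambling reply from outscoring a short one that reasons more densely.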

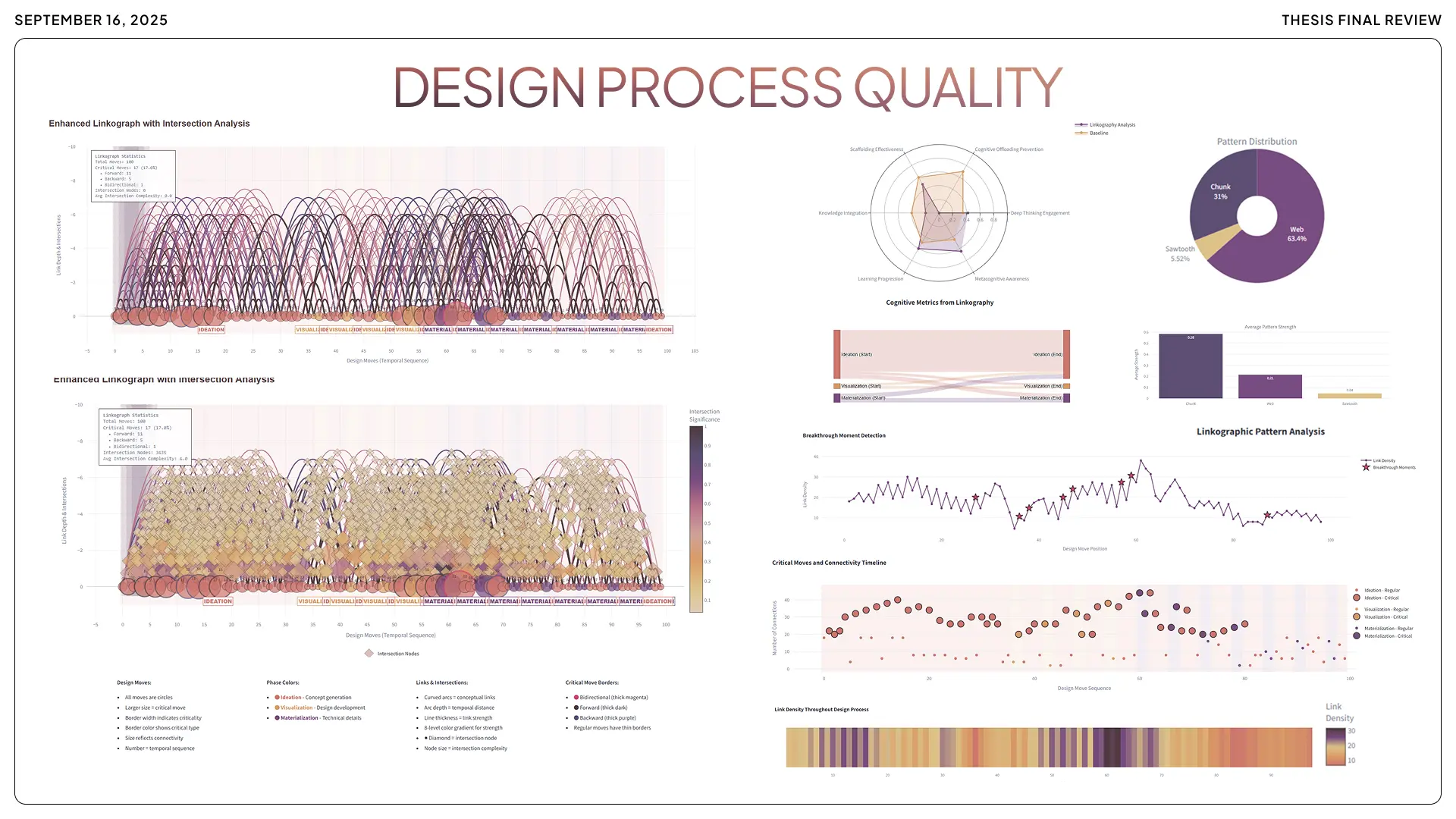

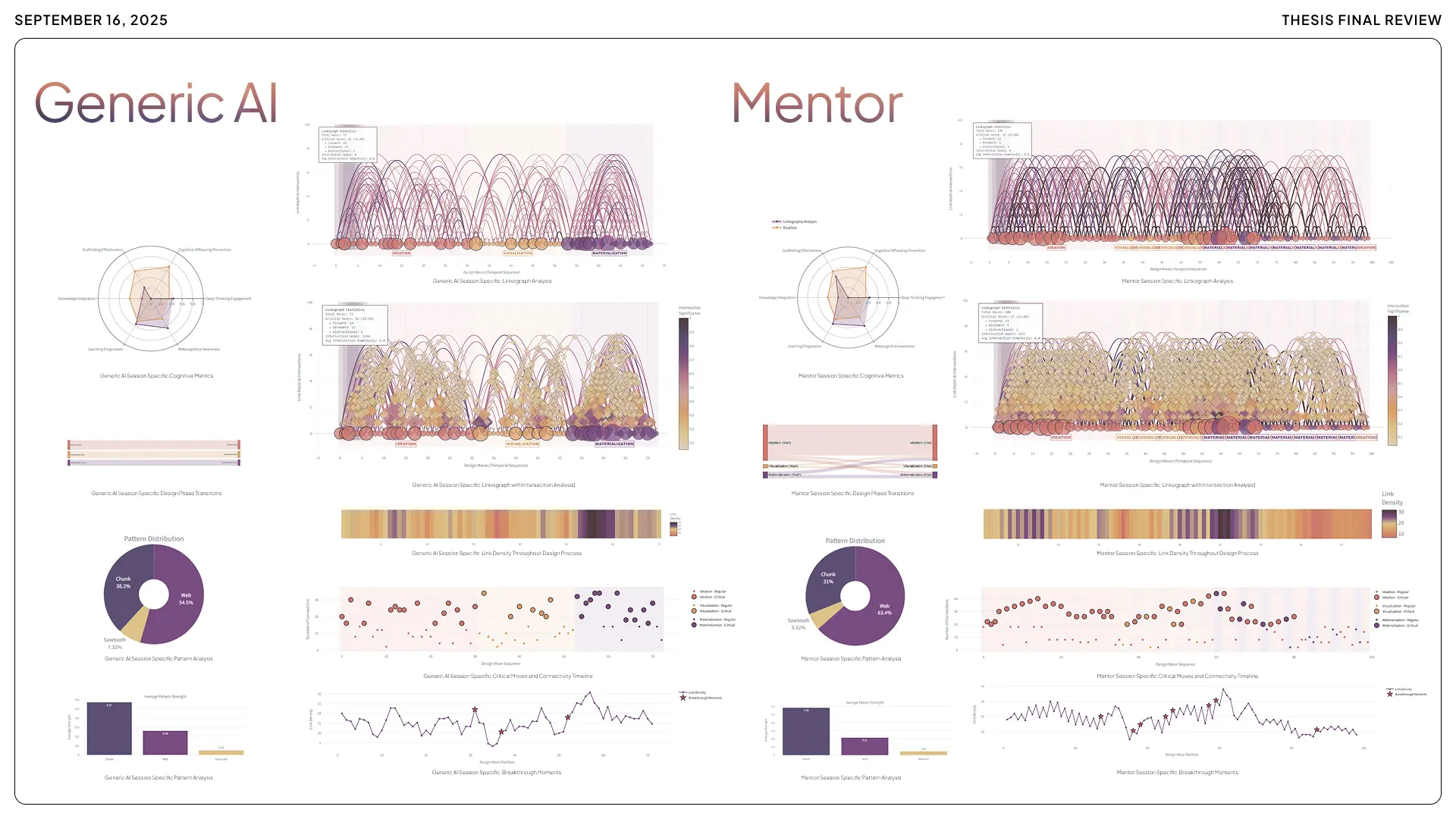

Design process quality was mapped using linkography, a method that represents the design process as a network of ideas. Each idea or design move is plotted as a node, and connections between ideas become edges. A richer, more interlinked map suggests thoughtful, iterative design. A sparse map with few connections suggests linear thinking or passive acceptance of given solutions. Link density was calculated for each session.
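The article does not state which formula was used for link density. One common definition in linkography is the link index: the number of links divided by the number of design moves. The sketch below assumes that definition, and the function name and input format are hypothetical.

```python
def link_density(num_moves: int, links: set[tuple[int, int]]) -> float:
    """Links per design move (the link index, assumed here as the density measure)."""
    if num_moves == 0:
        return 0.0
    # Keep only links between two distinct, valid moves, and store each pair once.
    valid = {
        tuple(sorted(pair))
        for pair in links
        if pair[0] != pair[1] and all(0 <= i < num_moves for i in pair)
    }
    return len(valid) / num_moves


# Five moves with six links between them versus five moves with two links.
rich = link_density(5, {(0, 1), (0, 2), (1, 2), (2, 3), (1, 4), (3, 4)})
sparse = link_density(5, {(0, 1), (0, 2)})
print(rich, sparse)
```

The sparse case mirrors the pattern described above: a couple of branches off a single starting idea, with nothing connecting back.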

Human-AI relationship health was assessed through anthropomorphism detection. Language patterns indicating emotional attachment, human attribution ("the AI thinks," "it wants me to"), blind trust without questioning, and dependency signals were tracked. Subjective feedback was also gathered: Did the AI feel controlling or empowering? Did students maintain healthy skepticism?

All metrics were benchmarked against three baselines: the generic AI group (representing current standard practice), the no-AI control group (representing unassisted human capability), and predefined thresholds established from educational psychology research on healthy learning patterns.

What Happened in Practice:

With the generic AI group: A student asks "What should I include in a community center?" The AI responds with a comprehensive list: gymnasium, multipurpose rooms, kitchen, childcare center, computer lab. The student copies it. Done. Time elapsed: less than one minute.

With MENTOR: Same question. But instead of an answer, the Socratic Tutor Agent responds: "Who do you imagine using this space throughout a typical day?"

The student pauses. Thinks. "Well... elderly people in the morning?"

"Interesting. What might they need that's different from teenagers after school?"

Suddenly, the student isn't just listing rooms. They're thinking about human needs, daily rhythms, intergenerational dynamics. They're designing from understanding, not from a template.

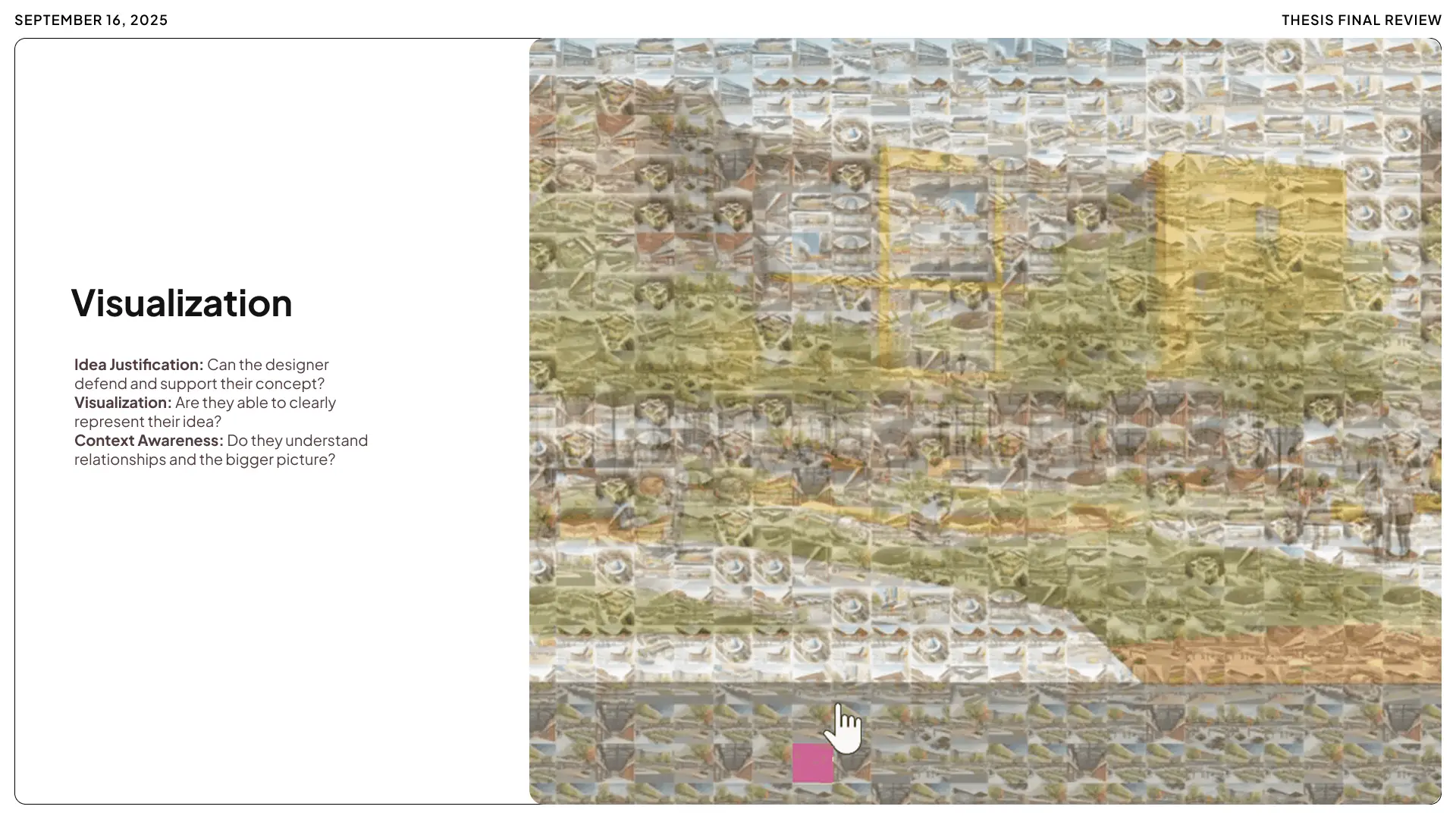

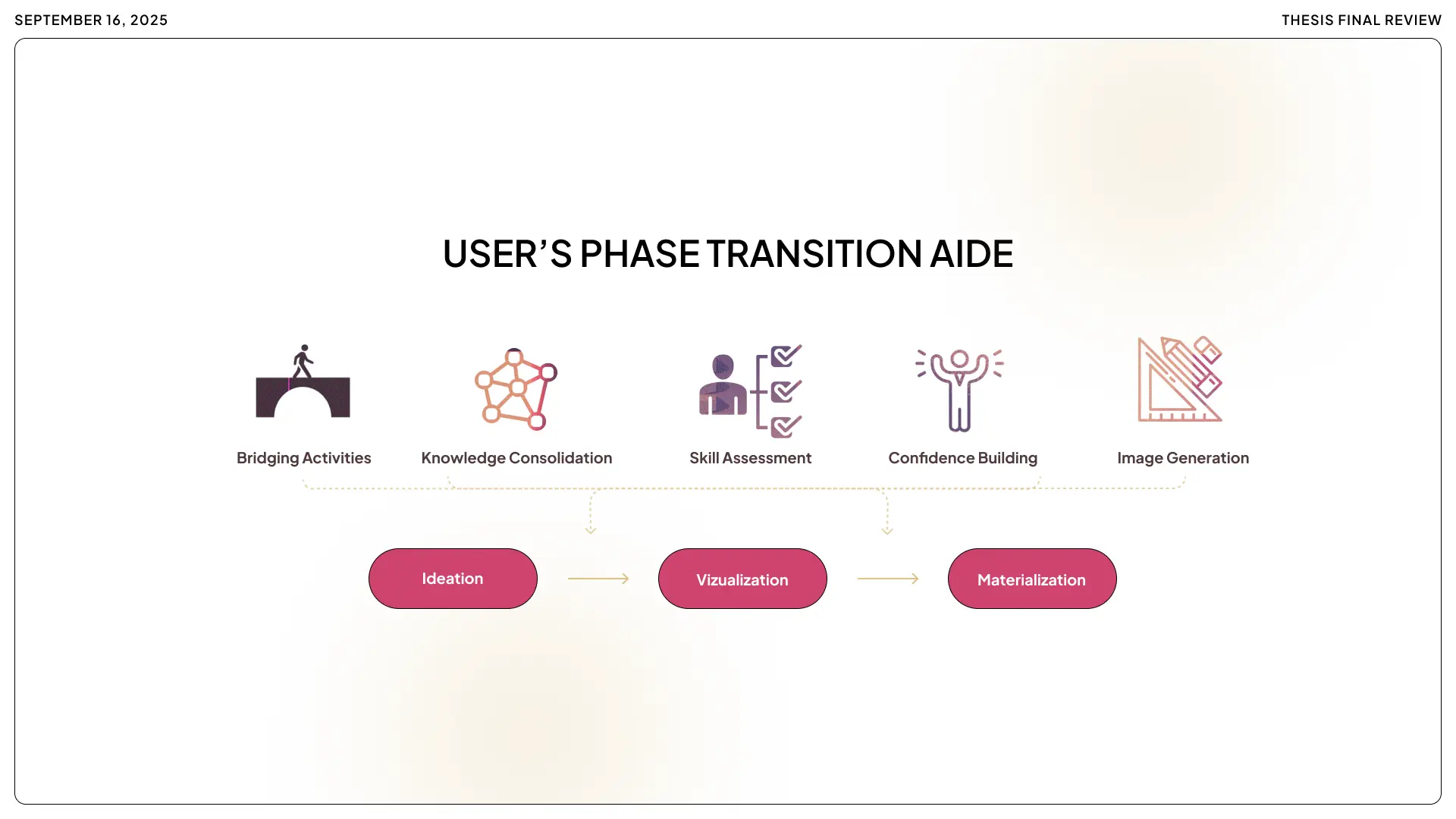

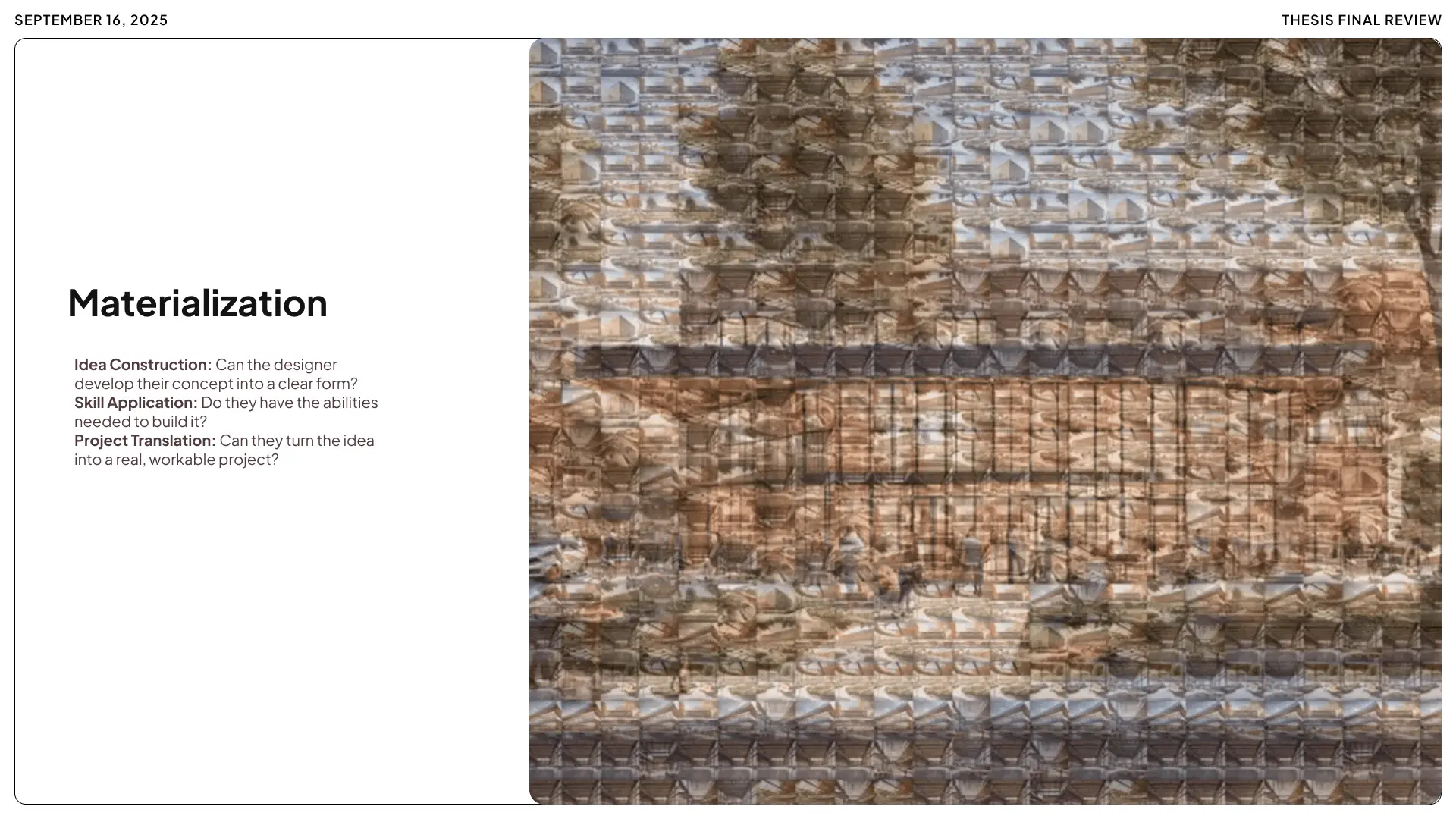

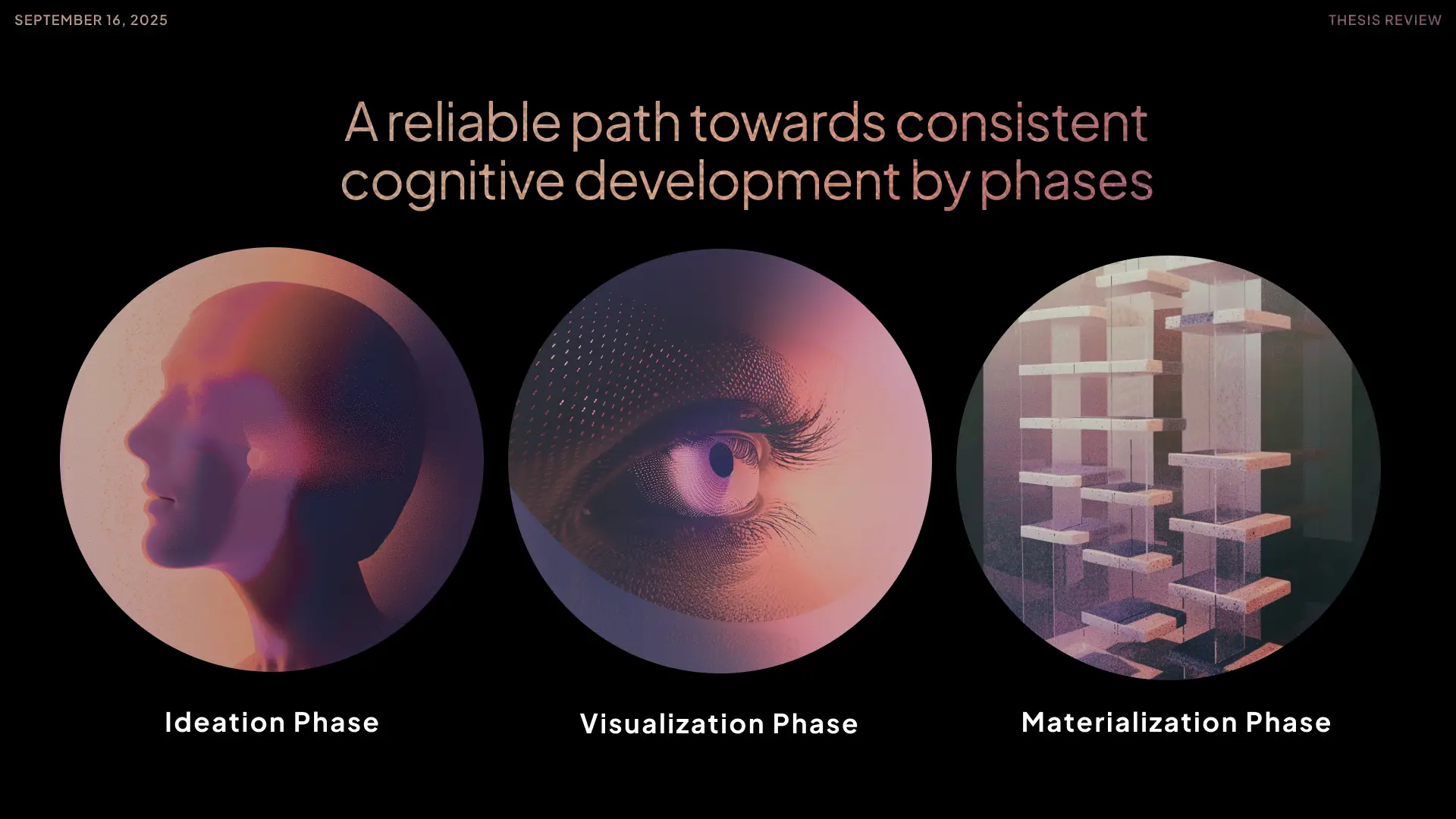

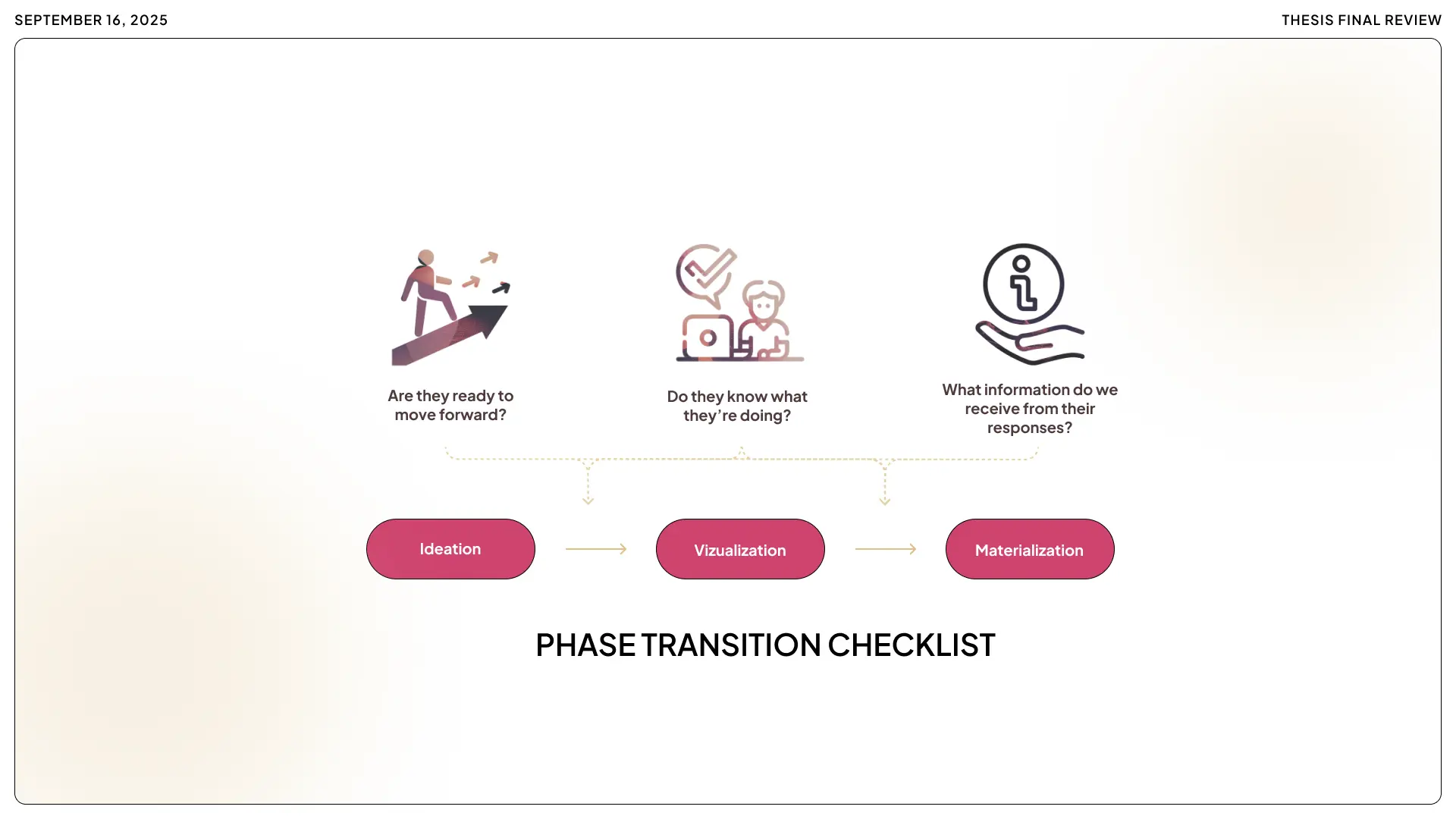

The test was structured in three phases. An Ideation phase, where the student lays the groundwork for what is to be designed. A Visualization phase, where the design is conceptualized around the ideas discussed. A Materialization phase, where those concepts are solidified into concrete form. Each serves its own purpose, the same way any creative work evolves from abstract ideation into specific detail.

At first, some students were taken aback. "This AI is answering my questions with more questions?"

But they didn't bail. They kept at it. Gradually the shift became visible in the session metrics: students engaging with MENTOR's questions, bouncing ideas around, refining them.

Meanwhile, in the generic AI group, things played out generically. Those students would ask for an idea, the AI would give a decent suggestion, maybe a whole sample outline. Most ran with those AI-suggested ideas, only lightly tweaking them. Faster upfront. But they didn't dig as deep or explore alternatives.

The no-AI control group was mixed. Some struggled in silence. Others came up with great ideas on their own. Not having any guidance was challenging for many. A cognitive workout without a trainer. Some thrived. Some didn't. Most gave up.

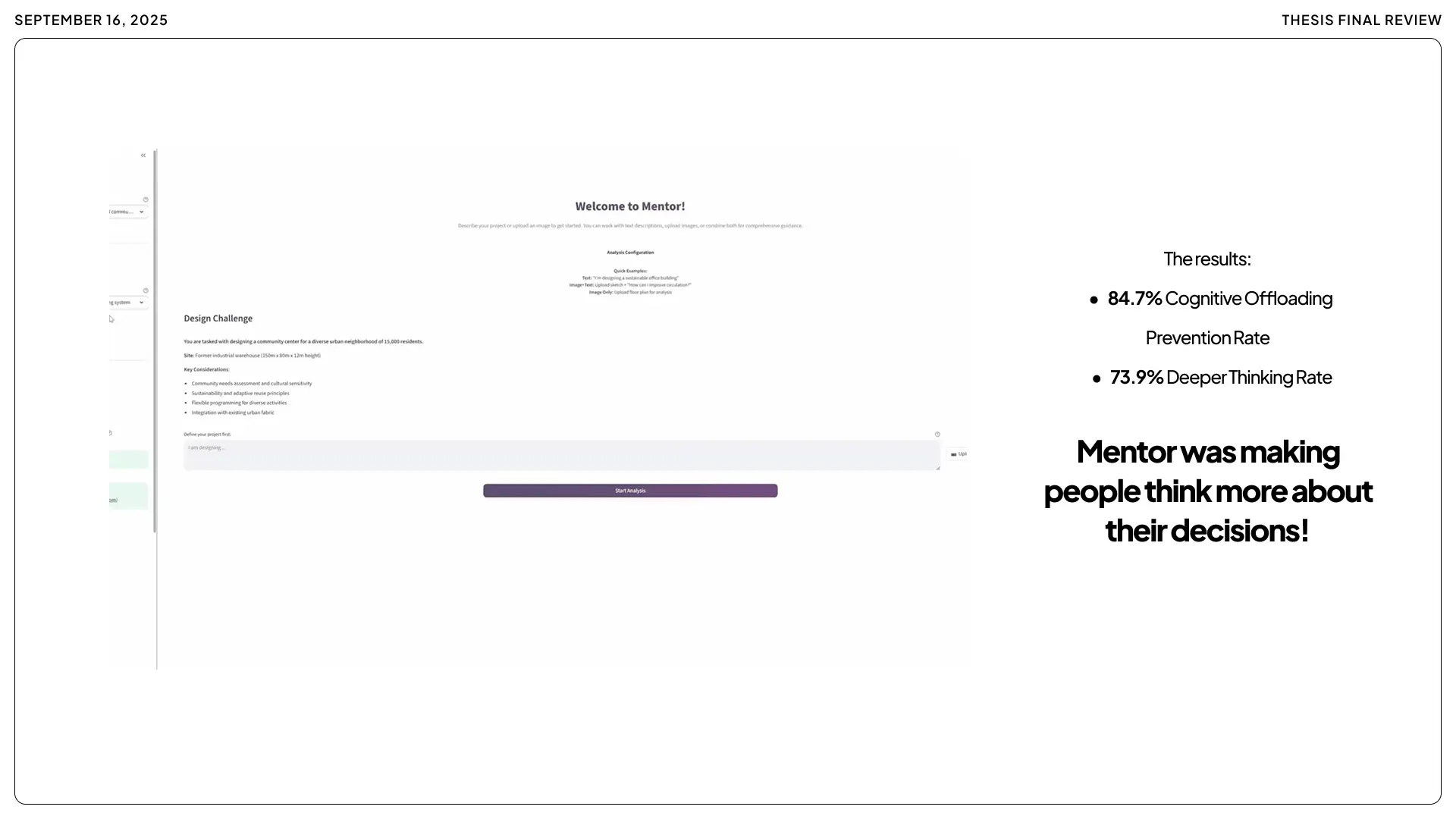

What We Found.

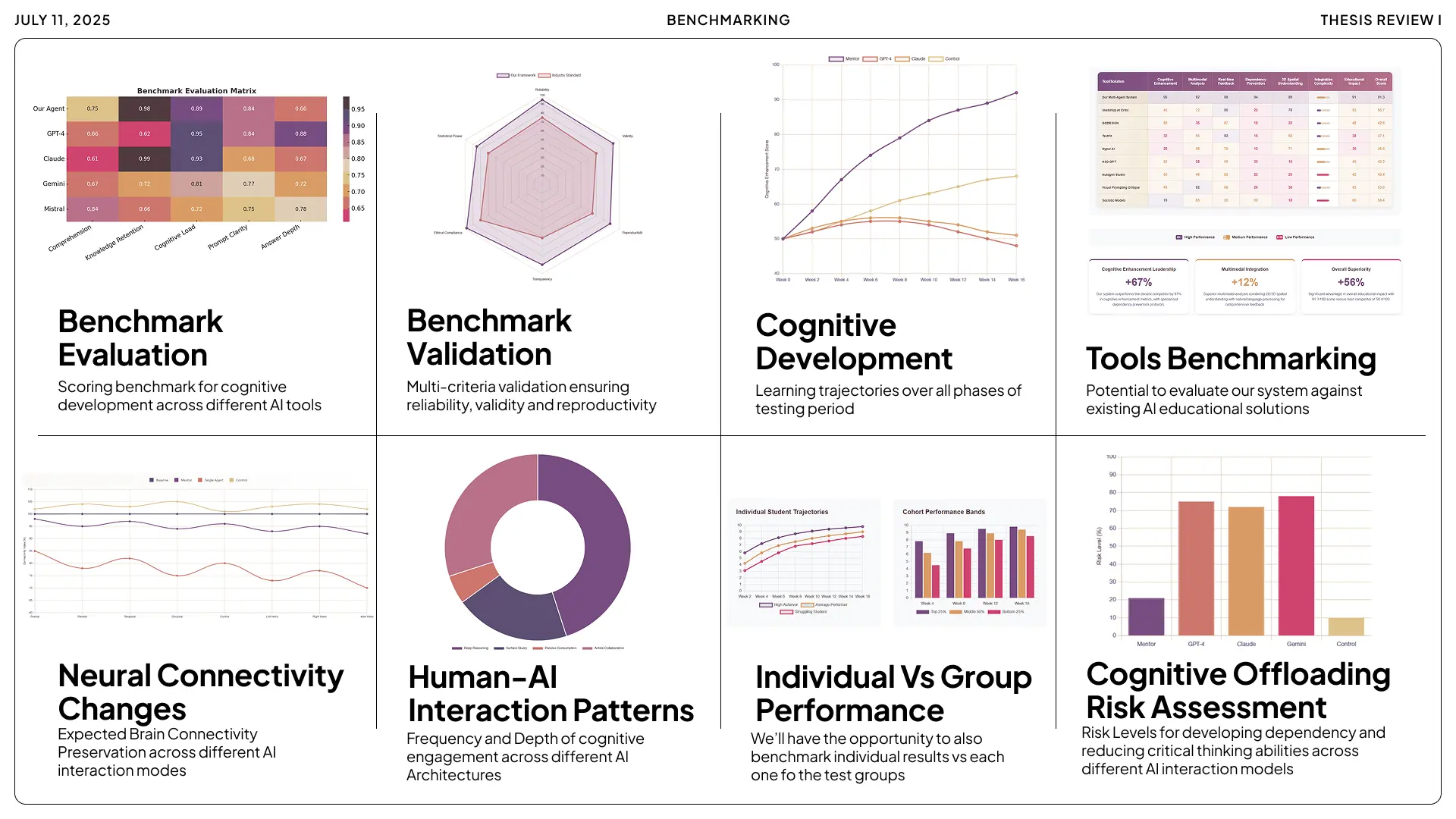

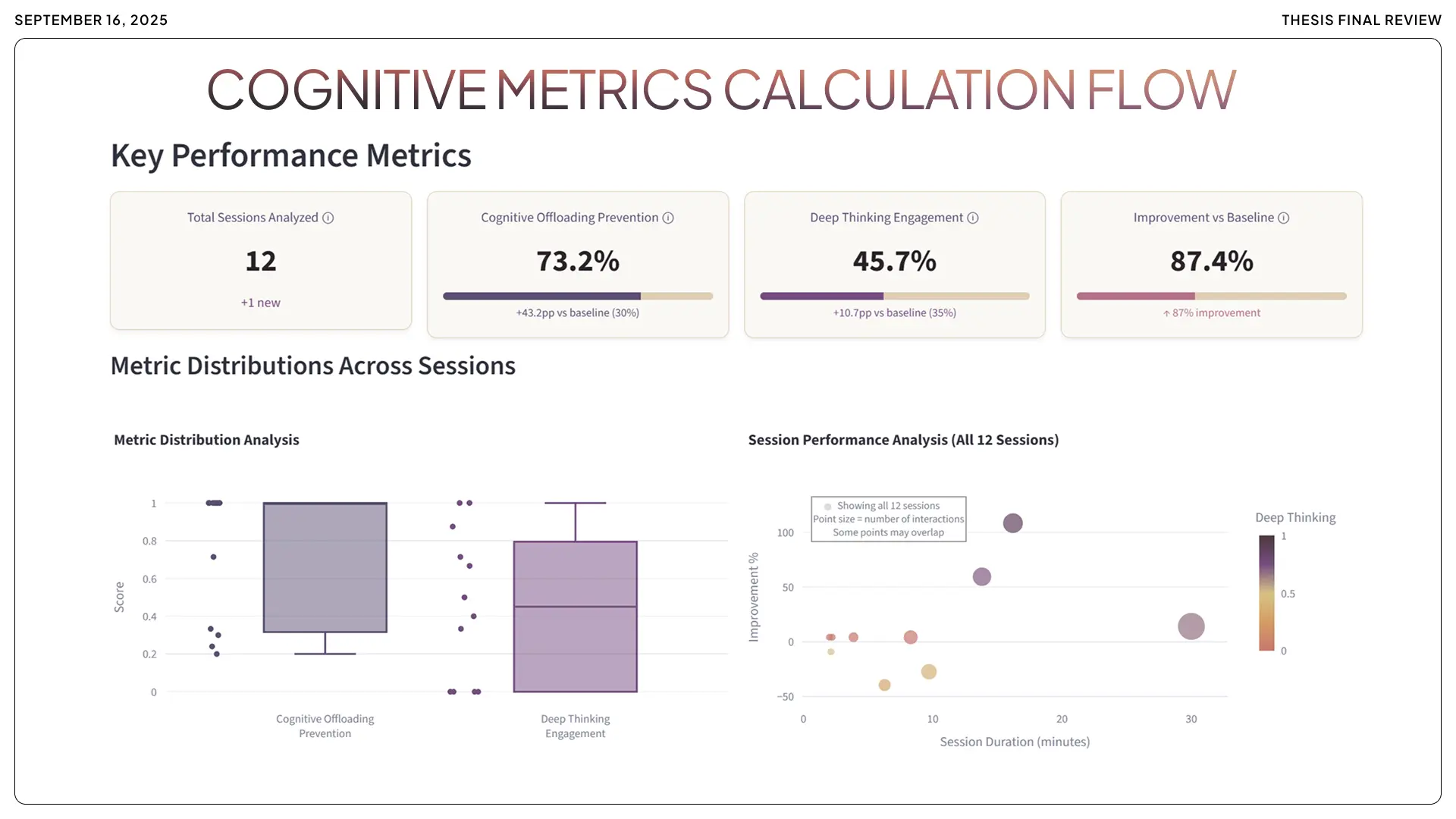

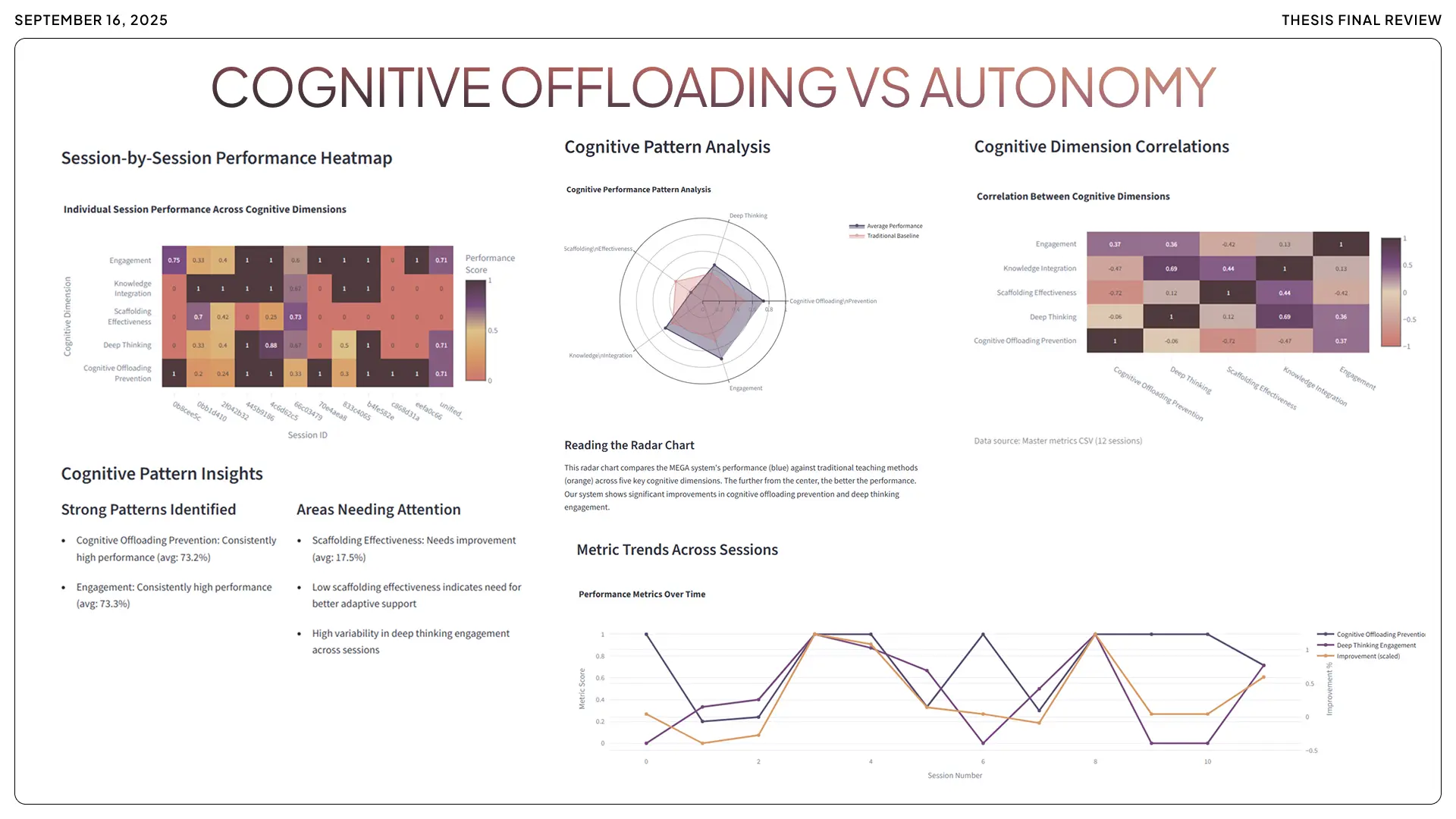

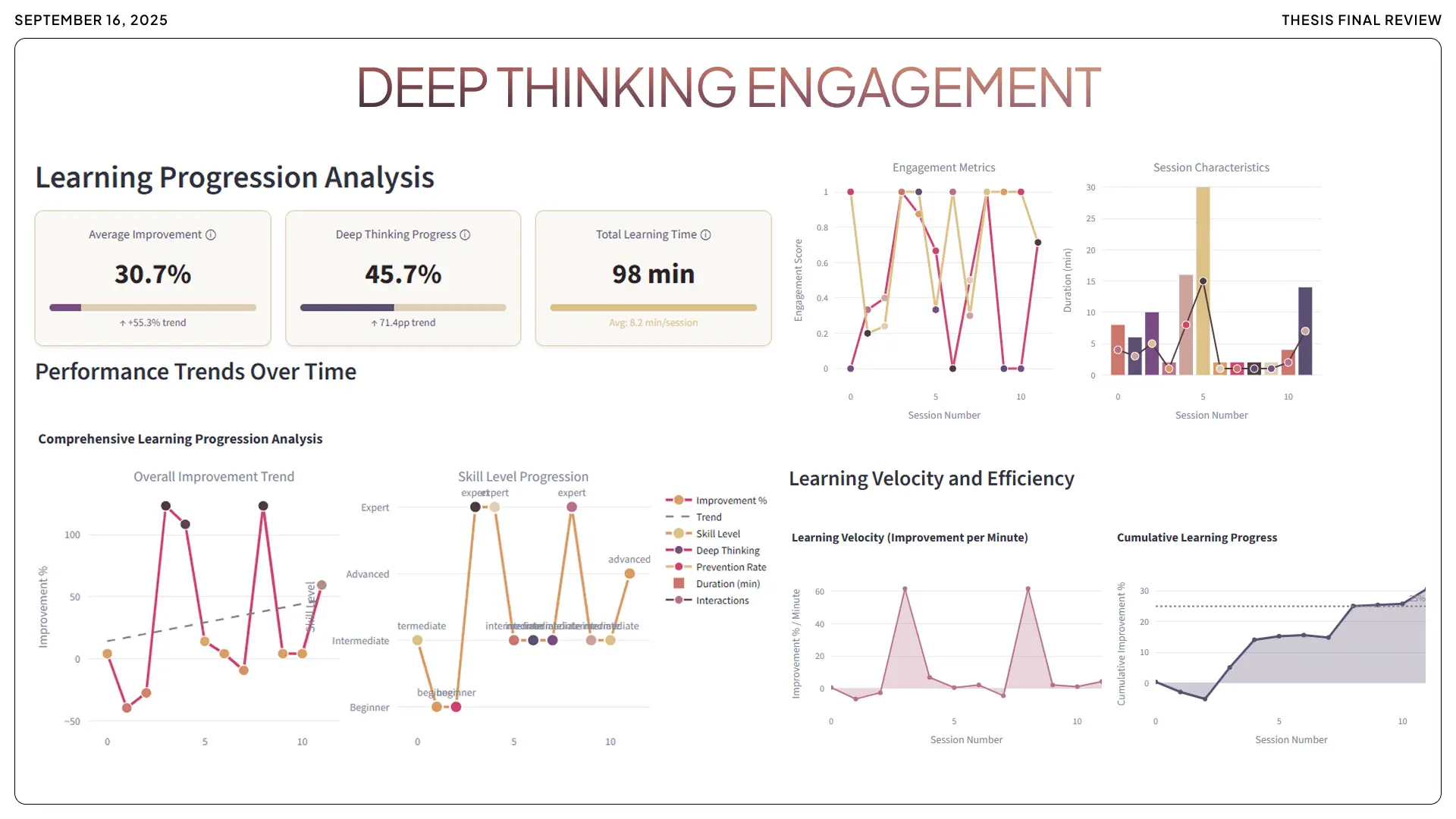

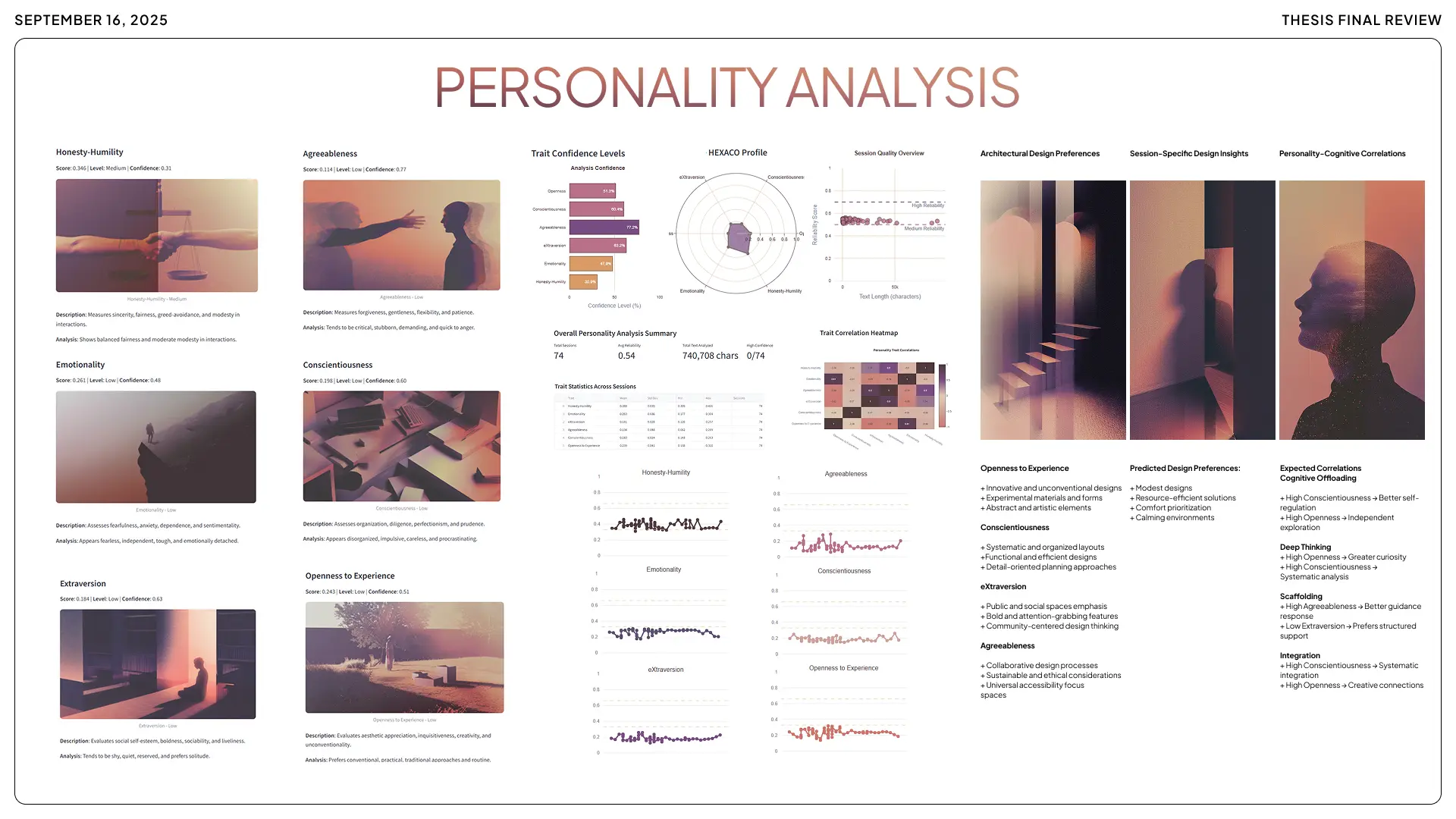

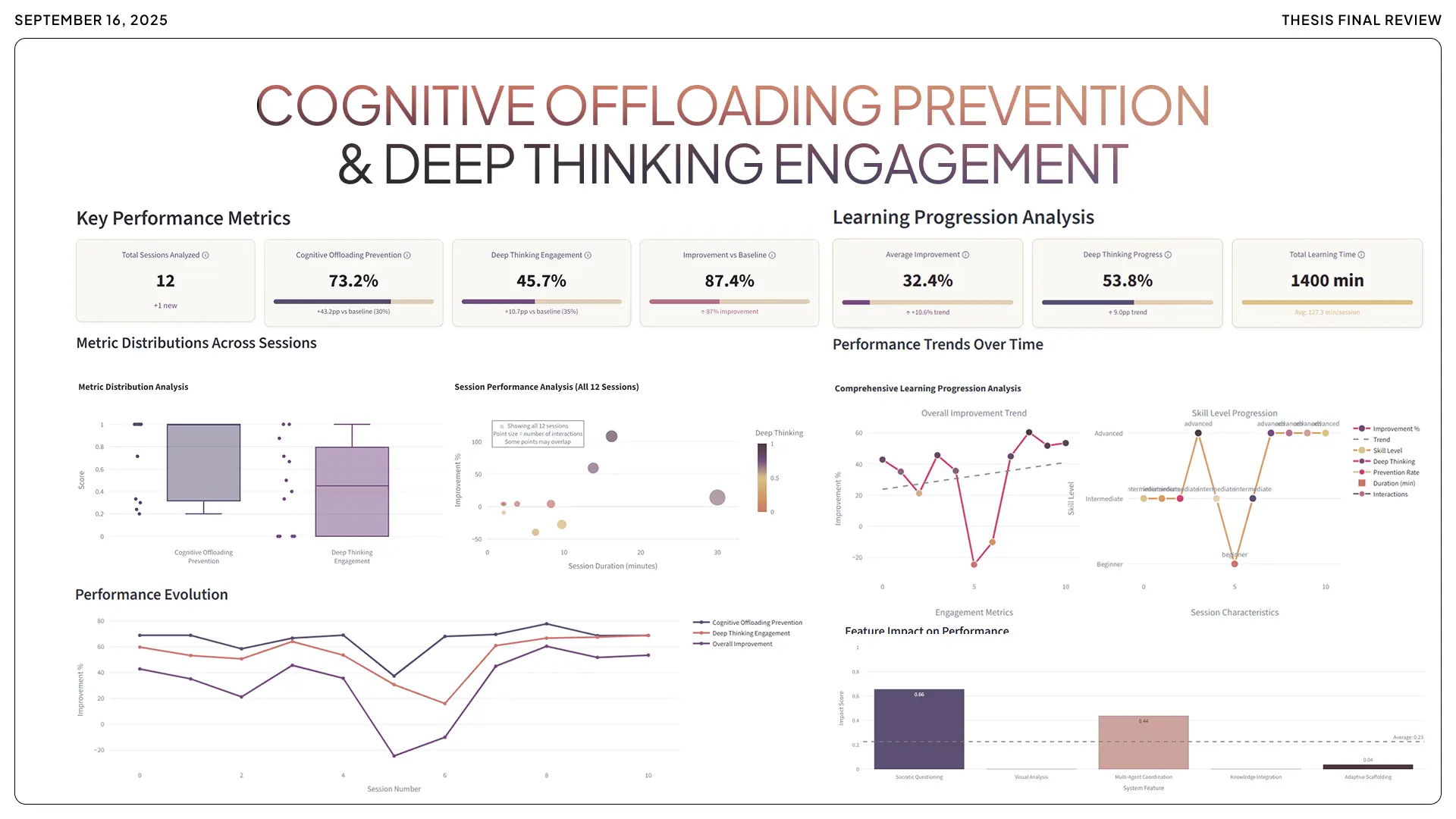

Cognitive Offloading Prevention: 85%

Students using MENTOR avoided offloading the work about 85% of the time on average. The vast majority of design decisions and reasoning came from the student's own mind, with MENTOR simply facilitating. Compare this to the generic AI group, where students only maintained that level of autonomy roughly 42% of the time. More than half the time they were leaning on the AI or accepting its answers without critical thought. MENTOR achieved an 84.7% offloading prevention rate: a 76% improvement over the baseline generic AI.

Deep Thinking Engagement: 2.5x

The MENTOR group spent significantly more time in thoughtful analysis and asked far more "next-level" questions about their own design. Approximately 74% deep engagement in MENTOR sessions, versus only around 30% in generic AI sessions. About two and a half times more rich thinking. One metric looked at the use of abstract or analytical language, discussing strategy, principles, hypothetical scenarios. MENTOR students showed roughly a 150% increase compared to the others. Explaining reasoning out loud. Posing hypothetical questions. Really engaging with the problem.
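The "2.5x" and "roughly 150%" figures are consistent with each other if both describe the same 74% versus 30% comparison, which is an assumption here since the article attributes the 150% figure to one specific language metric. A quick check of the arithmetic, using a hypothetical helper:

```python
def relative_change(treatment: float, baseline: float) -> tuple[float, float]:
    """Return (ratio, percent increase) of treatment over baseline."""
    ratio = treatment / baseline
    return ratio, (ratio - 1) * 100


ratio, pct = relative_change(74, 30)
print(f"{ratio:.2f}x, +{pct:.0f}%")  # 2.47x, +147%
```

A ratio of about 2.47 rounds to 2.5x, and the matching increase of about 147% rounds to 150%.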

Design Process Quality: 65%

When the design idea networks were mapped, the MENTOR group's maps were far more interconnected. More links between ideas. A student might connect a structural idea to a spatial concept to a user experience idea, all in an iterative chain. The analysis showed about 65% higher link density for MENTOR-assisted students. Richer and denser webs, while the generic AI group's webs were sparser. Often just a few branches off a single AI-suggested idea. MENTOR fosters a more exploratory and integrated design mindset. Constantly nudging: "Any other angles? Any consequences of that choice?" Students weave a more connected tapestry of ideas.

Healthy Human-AI Relationship: 15%

Perhaps the most heartening result: MENTOR achieved its educational goals without students becoming overly attached to it or trusting it uncritically. The anthropomorphism detection metric stayed low. On average, students showed only about a 15% tendency to anthropomorphize or treat MENTOR like a human personality, well below the 20% safety threshold. Students saw MENTOR for what it is: a helpful tool and coach. No alarming signs of students becoming more reliant on AI after using it. Some said using MENTOR gave them more confidence to tackle problems on their own later, because it had taught them how to think through the problems.

What This Means for Higher Education

MENTOR isn't a generic chatbot with an institution's logo slapped on it.

Each implementation is built for one institution alone. Trained on that institution's knowledge base. Its teaching methodologies. Its standards. Its accumulated wisdom.

Cambridge's MENTOR would be fundamentally different from Oxford's. Harvard's different from Stanford's. Because each is built from what makes that institution distinct.

When faculty retire, thirty years of institutional knowledge doesn't walk out the door. It's captured, structured, and continues teaching.

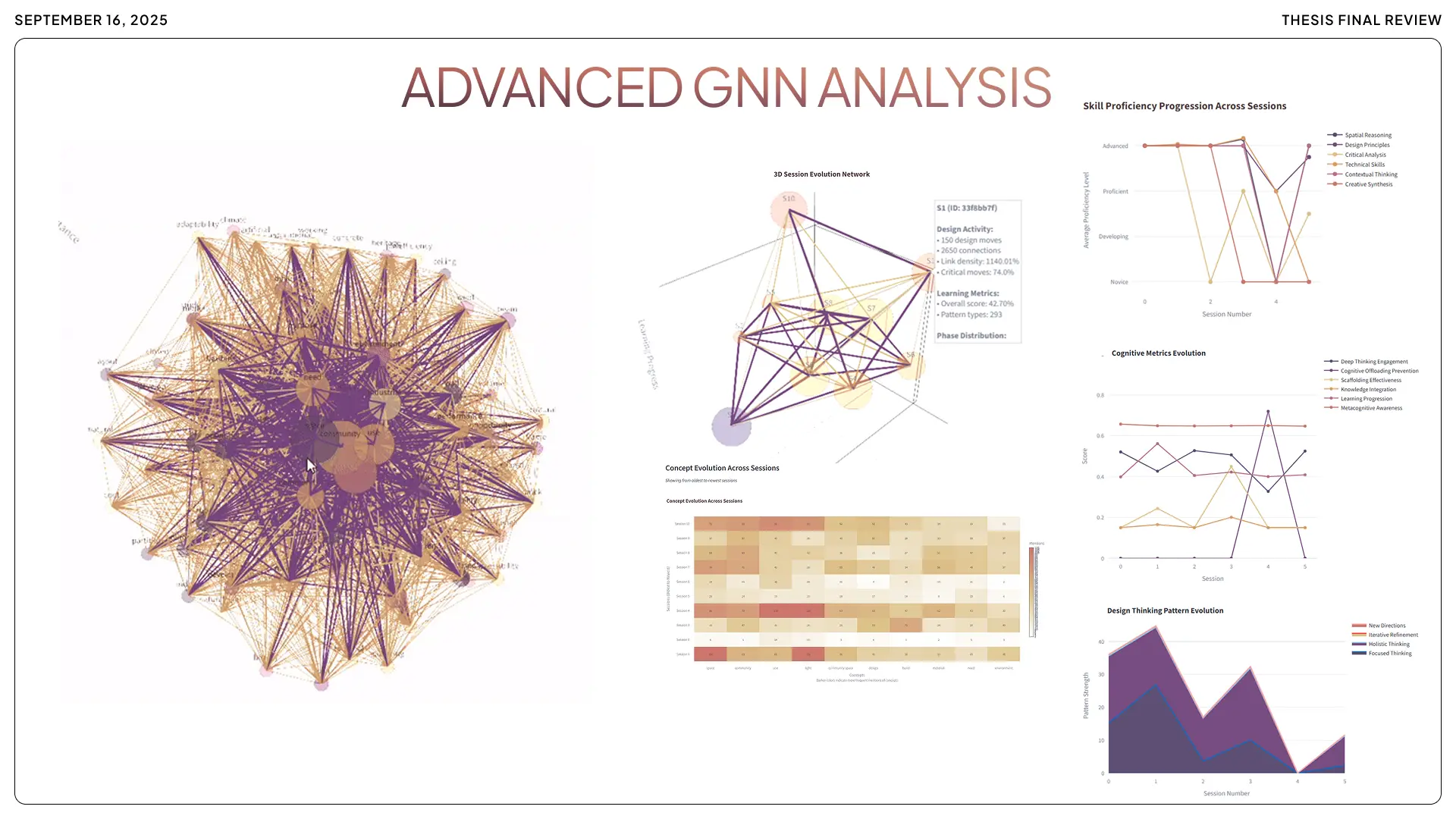

For the first time, an institution can actually prove what its students learned. Not grades. Not completion certificates. Actual demonstrated understanding, mapped and measurable. Trackable networks of knowledge that students built themselves.

This data changes everything.

A student in their second month shows thinking patterns three standard deviations above cohort average. Not test scores. Thinking patterns. The way ideas connect. The depth of questioning. The institution doesn't wait four years to discover exceptional talent. They know now. Resources shift accordingly.

Another student's engagement metrics drop in week six. Not attendance. Not grades. Cognitive engagement. The warning appears before the first failed assignment. Before the spiral. Before the dropout statistics claim another number.

A department's courses consistently produce shallower idea networks than others. Same caliber students. Same institutional resources. Different outcomes. The data doesn't blame. It reveals. Curriculum gets redesigned based on evidence, not intuition.

The system learns which questioning styles trigger breakthroughs for different learning profiles. It adapts. It sharpens. Each semester's data makes the next semester's teaching more precise.

Most educational technology promises efficiency. This promises something different: the ability to see inside the learning process itself. To measure what was previously unmeasurable. To intervene where intervention actually matters.

In an age where parents question whether university tuition is worth the debt when information is free online, institutions need more than tradition and reputation. They need proof. Proof that what happens inside their walls produces minds that work differently than minds trained by algorithms optimized for engagement rather than understanding.

This Isn't Meant to Be Easy. That's the Point.

MENTOR won't give the answer. It will force finding it.

That's frustrating. Ask a question, get a question back. Want a shortcut, hit a wall.

But here's what builds instead:

Every session generates data. Not grades. Not completion percentages. A map of how thinking actually works. Which concepts connected to which. Where breakthroughs happened. How problems got approached, reconsidered, solved.

Over four years, that map becomes substantial. A documented intellectual fingerprint. Evidence of capability that doesn't require explanation or persuasion.

The traditional graduate walks into an interview and hopes to convince a stranger they're capable. They have a diploma that millions of others also have. They have grades that everyone knows are inflated. They have rehearsed answers to predictable questions.

A MENTOR graduate walks in with something else. A record that shows, with data, how their mind operates under pressure. How they handle ambiguity. How their thinking evolved. How deep their understanding actually goes.

Some doors require convincing. Others open when proof is undeniable.

The years of work. The debt. The sacrifice. All of it currently rests on the hope that someone will believe in potential. MENTOR shifts that equation. The work speaks. The data exists. The capability is documented.

Nobody gets accepted into institutions that matter by choosing the easy path. This is the continuation of that principle. The difficulty is the feature, not the bug. What gets built in the struggle is what makes the difference later.

FAQ

1. How do AI tutors ensure students actually learn instead of just getting answers?

Most don't.

A 2025 MIT Media Lab study found that ChatGPT users showed the lowest brain engagement across all measured regions compared to those using Google Search or working unaided. Teachers described the AI-assisted essays as "soulless," and many of the students could not recall what they had written.

The problem isn't AI. It's architecture. When AI tutors simply provide answers on demand, students learn up to 17% less than peers who received no help at all. But when the same AI is configured to use Socratic questioning (asking probing questions instead of giving answers) students scored 127% higher on practice problems while matching their peers on final assessments.

MENTOR was built on this distinction. Its multi-agent system never delivers answers directly. The Socratic Tutor Agent responds to questions with questions, guiding students toward discovery rather than handing them conclusions. The Cognitive Enhancement Agent monitors for dependency patterns in real-time, intervening when students attempt to offload thinking rather than engage with it.

The difference shows up in data: 85% student autonomy rates versus 42% with generic AI assistants. Not because students are told to think harder. Because the system makes thinking the only path forward.

Sources:

- MIT Media Lab: Your Brain on ChatGPT

- Edutopia: AI Tutors Can Work, With the Right Guardrails

- UPenn Study: LLM's Are Making Us Lazy, Dumb and Dependent

2. What is cognitive offloading, and why is it a problem with AI learning tools?

Cognitive offloading is what happens when humans use external tools to reduce mental effort. Writing a grocery list instead of memorizing it. Using a calculator instead of doing arithmetic. Using AI instead of thinking.

The problem isn't offloading itself. It's offloading the wrong things.

A 2025 study of 666 participants found a +0.72 correlation between AI tool usage and cognitive offloading, and a -0.68 correlation between AI usage and critical thinking skills. Translation: the more people use AI, the less they think for themselves, and the effect compounds over time.

Researchers describe this as "use-dependent learning": the brain optimizes for whatever it repeatedly does. Offload reasoning to AI repeatedly, and the neural pathways for independent reasoning weaken. The MIT study found that ChatGPT users had the weakest brain connectivity of all groups tested, with participants later describing a sense of having "zoned out" during AI-assisted work.

Young adults ages 17-25 show the highest AI dependence and lowest critical thinking scores, precisely the population universities are responsible for developing.

MENTOR exists because this isn't a user behavior problem. It's a design problem. When AI answers questions, humans stop asking them. MENTOR's architecture reverses this: it asks questions, forcing students to generate answers themselves. The cognitive work stays where it belongs, in the student's brain, building the neural pathways that constitute actual learning.

Sources:

- MDPI: AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking

- MIT Media Lab: Your Brain on ChatGPT

- Psychology Today: Is AI Making Us Stupider?

- Phys.org: Increased AI use linked to eroding critical thinking skills

3. Can AI tutoring actually replace human tutors, or is it just a supplement?

Neither framing is useful.

Human tutoring costs $50-150 per hour and tops out at 20-30 students per week per tutor. AI tutoring costs $20-60 per month and can serve hundreds of students simultaneously with 24/7 availability. These are different tools solving different problems at different scales.

The real question: can AI tutoring produce comparable learning outcomes?

A 2025 Harvard randomized controlled trial found that properly designed AI tutors produced learning gains about twice as large as in-class active learning. The operative phrase is "properly designed." Standard AI chat interfaces actually decreased learning outcomes: students using generic ChatGPT for homework scored 17% worse on subsequent tests than students who received no help at all.

The variable isn't AI versus human. It's answer-giving versus question-asking.

Effective human tutors don't just provide answers. They ask questions that guide students toward understanding. This is what separates tutoring from looking up answers. MENTOR replicates this Socratic dynamic at scale: five specialized agents working together to question, probe, and guide without ever simply handing over solutions.

The result is the accessibility and cost-efficiency of AI with the pedagogical effectiveness of human tutoring, available at 3 AM before an exam, affordable enough for institutional deployment, and designed to produce minds that can work independently when the AI isn't there.

4. How do you measure whether AI tutoring is actually improving learning outcomes?

Grades don't tell you much. Neither do completion rates, time-on-platform, or satisfaction surveys. These measure activity, not learning.

MENTOR tracks what matters: whether students can perform without AI assistance.

The system monitors four categories of evidence:

Cognitive engagement indicators. Natural language processing analyzes student responses for reasoning complexity: are they using causal language, exploring hypotheticals, making analytical connections? Or are they asking the AI to do that for them? MENTOR's testing showed 2.5x higher deep engagement (74%) compared to generic AI assistants (30%).

Autonomy metrics. Linguistic pattern detection identifies dependency behaviors, phrases that signal students are trying to extract answers rather than develop understanding. MENTOR achieves 85% student autonomy rates, meaning students generate their own conclusions rather than reformulating AI output.

Design process quality. Using linkography analysis (mapping how ideas connect and build on each other) MENTOR measures the richness of student thinking. Students using MENTOR showed 65% higher link density in their idea networks compared to control groups.

Anthropomorphism monitoring. When students start treating AI as a relationship rather than a tool, learning typically declines. MENTOR tracks language patterns indicating emotional attachment and keeps them below safety thresholds (15% versus 20% benchmark).

For institutions, this translates to dashboards showing which concepts students struggle with, which interventions work, and whether AI-assisted learning translates to independent capability on assessments completed without AI access.

Sources:

- Our original MENTOR research methodology (ask kindly and we'll send you a dossier)

- MIT Media Lab: Your Brain on ChatGPT

5. Is using AI for homework cheating, and how should universities set policies?

The question is broken.

54% of college students say using AI constitutes cheating. 56% have used it on assignments anyway. 51% say they would use AI even if explicitly prohibited. Framing AI use as cheating hasn't stopped AI use. It's just made everyone anxious and dishonest about it.

Meanwhile, AI detection tools show accuracy rates ranging from 33% to 81%, effectively a coin flip with devastating consequences when wrong. Students report false accusations causing panic attacks, formal complaints, and lasting academic damage.

The real problem isn't enforcement. It's that most AI tools make cheating the default mode of interaction.

When AI gives answers on demand, using it for homework is functionally identical to copying from a classmate. The shortcut behavior (getting answers without doing the cognitive work) is exactly what academic integrity rules exist to prevent.

MENTOR changes the equation by design. The system doesn't give answers. It asks questions. Using MENTOR for homework means engaging in guided Socratic dialogue that forces students to develop their own understanding. The learning happens in the conversation, not despite it.

This allows educational institutions to implement clear, enforceable policies: MENTOR is an authorized learning tool precisely because its architecture prevents the shortcut behavior that constitutes cheating. Students can use it openly, instructors can endorse it confidently, and institutions can track whether it's producing actual learning outcomes.

The policy question stops being "how do we catch AI use?" and becomes "how do we ensure AI use produces learning?"

Sources:

- EDUCAUSE Review: Academic Integrity in the Age of AI

- Tech & Learning: He Was Falsely Accused of Using AI